Real-time object recognition using fixations to control a prosthetic hand

Community Stories

Author(s): Pupil Dev Team

December 15, 2017

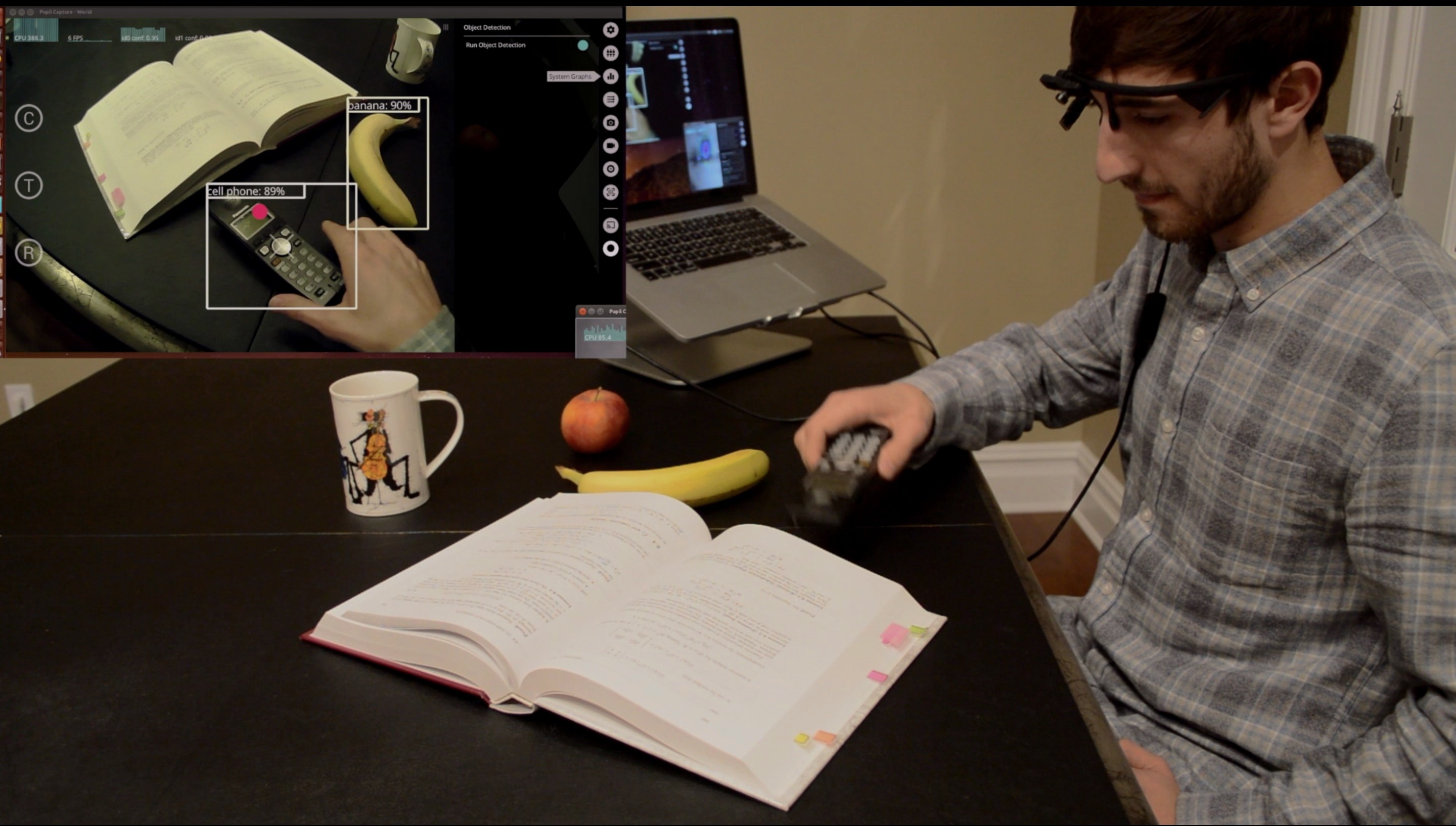

We are excited to share a project from a Pupil community member, Jesse Weisberg. Jesse has developed a plugin for Pupil that enables a prosthetic hand to form grasps based on what the wearer is fixating on in the scene. The plugin runs in real time on the CPU (10-15 fps)!

This plugin was developed as part of a project called Therabotics. Therabotics was created by a team of graduate students at Washington University in St. Louis to explore how computer vision can be used to improve prosthetic hand control.

This project is a great demonstration of how to extend Pupil and contribute back to the open source community!

Robotic Prosthetic Hand Controlled with Object Recognition and Eye-Tracking

The object detection plugin publishes the detected object that is closest to the fixation to a ROS topic. An Arduino Mega 2560 subscribes to the topic and controls the linear actuators in an OpenBionics 3D-printed hand to form a predefined grasp that is associated with the detected object.
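To make the selection step concrete, here is a minimal sketch of how one might pick the detected object nearest to the fixation and look up a grasp for it. This is not Jesse's code: the `Detection` class, the `closest_to_fixation` and `grasp_for` helpers, the pixel-coordinate convention, and the label-to-grasp table are all assumptions made for illustration, and the ROS publishing step is left out.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Detection:
    """Hypothetical detector output: a label and a pixel bounding box."""
    label: str
    box: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

    @property
    def center(self) -> Tuple[float, float]:
        x_min, y_min, x_max, y_max = self.box
        return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def closest_to_fixation(
    detections: Sequence[Detection], fixation: Tuple[float, float]
) -> Optional[Detection]:
    """Return the detection whose box center is nearest to the fixation point."""
    if not detections:
        return None
    fx, fy = fixation
    return min(
        detections,
        key=lambda d: math.hypot(d.center[0] - fx, d.center[1] - fy),
    )


# Assumed mapping from object label to a predefined grasp name.
GRASPS = {"cup": "cylindrical", "key": "lateral pinch"}


def grasp_for(detection: Optional[Detection]) -> Optional[str]:
    """Look up the predefined grasp for a detection, if one is defined."""
    if detection is None:
        return None
    return GRASPS.get(detection.label)


if __name__ == "__main__":
    detections = [
        Detection("cup", (0, 0, 10, 10)),
        Detection("pen", (100, 100, 120, 110)),
    ]
    target = closest_to_fixation(detections, fixation=(4, 4))
    print(target.label, grasp_for(target))
```

In a setup like the one described, the selected label (or grasp name) would be the message published for the hand controller to act on. Measuring distance to the box center is one simple choice; testing whether the fixation falls inside a box would be another.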

Check out Jesse's Pupil fork on GitHub. He provides a well-written README along with demo videos. Or jump straight to the "object detector app" in his repo.

We hope that others will build on top of Jesse's work and contribute back to his project!

Finally, if you have built something with Pupil, please consider sharing it with the community via chat on DiscordApp.