All software releases

Here you will find a log of all features, changes, bug fixes, and developer notes for Pupil Labs software.

April 1, 2024

We are excited to announce another round of updates for Pupil Cloud. This release focuses mostly on stability, but we’re also adding a few nice UI updates!

Enrichment timeline visualization

We have put more design work into the enrichment timelines to make it easier for researchers to discover the results of enrichments. Timelines for enrichments now visualize three states:

Processing: The processing state of the enrichment: Ready, Processing, or Done.

Localization: Visualizes where the reference image or surface is detected in the recording.

Gaze on reference: Visualizes where the subject is looking at the reference image or surface in the recording.

Automated recording recovery

We developed an automatic recovery tool that fixes recordings that were not playable on the Companion Device or that were directly exported from the Companion Device to desktop.

AOI Heatmap Metrics: Fixation Count

We’ve added an average fixation count metric to the AOI Heatmap visualization.

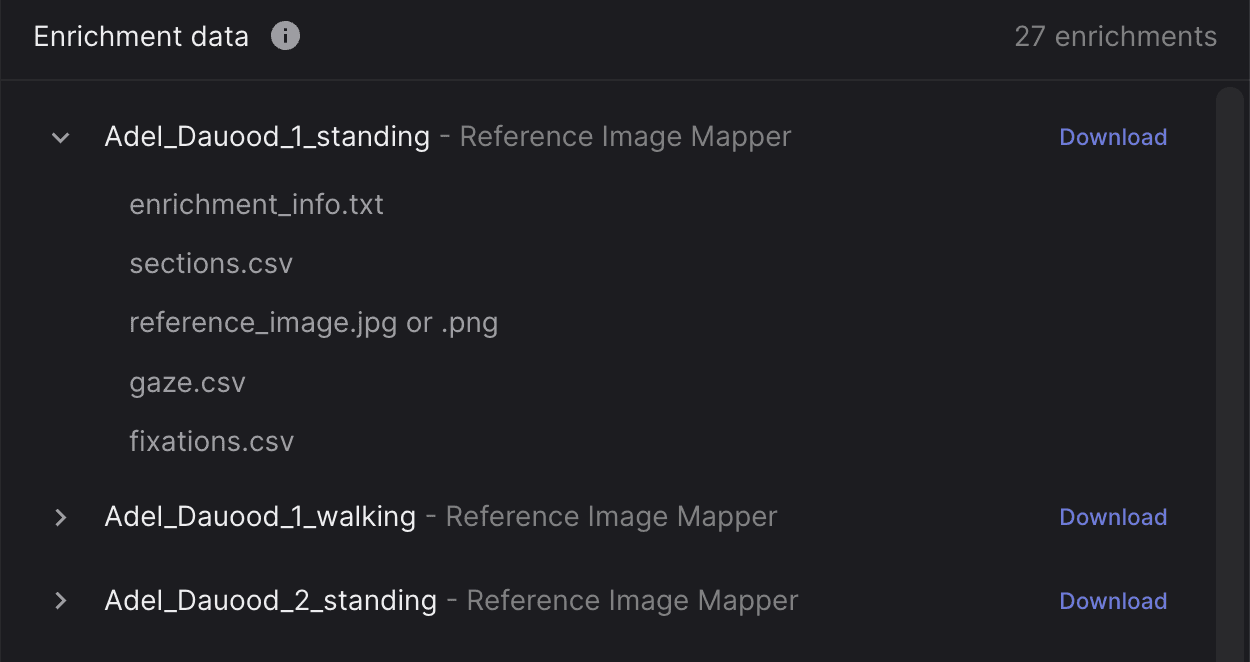

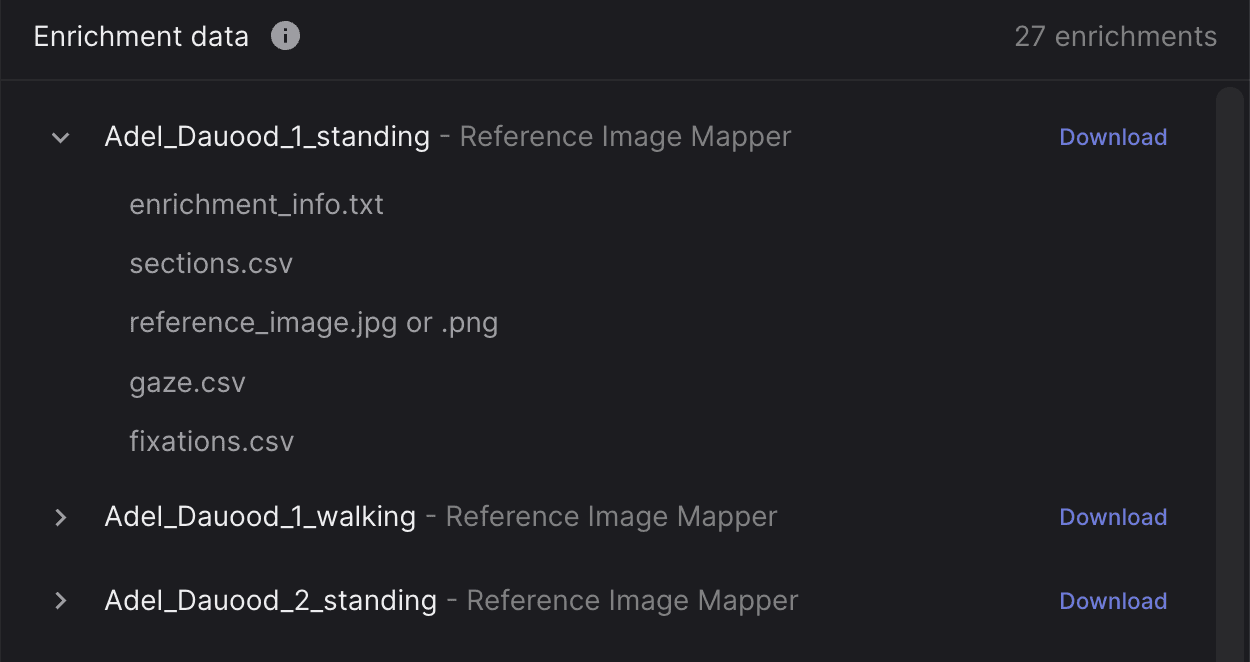

Marker Mapper Image

The Marker Mapper image now automatically selects an image where all markers are visible from within the enrichment section.

Workspace management

You can now leave workspaces. This can be useful if you are no longer contributing to a workspace and want to clean up the list of workspaces associated with your account. You can always join a workspace again by accepting an invite from a workspace admin.

Follow mode for playback as default

We’ve added a new feature so that the playhead is always in view during playback. This is now the default playback mode. If you want to switch back to the old style, you can click the “follow mode” button.

Improved Fullscreen Experience

We removed the top application bars when in fullscreen so that we can maximize the available space for fullscreen viewing.

January 24, 2024

We are excited to announce another round of updates for Pupil Cloud! We are introducing Area of Interest (AOI) analysis, improvements for the recording timeline, and several other smaller changes.

Areas of Interest

We are excited to launch AOI tools in Pupil Cloud! Use the AOI Editor in your Reference Image Mapper and Marker Mapper enrichments to draw AOIs on top of the reference image or surface. You can draw anything from simple polygons to multiple disconnected shapes.

AOI Metrics

After you have drawn AOIs you will automatically get CSV files of standard metrics of fixations on AOIs: total fixation duration, average fixation duration, time to first fixation, and reach.
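
The four metrics can be sketched in a few lines of Python. This is only an illustration of what the numbers mean, not the computation Pupil Cloud performs: the `Fixation` fields, the `aoi_metrics` helper, and the sample data are hypothetical, and "reach" is assumed here to mean the fraction of recordings with at least one fixation on the AOI.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    recording_id: str  # hypothetical recording identifier
    aoi: str           # hypothetical name of the AOI the fixation landed on
    start_s: float     # fixation start, in seconds since recording start
    duration_s: float  # fixation duration, in seconds


def aoi_metrics(fixations, aoi, recording_ids):
    """Compute illustrative metrics for one AOI across several recordings."""
    on_aoi = [f for f in fixations if f.aoi == aoi]
    if not on_aoi or not recording_ids:
        return {
            "total_fixation_duration_s": 0.0,
            "average_fixation_duration_s": None,
            "time_to_first_fixation_s": None,
            "reach": 0.0,
        }

    # Earliest fixation on the AOI, tracked separately for each recording.
    first_by_recording = {}
    for f in on_aoi:
        previous = first_by_recording.get(f.recording_id, f.start_s)
        first_by_recording[f.recording_id] = min(previous, f.start_s)

    durations = [f.duration_s for f in on_aoi]
    return {
        "total_fixation_duration_s": sum(durations),
        "average_fixation_duration_s": mean(durations),
        # Assumption: averaged over the recordings that reached the AOI.
        "time_to_first_fixation_s": mean(list(first_by_recording.values())),
        # Assumption: share of recordings with at least one fixation on the AOI.
        "reach": len(first_by_recording) / len(recording_ids),
    }


fixations = [
    Fixation("rec1", "logo", 1.0, 0.2),
    Fixation("rec1", "logo", 3.0, 0.4),
    Fixation("rec2", "logo", 2.0, 0.3),
    Fixation("rec2", "text", 0.5, 0.5),
]
recording_ids = ["rec1", "rec2", "rec3"]
metrics = aoi_metrics(fixations, "logo", recording_ids)
print(metrics)
```

The column names in the exported CSV files may differ from the field names used above, so check the export documentation before adapting this.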

AOI Heatmaps

Create visualizations of AOI metrics using an AOI Heatmap!

Enrichment Results in the Timeline

The recording timeline now contains visualizations for enrichments that indicate when they successfully tracked the reference image, surface, or face respectively.

This provides you with a high-level overview of when the stimuli were in the subject’s field of view.

Timeline Zoom

You can now zoom the timeline. Zoom out for an overview of the entire recording, or zoom in down to 1-second intervals to inspect the details.

Project Layout Changes

Enrichments now have a dedicated table. This enables you to search/sort enrichments and view more information about each enrichment in a single view. It also cleans up the sidebar!

Create Button

We added a “create” button in the enrichment and visualization views. Each button opens a modal that also contains more information about each enrichment and visualization. The aim is to make it easier to understand the setup and output of enrichments and visualizations.

Faster Downloads

Downloads of Marker Mapper and Reference Image Mapper exports are now 20x faster!

Search

You can now search for workspaces and projects by their ID.

Native Recording Data

The download option that provides recording data from Pupil Cloud in the same format in which it was saved on the Neon Companion Device is now called Native Recording Data.

Timestamps

We have switched to hardware-based timestamps for video feeds for increased accuracy. If you need old recordings in Pupil Cloud updated to hardware timestamps, reach out to info@pupil-labs.com.

August 7, 2023

Introducing the Analysis View

We are excited to introduce the Analysis View in Pupil Cloud! This is where high-level analysis, data aggregation, and advanced visualization will take place.

While this is just the first release and we are starting small, we have many features in the pipeline that will make the Analysis View one of the most important areas in Pupil Cloud in the near future!

What is available today?

Here is what has changed in this release:

In this first release, we are mostly rearranging existing features in preparation for bigger upcoming releases.

Analysis View: In each Project, you will see “Analysis” as a new menu item in the sidebar.

Heatmaps: We moved heatmaps out of enrichments and into Analysis. A Heatmap visualization is automatically added for each Reference Image Mapper or Marker Mapper enrichment.

Video Renderer: We renamed the Gaze Overlay Enrichment to Video Renderer and moved it into the Analysis view.

What’s next?

We have many exciting features planned for the Analysis View in the near future. This includes:

Areas of Interest (AOIs): We’re putting the finishing touches on an AOI tool. Draw AOIs on reference images and surfaces. Aggregate data on AOIs.

Metrics: Automatically calculate standard metrics for data on AOIs, such as “Average Fixation Duration”. Visualize them using various graphing options.

Video Renderer: More gaze visualization options (think cross-hair, eye video overlay). The ability to visualize and render data from multiple enrichments.

Sharing: Share links to videos and visualizations with others.

We will be adding more and more of these features in the coming weeks, so stay tuned for updates!

Updates and Fixes

Workspace collaboration: Now when you create a new workspace, you can immediately invite other editors to collaborate. Hopefully this makes this feature more discoverable! You can also invite users from workspace settings.

Enrichment recordings: The enrichment track now has a tooltip and visual style to clearly communicate if a recording is not matched/included in the enrichment.

May 10, 2023

Pupil Cloud UI Overhaul

Since the first release of Pupil Cloud in 2019 (time flies!), we have received a lot of feedback from the community and gained tons of experience using it ourselves. This led us to rethink the entire UI and do a major overhaul, which is now ready for release.

The new UI is available starting today at cloud.pupil-labs.com and we encourage you to check it out! In case you are not ready to make the switch yet, you can still access the old Pupil Cloud UI at old.cloud.pupil-labs.com.

We compiled a shortlist of the most important changes and the motivation behind them below. If you'd prefer a short video introduction, check out our new onboarding video!

There are some significant changes, and we would love to hear what you think about them! If you have any feedback or feature requests, please let us know. If you have any issues or questions, reach out via chat!

Navigation

The new navigation bar lets you move between workspaces and projects more quickly and easily. The breadcrumb structure keeps you informed of your current location.

Project Editor

We have completely redesigned the project and enrichment interaction. In the old design, there was a lot of back-and-forth between different views. With this update we aim to provide a streamlined pipeline.

Enrichment View

The new Enrichment View lets you see everything about your enrichment in one place, including its definition and visualizations of the results.

Downloads

We have added a new Downloads View to projects, which enables you to download all recording and enrichment data from a single place. The overview now includes a summary of the files that will be included in each download. In a future update, you will also be able to customize which files you wish to download.

Global Events

We are making changes to how events work to create more consistency. Previously, there were two types of events in Pupil Cloud:

Recording events: These events were created at recording time and uploaded to Pupil Cloud with the recording. They were then available in all projects to which the recording was added.

Project events: These events were created post hoc as part of a project and were only available within that project.

This system was confusing because events did not behave consistently. It also meant additional work for many users, as they had to define the same events repeatedly if they used the same recording in multiple projects.

With this update, we are introducing Global Events. Every event, no matter when or where it was created, will always be available with the respective recording. This allows you to define an event once and use it in every project.

As part of this change, we are promoting all existing events to global events. Users who previously defined the same event multiple times in different projects will now see duplicate events in their projects. We apologize for any inconvenience caused.

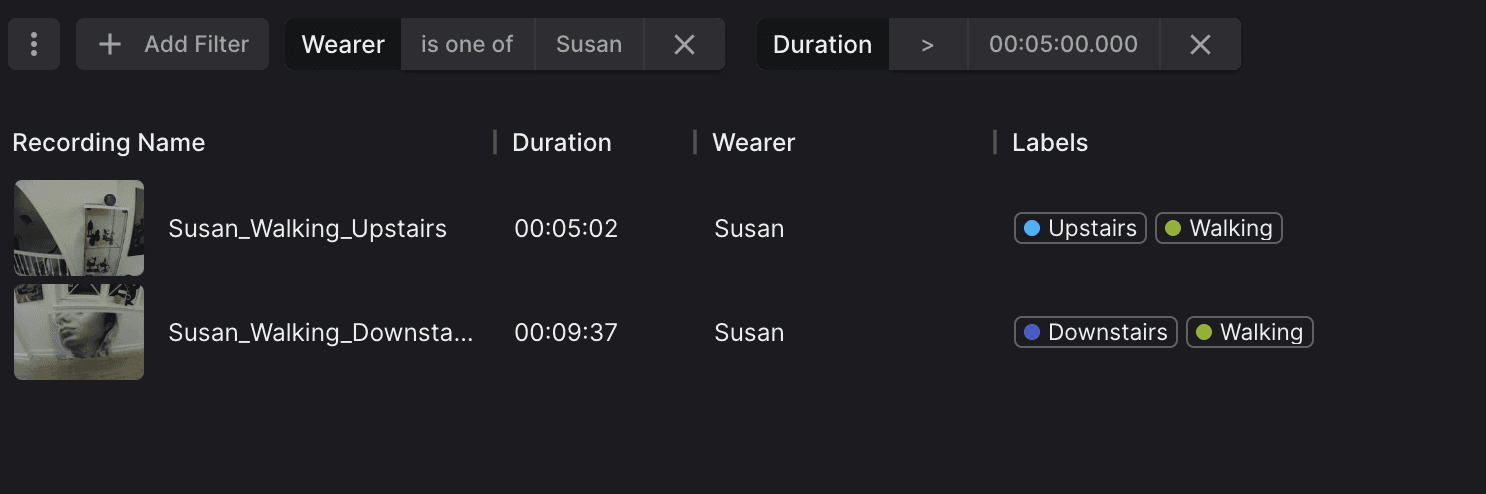

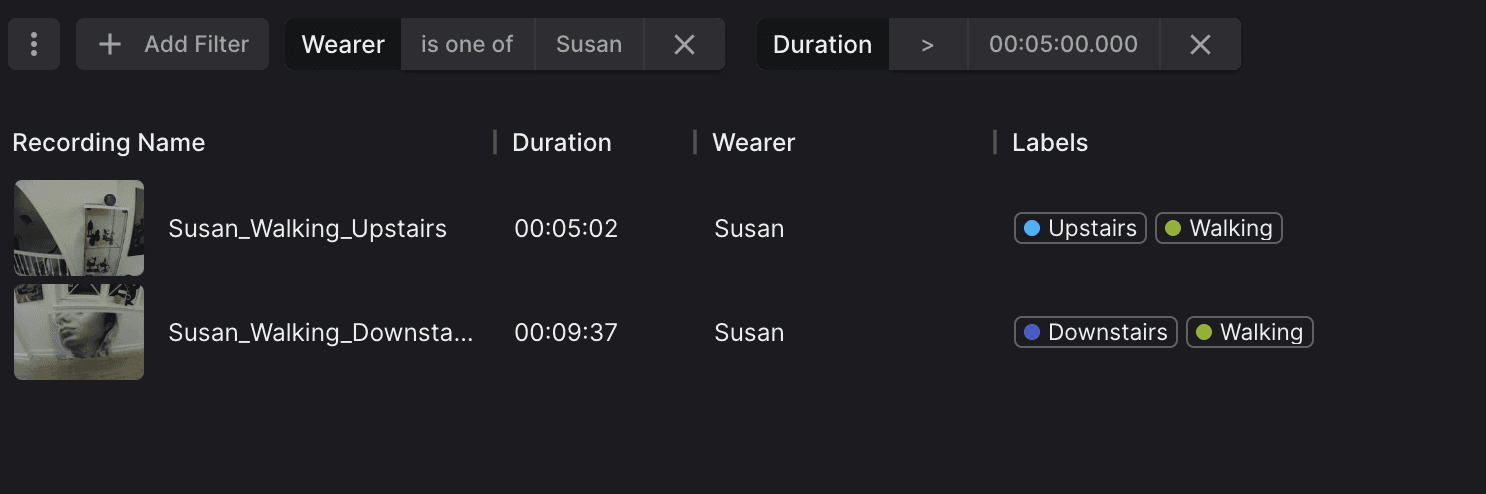

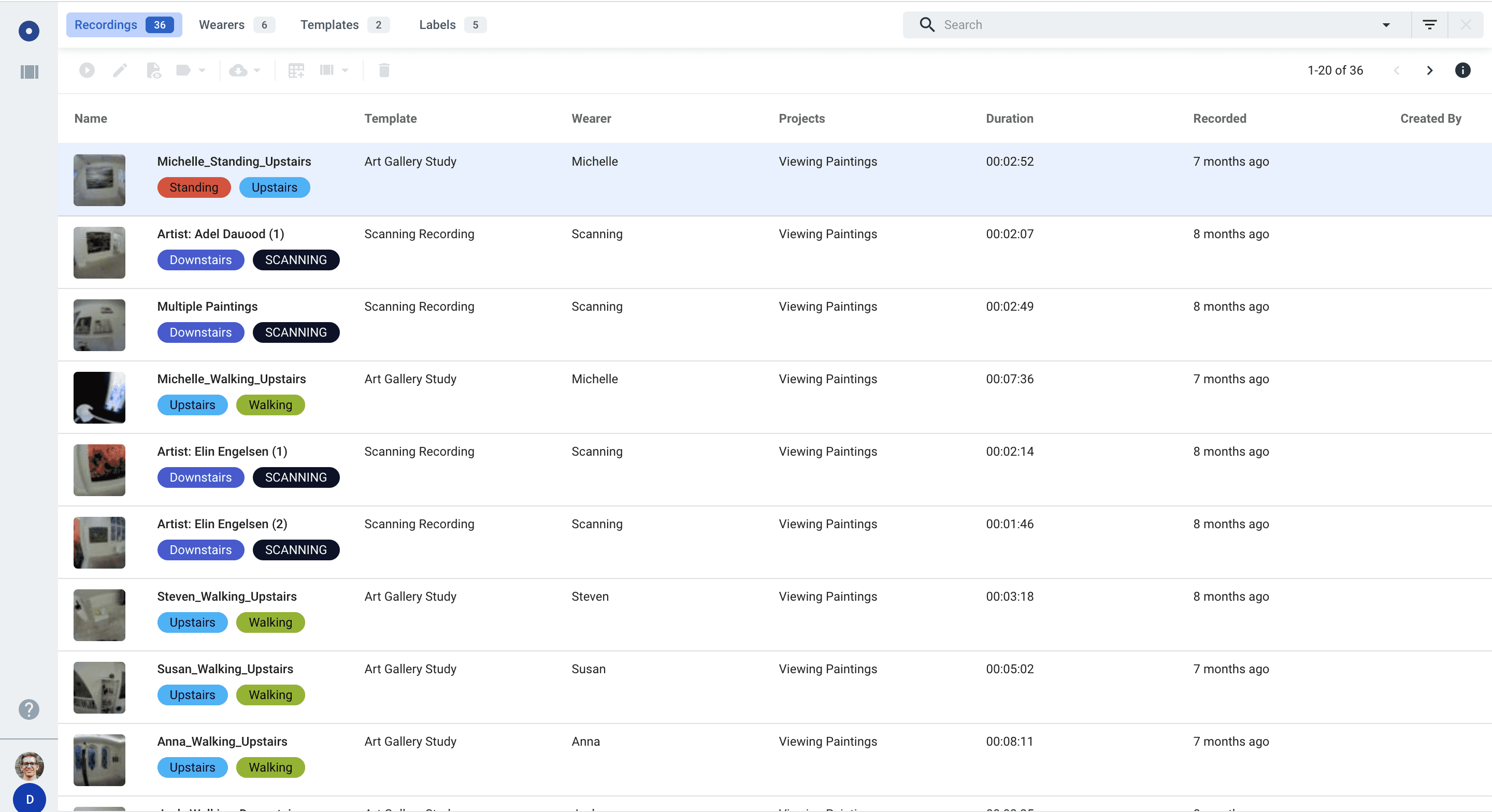

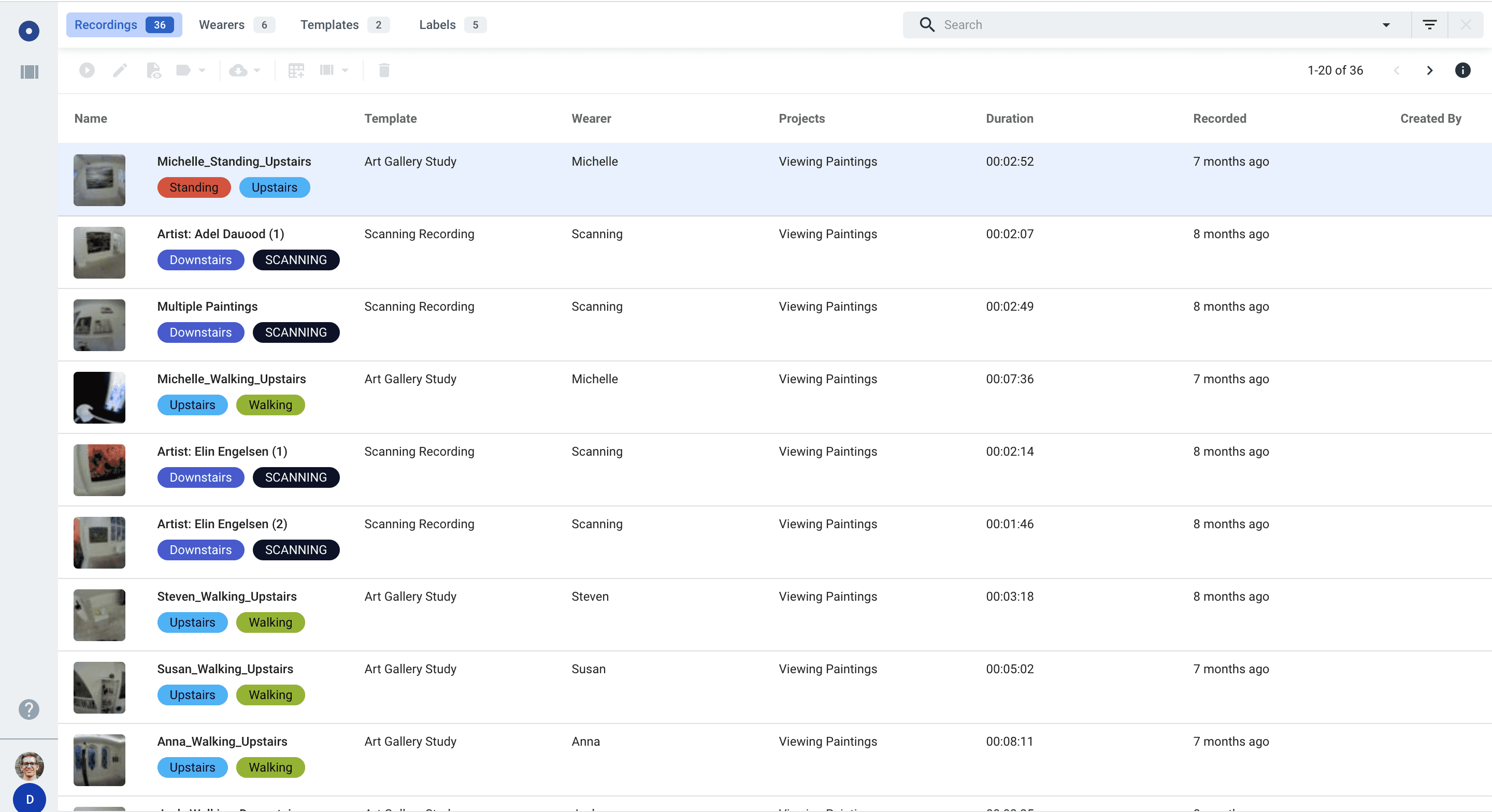

Customizing and Filtering Recording Tables

We added Filters to make it even easier to find recordings in your workspace. You can use filters together with search. Filter based on attributes like wearer, duration, or any other recording attribute. Furthermore, you can now customize the columns displayed in the recording table. This means you can add columns that are important to you and remove those that are less relevant.

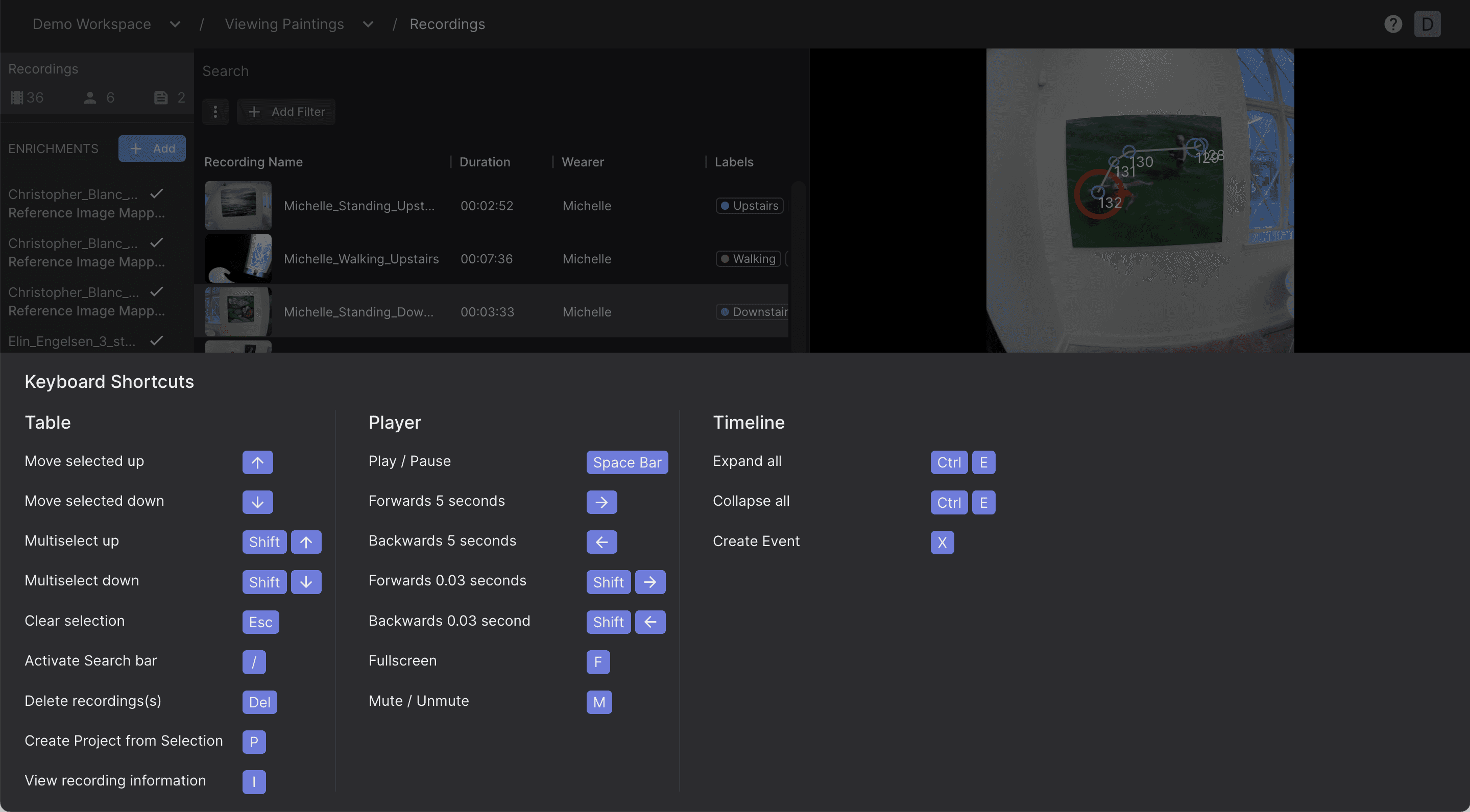

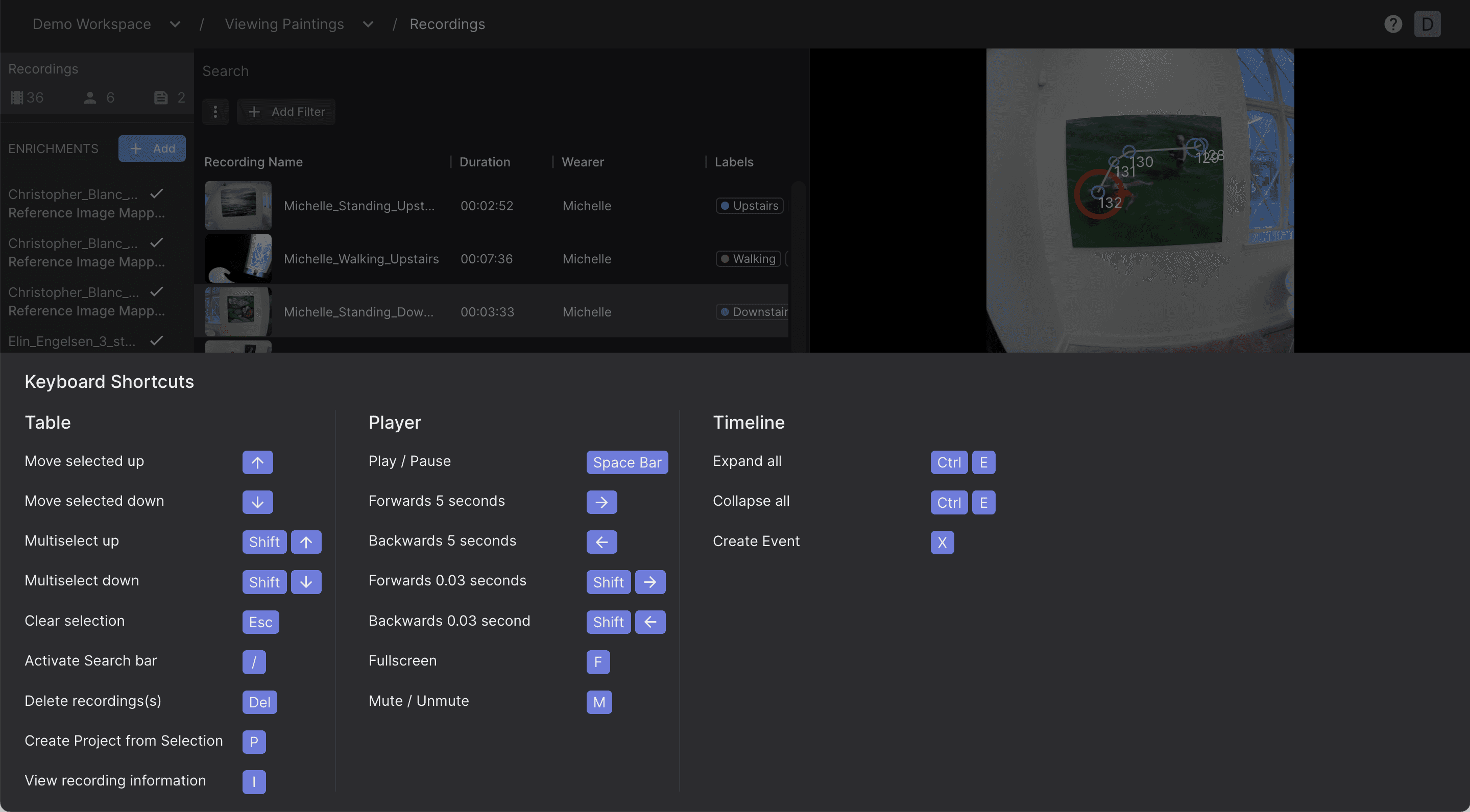

Hotkeys

We have introduced several new hotkeys to help power users move more quickly. To view the hotkey overview, access the help menu in the top-right corner of the screen.

These hotkeys will enable you to move more swiftly through recordings and speed up the event annotation process. If there are any additional hotkeys you would like to see implemented, let us know.

Resizable Panels

The panels in the new user interface are resizable. You can choose what you want to see most of: the recording table, the player, or the timeline!

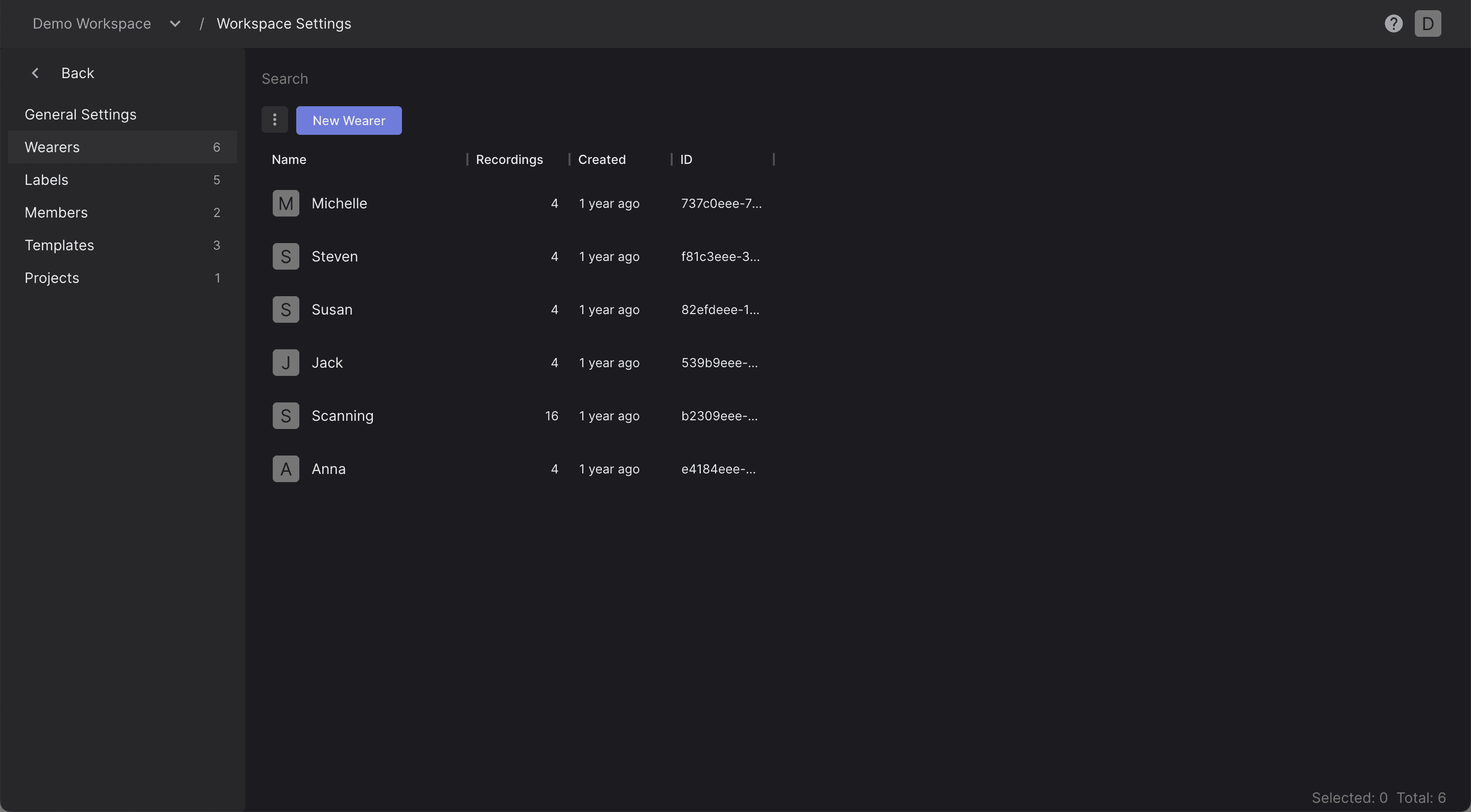

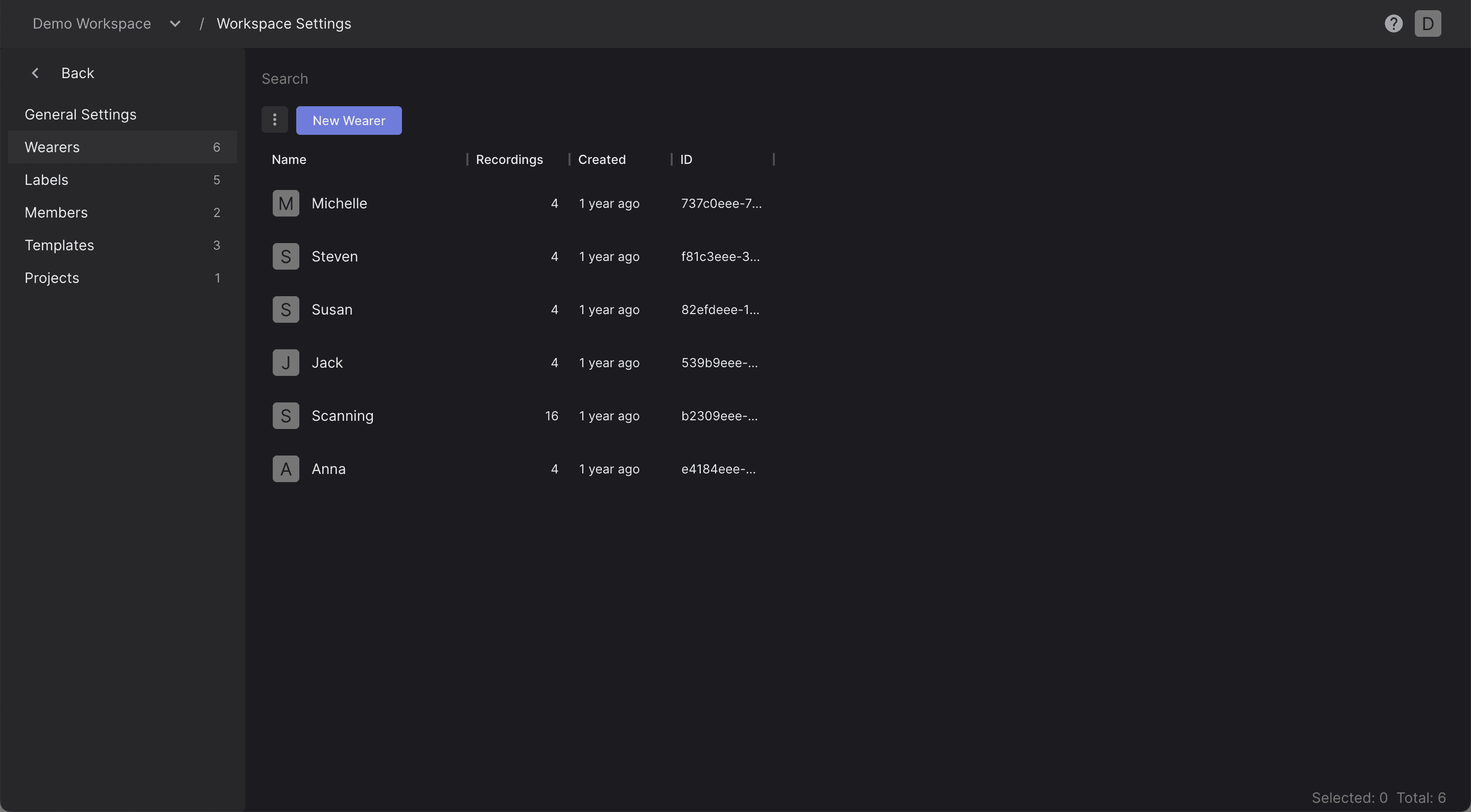

Templates and Wearers in Workspace Settings

All functionality to create, edit and delete Templates and Wearers has been moved to the workspace settings.

July 8, 2022

Big updates for Pupil Cloud! A Demo Workspace with sample recordings, projects, and enrichments for everyone to explore. Fixation scanpaths can now be visualized in all video playback in Cloud.

Demo Workspace

Every Pupil Cloud user now has access to our new Demo Workspace. It contains recordings and an example project with enrichments. We encourage everyone to explore it to help understand cloud features, best practices, and to get hands-on with a real-world dataset recorded with Pupil Invisible. We will continue to add more projects over time. Have a use-case you'd like to see as a demo? Get in touch!

Learn more about the demo workspace here.

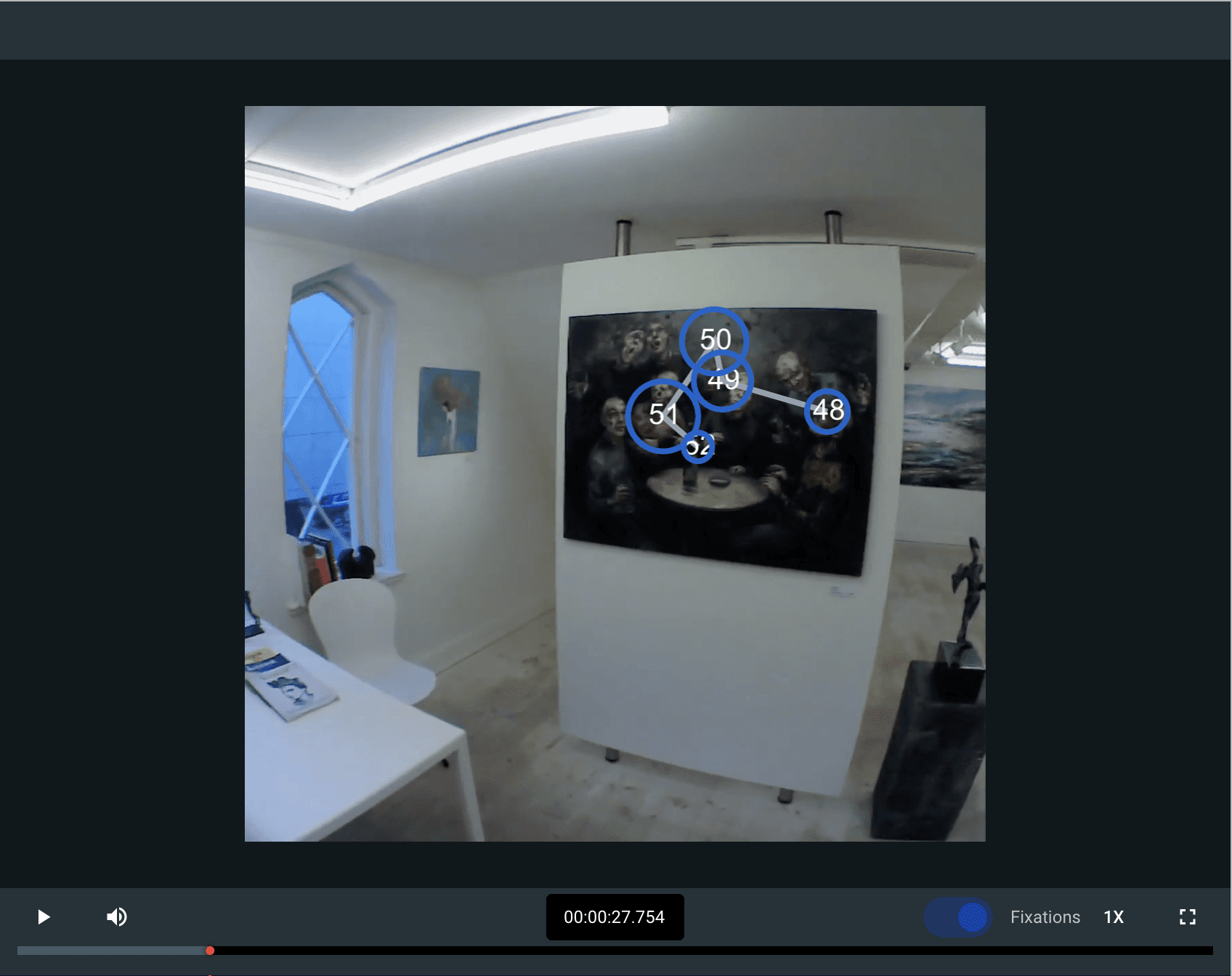

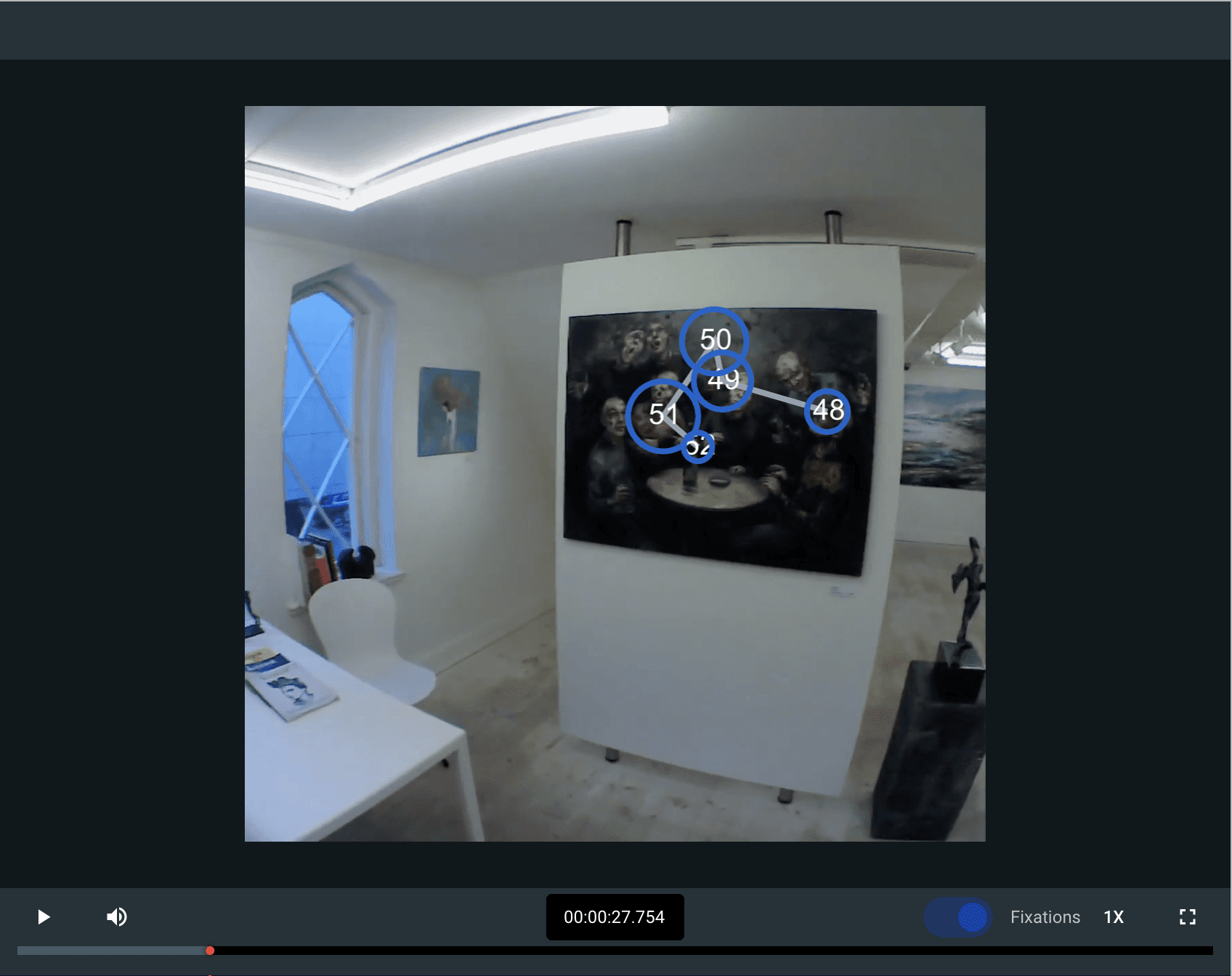

Fixation Scanpath Visualization

We have added a new visualization for fixation scanpaths to all video playback in Pupil Cloud. It shows the sequence of fixations for the last two seconds in a recording. The visualization compensates for head movements to ensure fixations remain in the right location even when the viewpoint changes. (We've got a white paper on the new fixation detection algorithm coming soon - stay tuned!)
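
The two-second window can be pictured as a simple filter over fixations relative to the playhead. The sketch below is an illustration under assumptions, not the implementation used in Pupil Cloud: the `Fixation` tuple, the `scanpath_window` helper, and the rule that a fixation is shown if any part of it overlaps the window are all hypothetical, and the head-movement compensation is not modelled.

```python
from typing import NamedTuple


class Fixation(NamedTuple):
    fixation_id: int
    start_s: float  # fixation start, in seconds since recording start
    end_s: float    # fixation end, in seconds since recording start


def scanpath_window(fixations, playhead_s, window_s=2.0):
    """Return the fixations overlapping the last `window_s` seconds, in order."""
    window_start = playhead_s - window_s
    visible = [
        f for f in fixations
        if f.end_s >= window_start and f.start_s <= playhead_s
    ]
    return sorted(visible, key=lambda f: f.start_s)


fixations = [
    Fixation(1, 0.0, 0.4),
    Fixation(2, 1.0, 1.3),
    Fixation(3, 2.5, 2.9),
    Fixation(4, 3.2, 3.6),
]
visible_ids = [f.fixation_id for f in scanpath_window(fixations, playhead_s=3.0)]
print(visible_ids)
```

With the playhead at 3.0 seconds, the window covers 1.0 to 3.0 seconds, so only the second and third fixations are part of the scanpath.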

May 11, 2022

Gaze Super Speed!

We have drastically increased the framerate of the real-time gaze signal to 120+ Hz. This was made possible by the hard work of our R&D and engineering teams in optimizing the neural network that runs on the Companion Device. Accuracy is maintained, with drastic improvements to inference speed!

The high-speed gaze data is available both in the recording data and via the real-time API. As usual, the full 200 Hz signal is available after uploading to Pupil Cloud.
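
To check which rate a given gaze stream actually delivers, you can estimate it from the sample timestamps. The sketch below is a minimal example under assumptions: `estimate_sampling_rate_hz` is a hypothetical helper, timestamps are assumed to be in seconds, and the data is synthetic rather than taken from a real recording.

```python
def estimate_sampling_rate_hz(timestamps_s):
    """Estimate the mean sampling rate from sorted timestamps in seconds."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two timestamps")
    span_s = timestamps_s[-1] - timestamps_s[0]
    if span_s <= 0:
        raise ValueError("timestamps must be increasing")
    # N samples span N - 1 intervals.
    return (len(timestamps_s) - 1) / span_s


# Synthetic one-second streams standing in for real-time and Cloud gaze data.
realtime_ts = [i / 120 for i in range(121)]
cloud_ts = [i / 200 for i in range(201)]

realtime_rate = estimate_sampling_rate_hz(realtime_ts)
cloud_rate = estimate_sampling_rate_hz(cloud_ts)
print(round(realtime_rate), round(cloud_rate))
```

Real streams will show some jitter and occasional dropped samples, so expect the estimate to fluctuate around the nominal rate.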

April 29, 2022

Pupil Invisible Monitor

Monitor your data collection straight from the web browser - on any device!

Just type pi.local:8080 into your address bar and the Pupil Invisible Monitor app will open, which allows you to view video and gaze data from all the Pupil Invisible Glasses in your network!

It also allows you to control them remotely, see storage and battery levels, and save events with the press of a button.

A more detailed introduction can be found here.

March 24, 2022

We are excited to announce our latest update for Pupil Cloud including a new blink detector for Pupil Invisible, visualizations for the Reference Image Mapper enrichment and quicker access to data downloads in convenient formats!

Blink Detector

We built a brand new blink detection algorithm and are making it available in Pupil Cloud. Blinks will be calculated for recordings automatically on upload to Pupil Cloud and be available in exports. The algorithm analyzes motion patterns in the eye videos to robustly detect blink events including blink duration. We are planning on open sourcing the blink detection algorithm in the near future.

Learn more about the algorithm here.

Check out the export format of blink data here.

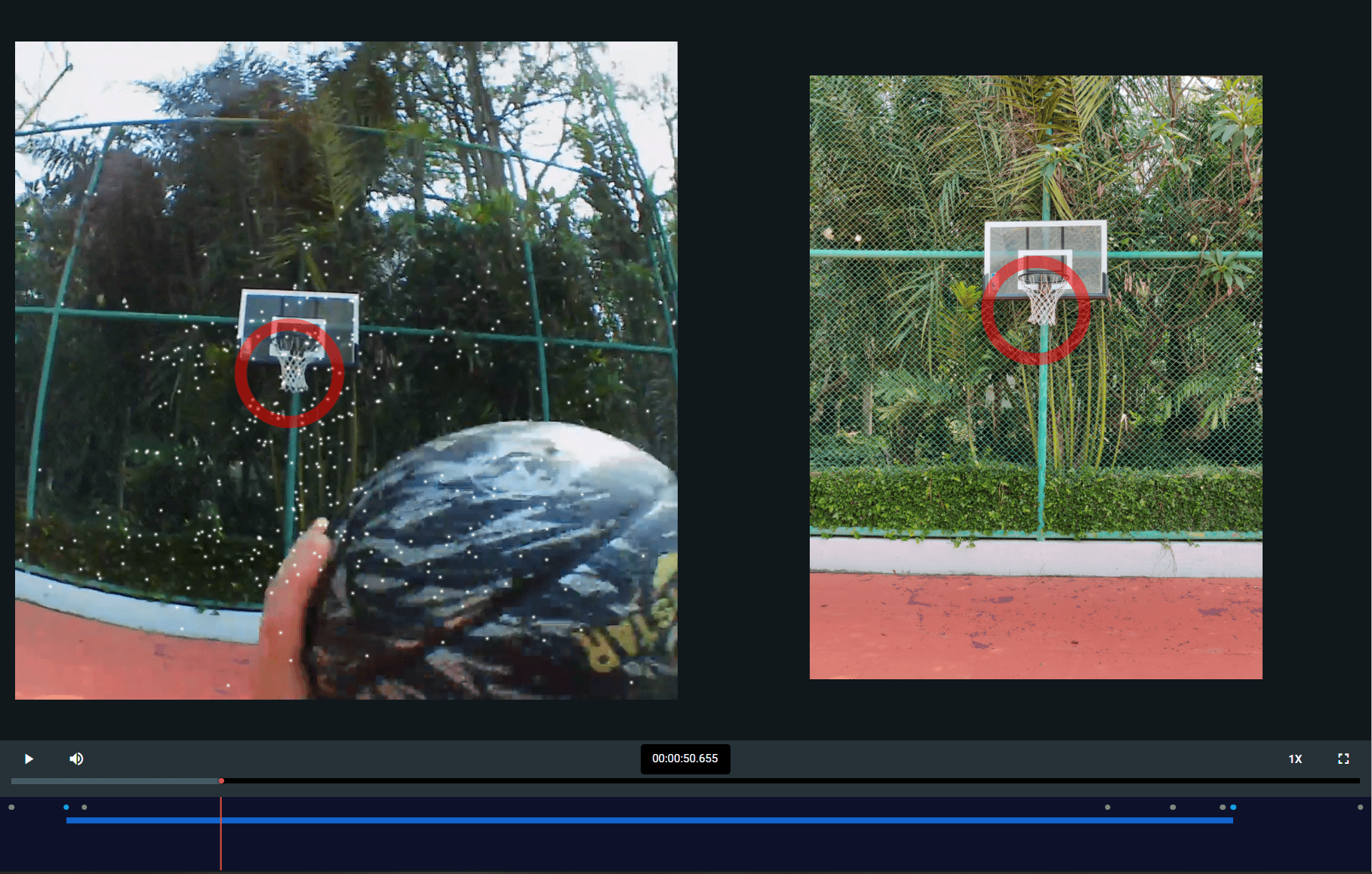

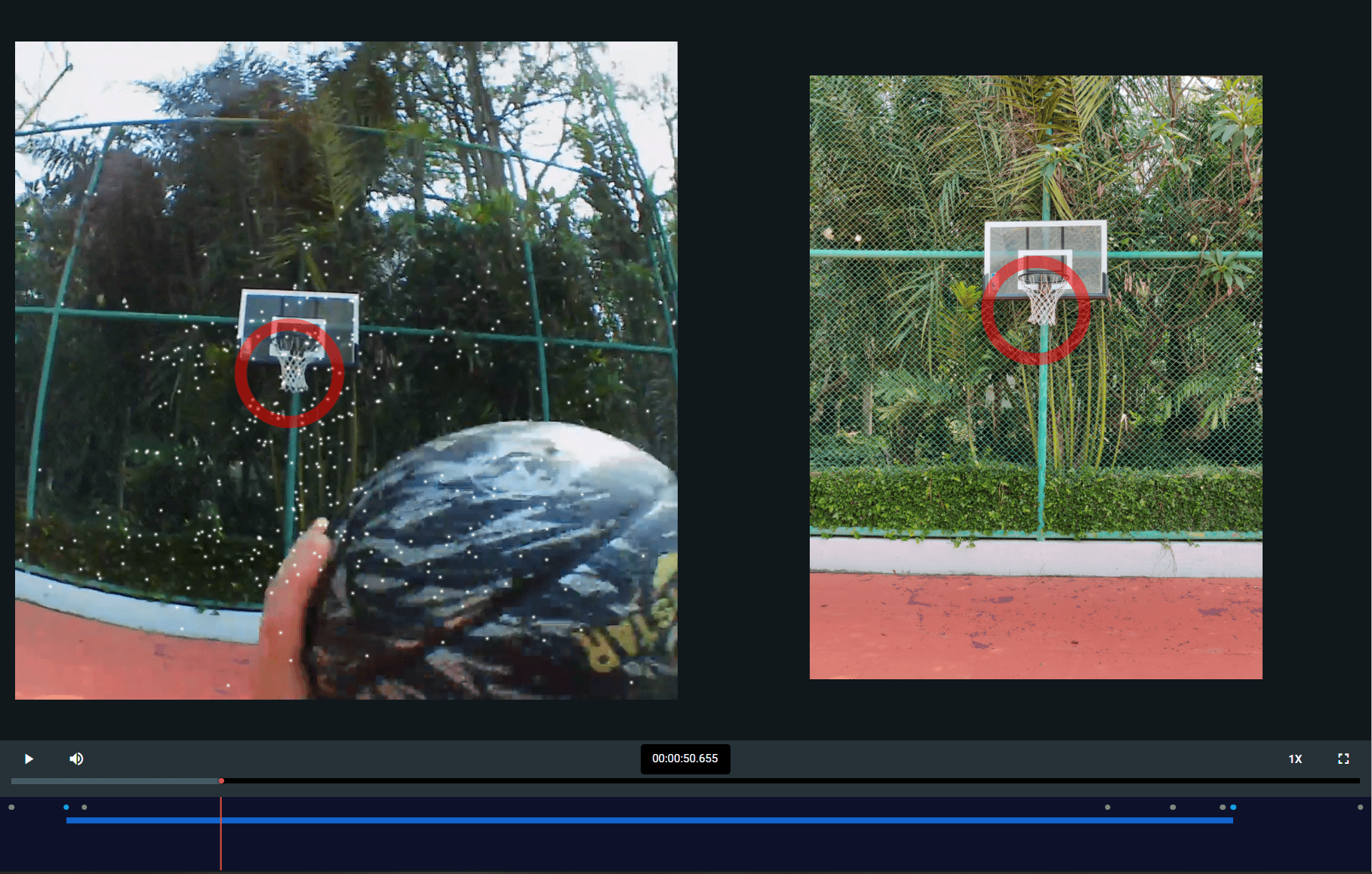

Reference Image Mapper Visualizations

The Reference Image Mapper enables you to automatically map gaze from the scene video onto a reference image. We want to give users a glimpse into how the algorithm works and a way to inspect the results.

To facilitate that, we added two new visualizations for the Reference Image Mapper to the project editor.

If you select a Reference Image Mapper enrichment in the project editor sidebar you can enable a side-by-side view of the reference image and the scene video. You can play back gaze on them simultaneously and verify the correctness of the mapping.

Internally, the Reference Image Mapper is generating a 3D point cloud representation of the recorded environment. You can now enable a visualization of this point cloud projected onto the scene video. This allows you to verify that the 3D scene camera motion has been estimated correctly.

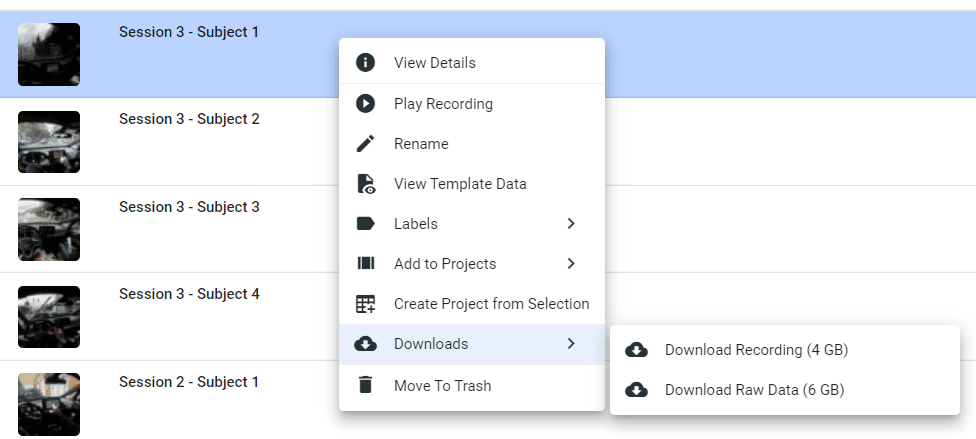

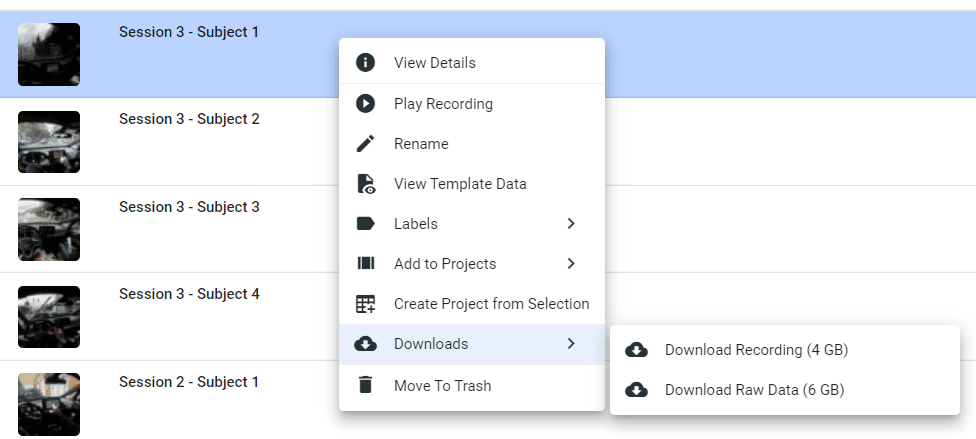

CSV Data Downloads In Drive

You can already use the Raw Data Exporter enrichment to download recordings in convenient formats like CSV and MP4 files. Now you can download recordings in convenient formats directly from Drive! We did this to help speed up the exploration of data for those who don’t need to create a project.

In the Drive view, clicking the Download button now shows two options. Download binary recording data downloads the raw data as recorded on the Companion Device (plus 200 Hz gaze data). The new option, Download Recording, provides CSV data files and videos in convenient formats (including 200 Hz gaze, fixation, and blink data).

March 21, 2022

Real-Time Network API and Python library

Check out Pupil Invisible’s new real-time network API! This makes it easier than ever to get real-time eye tracking data for your research and applications.

Use our new Python library to instantly receive video and gaze data on your computer and remotely control all your Pupil Invisible devices.

Implement gaze-interaction methodologies or track the progress of your data collection by starting recordings and saving events programmatically in no time!

To learn more, please check out our Real-Time API Guides.
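
As a rough sketch of what starting a recording and saving an event programmatically can look like: the helper below wraps three device calls, and `run_with_real_device` shows how it might be wired to the simple interface of the `pupil-labs-realtime-api` package. The call names (`discover_one_device`, `receive_gaze_datum`, `recording_start`, `send_event`, `recording_stop_and_save`) reflect that package as best understood here and should be checked against the Real-Time API Guides. `record_with_event`, `FakeDevice`, and the event name are hypothetical and exist only for illustration.

```python
def record_with_event(device, event_name):
    """Start a recording, save one event, then stop and save the recording."""
    recording_id = device.recording_start()
    device.send_event(event_name)
    device.recording_stop_and_save()
    return recording_id


def run_with_real_device():
    """Needs `pip install pupil-labs-realtime-api` and a device on the network."""
    # Imported here so the rest of the sketch runs without the package.
    from pupil_labs.realtime_api.simple import discover_one_device

    device = discover_one_device(max_search_duration_seconds=10)
    if device is None:
        raise SystemExit("No device found on the local network.")
    try:
        gaze = device.receive_gaze_datum()
        print(f"Gaze at ({gaze.x:.1f}, {gaze.y:.1f}) in scene camera pixels")
        record_with_event(device, "trial_start")
    finally:
        device.close()


class FakeDevice:
    """Hypothetical stand-in that lets the helper run without hardware."""

    def __init__(self):
        self.calls = []

    def recording_start(self):
        self.calls.append("recording_start")
        return "fake-recording-id"

    def send_event(self, name):
        self.calls.append(f"send_event:{name}")

    def recording_stop_and_save(self):
        self.calls.append("recording_stop_and_save")


fake = FakeDevice()
fake_recording_id = record_with_event(fake, "trial_start")
print(fake.calls)
```

Call `run_with_real_device()` once a Companion Device is reachable on the same network. The fake device only demonstrates the order of calls.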

A new and improved version of Pupil Invisible Monitor based on this API is also coming very soon!

Pupil Invisible & Pupil Cloud Docs

Big update to the Pupil Invisible & Pupil Cloud docs. We improved the structure and added new tutorials and guides. Take a look!

If you are new to Pupil Invisible & Pupil Cloud, check out our new getting started guides.

Looking to dive deep? Check out the explainer sections on the available data streams or enrichments.

More guides and other content additions are already in the works!

December 10, 2021

We are excited to announce our latest release for Pupil Cloud including a novel fixation detector, advanced privacy features in workspaces, and more! All recordings uploaded after this release will automatically have fixation data.

Fixation Detection

We have developed a novel fixation detector for Pupil Invisible! It was designed to cope with the challenges of head-mounted eye tracking and is one of the most robust algorithms out there!

Traditional fixation detection algorithms are designed for stationary settings which assume minimal head movement. Our algorithm actively compensates for head movements and can detect fixations more reliably in dynamic scenarios. This includes robustness to vestibulo–ocular reflex (VOR) movements.

Fixations will be calculated automatically on upload to Pupil Cloud and be available in all exports. We are planning on open sourcing our new fixation detection algorithm in the near future along with a white paper and integration into Pupil Player for offline support.

See the docs for details on the fixation data exported for enrichments.

Advanced Workspace Privacy Settings

We are introducing additional privacy settings for workspaces to cover a few specialized use cases that were requested by the community.

You can now disable scene video upload for the entire workspace. This allows users to make use of Pupil Cloud features like the calculation of 200 Hz gaze or fixations, while complying with strict privacy policies that would not allow scene video uploads to our servers.

In a future release, we will introduce the ability to automatically blur faces when a recording is uploaded to a workspace, so that the potentially sensitive original version is never stored in Pupil Cloud.

“Created by” Column in Recordings List

You can now see who uploaded each recording in the Drive view “Created by” column. We hope this makes collaboration easier within your workspace.

Optimized Project Editor Layout

We made more space for the video player at the center and added a toolbar above it. The toolbar contains contextual, enrichment-related functions. Currently, these are only available for the Marker Mapper enrichment, with more to come in the near future!

November 29, 2021

We are pleased to announce the release of Pupil Core software v3.5!

Download the latest bundle (scroll down to the end of the release notes and see Assets).

Please feel free to get in touch with feedback and questions via the #pupil channel on Discord! 😄

Overview

In Pupil v3.5, we cleaned up log messages, improved software stability, fine-tuned 3d pupil confidence, and added caching of fixations between Pupil Player sessions.

Pupil v3.5 is the first release that supports the new macOS Monterey (with a small caveat). Read more below.

New

Recording-specific annotation hotkeys

Add option to filter out low-confidence values in raw data export

Add option to disable world video recording

macOS Monterey support

Improved

Reduced number of user-facing log messages

Export-in-progress file-name suffixes

Windows: Handle exceptions during driver installation gracefully

Windows: Option to skip automatic driver installation

Windows: Create start menu entries and desktop shortcuts for all users on install

Add Euler angles to headpose tracker result

Normalize IMU pitch/roll values to [-180, 180] degrees

Confidence adjustment for pye3d results

Cache post-hoc fixation detector results

Documentation

New

Recording-specific annotation hotkeys - #2170

Until now, the keyboard-shortcut setup for annotations was stored in Pupil Player's session settings. These were reset if you updated Pupil Core software to a new version.

Starting with this version, annotation keyboard shortcuts will be stored persistently within the recording.

The definitions are stored in <recording dir>/offline_data/annotation_definitions.json with the format:

{
    "version": 1,
    "definitions": {
        "<label>": "<hotkey>"
    }
}

This file can be shared between recordings.

When Pupil Player is opened, it will attempt to load the annotation definitions from the recording-specific file. If the file is not found or is invalid, Pupil Player will fall back to the session settings, which contain the last known annotation definitions.
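The load-then-fall-back behavior described above can be sketched roughly as follows. This is a minimal illustration, not Pupil Player's actual code: the function name and the `session_fallback` argument are hypothetical, and only the file path and JSON layout are taken from these notes.

```python
import json
from pathlib import Path


def load_annotation_definitions(recording_dir, session_fallback):
    """Return {label: hotkey}, preferring the recording-specific file.

    `session_fallback` stands in for the definitions kept in the session
    settings; both the function name and its arguments are hypothetical.
    """
    path = Path(recording_dir) / "offline_data" / "annotation_definitions.json"
    try:
        data = json.loads(path.read_text(encoding="utf-8"))
        definitions = data["definitions"]
        if not isinstance(definitions, dict):
            raise ValueError("definitions must map labels to hotkeys")
        return dict(definitions)
    except (OSError, ValueError, KeyError, TypeError):
        # Missing or invalid file: fall back to the last known definitions.
        return dict(session_fallback)
```

Because the file lives inside the recording directory, copying it into another recording's offline_data folder is enough to share the same hotkeys.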

Add option to filter out low-confidence values in raw data export - #2210

Pupil Core software assigns "confidence" values to its pupil detections and gaze estimations. They indicate the quality of the measurement. For visualization and some analysis Plugins, Pupil Player hides gaze data below the "Minimum data confidence" threshold (adjustable in the general settings menu).

Prior to this release, the raw data exporter plugin exported all data, regardless of its confidence. As a result, new users often wondered why their exported data looked noisier than what they had seen in Pupil Player.

Starting with this release, users will have the option to filter out low-confidence data (i.e. below the "Minimum data confidence" threshold) in the export. The option can be enabled in the raw data exporter's menu.
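If you work with an unfiltered export, the same kind of filtering can be applied after the fact. The sketch below is only an illustration: the CSV excerpt is made up, and the default threshold of 0.6 is an assumption rather than a value stated in these notes. Only the idea of dropping rows below a minimum confidence comes from the text above.

```python
import csv
import io


def filter_by_confidence(rows, min_confidence=0.6):
    """Yield only rows whose confidence reaches the threshold.

    The default of 0.6 is an assumed value; use your own threshold.
    """
    for row in rows:
        if float(row["confidence"]) >= min_confidence:
            yield row


# Hypothetical excerpt of an exported CSV; real exports contain more columns.
raw_export = io.StringIO(
    "gaze_timestamp,confidence,norm_pos_x,norm_pos_y\n"
    "10.00,0.98,0.51,0.49\n"
    "10.01,0.12,0.93,0.07\n"
    "10.02,0.75,0.52,0.50\n"
)
kept = list(filter_by_confidence(csv.DictReader(raw_export)))
print([row["gaze_timestamp"] for row in kept])  # ['10.00', '10.02']
```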

Add option to disable world video recording - #2169

If you are not interested in recording the scene video, you can now disable the scene video recording in the Recorder menu of Pupil Capture's world window.

macOS Monterey support

Due to new technical limitations, Pupil Capture and Pupil Service need to be started with administrator privileges to get access to the video camera feeds. To do that, copy the applications into your /Applications folder and run the corresponding command from the terminal:

Pupil Capture:

sudo /Applications/Pupil\ Capture.app/Contents/MacOS/pupil_capture

Pupil Service:

sudo /Applications/Pupil\ Service.app/Contents/MacOS/pupil_service

Note: The terminal will prompt you for your administrator password. It will not preview any typed keys. Simply hit enter once the password has been typed.

Note: When recording with administrator privileges, the resulting folder inherits admin file permissions. Pupil Player will detect these and ask you for the administrator password to reset the file permissions. This will only be necessary once per recording.

Improved

Export-in-progress file-name suffixes - #2167, #2186

Exporting video can take a long time. Pupil Player displays the progress as a growing circle around the menu icon while writing the result incrementally to disk. Specifically, it writes the result directly to the final export location. While the export is ongoing, the video file is invalid but still available to the user. This can lead to users opening the video before it has finished exporting and then encountering playback issues.

Starting with this release, Pupil Core software changes the video exports to include a .writing file name suffix during the export. This prevents other applications from recognizing the partially exported video file as complete. Once the export is done, the suffix is removed and other applications are able to recognize the file type as video.
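The general pattern of writing under a temporary suffix and renaming on completion can be sketched like this. It is a generic illustration of the technique, not the exporter's actual implementation; the function name and the `chunks` argument are hypothetical, and only the .writing suffix comes from the text above.

```python
import os
from pathlib import Path


def export_with_suffix(final_path, chunks, suffix=".writing"):
    """Write chunks to `<final_path><suffix>`, then rename once complete."""
    final_path = Path(final_path)
    temp_path = final_path.with_name(final_path.name + suffix)
    with open(temp_path, "wb") as handle:
        for chunk in chunks:
            handle.write(chunk)
    # Rename only after all data has been written and the file is closed.
    os.replace(temp_path, final_path)
    return final_path
```

While the export runs, only a file such as world.mp4.writing exists, so video players do not pick it up; the final name appears once the data is complete.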

Reduced number of user-facing log messages - #2190

Over the years, Pupil Capture's functionality has grown and with it the number of user-facing log messages. They can be overwhelming to the point where important log messages are overlooked or ignored. For this release, we have reviewed the log messages that are being displayed in the UI during typical actions, e.g. calibration and recordings, and have reduced them significantly.

Windows: Handle exceptions during driver installation gracefully - #2173

Previously, errors during the driver installation could cause the software to crash. Now, errors will be logged to the log file and the user will be asked to install the drivers manually.

Windows: Option to skip automatic driver installation - #2207

Using the -skip-driv or --skip-driver-installation flags, one can now launch Pupil Capture and Pupil Service without running the automatic driver installation on Windows. This can be helpful to speed up the startup time or to avoid unwanted driver installations.

Windows: Create start menu entries and desktop shortcuts for all users on install - #2166

Pupil Core software is installed to C:\Program Files (x86)\Pupil-Labs by default. This directory should be accessible by all users, but until now the start menu entry and desktop shortcut were only installed for the current user. Starting with this release, the installer will create start menu entries and desktop shortcuts for all users on install.

Add Euler angles to headpose tracker result - #2175

In addition to camera poses as Rodrigues' rotation vectors, the headpose tracker now also exports the camera orientation in Euler angles.

We also fixed a typo in the exported file name.

- head_pose_tacker_model.csv

+ head_pose_tracker_model.csv

Normalize IMU pitch/roll values to [-180, 180] - #2171

Orientation values exported by the IMU Timeline plugin will now be normalized to a range of [-180, 180] degrees.
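For readers who want to apply the same kind of wrapping to their own angle data, a minimal sketch is shown below. It is not the plugin's code. Note that this particular formula maps exactly 180 degrees to -180, which is an assumption about boundary handling that these notes do not specify.

```python
def normalize_angle(degrees):
    """Wrap an angle in degrees into the half-open interval [-180, 180)."""
    return (degrees + 180.0) % 360.0 - 180.0


print(normalize_angle(190.0))   # -170.0
print(normalize_angle(-190.0))  # 170.0
print(normalize_angle(45.0))    # 45.0
```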

Confidence adjustment for pye3d results - #2195

In some cases, pye3d was overestimating the confidence of its pupil detections. These were visible as noisy gaze estimations in the Pupil Core applications. This release includes an updated version of pye3d with adjusted confidence estimations. As a result, the gaze signal should look more stable during lower confidence situations, e.g. while squinting or looking down. For more information, see the Developer notes below.

Cache post-hoc fixation detector results - #2194

Post-hoc fixation detector results no longer need to be recalculated if you close and reopen a recording. Pupil Player stores the results together with the used configuration (version of the gaze data and fixation detection parameters) within the recording directory. The cached data will be restored if the Player configuration matches the configuration stored on disk. If it does not match, Player will recalculate the fixations to avoid inconsistencies.
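The caching logic described above follows a common pattern: store the results next to the configuration that produced them, and reuse them only if the configuration still matches. The sketch below illustrates that pattern under stated assumptions. The JSON cache file, the function name, and the shape of `config` are all hypothetical and do not reflect Pupil Player's actual on-disk format.

```python
import json
from pathlib import Path


def load_or_compute_fixations(cache_file, config, compute):
    """Return cached fixations if `config` matches, else recompute and store.

    `config` must be JSON-serializable, e.g. the gaze data version plus
    the detection parameters. `compute` is a zero-argument callable.
    """
    cache_file = Path(cache_file)
    if cache_file.exists():
        try:
            cached = json.loads(cache_file.read_text(encoding="utf-8"))
            if cached["config"] == config:
                return cached["fixations"]
        except (ValueError, KeyError, TypeError):
            pass  # unreadable cache: treat it like a mismatch
    fixations = compute()
    payload = {"config": config, "fixations": fixations}
    cache_file.write_text(json.dumps(payload), encoding="utf-8")
    return fixations
```

Comparing the full configuration, rather than only checking that a cache file exists, is what prevents stale fixations from being shown after the gaze data or the detection parameters change.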

Documentation

We have improved our documentation in several places:

Pupillometry best practices - pupil-docs#453

Blink detection - pupil-docs#463

Pupil Core API - pupil-docs#455

Fixed

Fixed typo in exported headpose tracker files - #2185

Fix confidence graphs in Pupil Player for pre-2.0 recordings - #2191

Use correct socket API to retrieve local IP address for Service - #2201

Fix loading 200 Hz gaze data in Pupil Invisible recordings - #2204

Fix loading short audio streams - #2206

Known issues

Windows: Connecting remotely to Pupil Remote on Pupil Service does not work in some cases

Pupil Groups: Users have reported issues with Pupil Groups connectivity when running Pupil Capture on different devices.

We are investigating these issues and will try to resolve them with an update as soon as possible.

Developer notes

Dependencies updates

Python dependencies can be updated using pip and the requirements.txt file:

python -m pip install --upgrade pip wheel

pip install -r requirements.txt

pye3d v0.2.0 and v0.3.0

Version 0.2.0 of pye3d has lowered 3d-search-result confidence by 40%. When the confidence of the input 2d pupil datum is lower than a specific threshold (0.7), pye3d attempts to reconstruct the 2d pupil ellipse based on its existing 3d eye model. We noticed that the confidence for these results was systematically overestimated given the actual detection result quality and have therefore adjusted the overall confidence assignment for them. See pye3d-detector#36 for reference.

Version 0.3.0 uses a new serialization format for the refractionizer parameters but is otherwise functionally equivalent to version 0.2.0.

pyglui v1.31.0

This new version of pyglui introduces support for multi-line thumb and icon labels.

Example:

from pyglui import ui

context = {"state": False}
ui.Icon(
    "state",
    context,
    label="Multi\nLine",
    label_offset_size=-10,  # smaller font
    label_offset_x=0,  # no horizontal adjustments
    label_offset_y=-10,  # move label upwards
    label_line_height=0.8,  # reduce line height
)

zeromq-pyre >= v0.3.4

Starting with version v0.3.4, zeromq-pyre is able to handle cases gracefully where it is not able to access any network interfaces. See #2187 for reference.

Plugin API

Easy plugin icon customization - #2196

Plugins can now overwrite the following class attributes to customize the menu icon:

icon_pos_delta: relative positioning

icon_size_delta: relative font resizing

icon_line_height (multi-line icons only): line distance

Requires pyglui v1.31.0 or higher

Real-time Network API

Add surf_to_dist_img_trans and dist_img_to_surf_trans to surface events - #2168

These two homographies are necessary to transform points from the surface coordinate system to the 2d image space of the scene camera, and vice versa. They have been available as part of the surface location exports in Pupil Player. With this release, they also become available to other plugins in Pupil Capture or via its Network API.
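As a rough illustration of what transforming a point with such a homography involves, the sketch below applies a 3x3 matrix, given as a list of lists, to a 2d point using homogeneous coordinates. The helper name and the example matrix are made up for illustration; real matrices come from the surface events.

```python
def apply_homography(homography, point):
    """Map a 2d point through a 3x3 homography given as a list of lists."""
    x, y = point
    # Homogeneous coordinates: multiply [x, y, 1] by each matrix row.
    u, v, w = (row[0] * x + row[1] * y + row[2] for row in homography)
    # Divide by the third component to return to 2d coordinates.
    return u / w, v / w


# Hypothetical matrix: scale by 100 and shift by (20, 10).
example_homography = [
    [100.0, 0.0, 20.0],
    [0.0, 100.0, 10.0],
    [0.0, 0.0, 1.0],
]
print(apply_homography(example_homography, (0.5, 0.5)))  # (70.0, 60.0)
```

The division by the third component matters for real homographies, whose last row is generally not [0, 0, 1].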

Example plugin that accesses the new homographies in real-time:

import numpy as np
from plugin import Plugin


class Example(Plugin):
    def recent_events(self, events):
        # access surfaces or fall back to an empty list if not available
        surfaces = events.get("surfaces", [])
        for surface in surfaces:
            # access raw homography data, a list of lists
            homography = surface["surf_to_dist_img_trans"]
            # convert to a matrix
            homography = np.array(homography)
            # use homography, e.g. printing it
            print(f"{surface['name']}: {homography}")

Downloads

To open the RAR-archive on Windows, you will need to use decompression software, such as WinRAR or 7-Zip (both are available for free).

Supported operating systems

macOS Mojave 10.14, macOS Catalina 10.15, macOS Big Sur 11

macOS Monterey 12 requires administrator privileges to access the video cameras

Ubuntu 16.04 or newer

Windows 10

Checksums

$ shasum -a 256 pupil_v3.5*

41428173c33d07171bb7bf8f0c83b7debfabb66a1dfc7530d1ae302be70ccc9c pupil_v3.5-1-g1cdbe38_windows_x64.msi.rar

608aceaf4bf450254812b3fcaf8ea553450fcf2d626ae9c2ed0404348918d6ad pupil_v3.5-7-g651931ba_macos_x64.dmg

786feb5e937a68a21821ab6b6d86be915bcbb7c5e24c11664dbf8a95e5fcb8cc pupil_v3.5-8-g0c019f6_linux_x64.zip

September 30, 2021

Big release! We added two powerful new features. Workspaces enable collaboration with your colleagues. Face Mapper automatically detects faces in recordings!

Workspaces

Workspaces enable you to collaborate with colleagues through role-based access, from data collection with the Pupil Invisible Companion App to data annotation and enrichment in Pupil Cloud. Workspaces act as isolated spaces that contain recordings, wearers, templates, labels, projects, and enrichments.

Note: Update the Pupil Invisible Companion app to the most recent version (v1.3.0) to make use of workspaces.

Face Mapper

Face Mapper is a new enrichment that automatically and robustly detects faces in the scene video and maps gaze data onto the detected faces. This enables you to easily determine when a subject has been looking at a face and to compute aggregate statistics (example: how much time was spent looking at faces?).

Share your thoughts

Have feedback, questions, or feature requests? Send us your thoughts!

July 22, 2021

Head Pitch & Roll Estimates are now available!

The Inertial Measurement Unit (IMU) sensor embedded in the Pupil Invisible Glasses frame provides measurements that can tell us how the wearer’s head is moving. This can be valuable if you want to measure, for example, when your subject is looking downwards vs. upwards.

In this update we have made the outputs of the IMU much easier to work with. Instead of only reporting the raw outputs of the IMU (rotational speed and translational acceleration), we now include estimates of the absolute pitch and roll of the head.

This is part of the Raw Data Exporter. You can find more details about how the estimates are calculated in the docs!

Information on when the Glasses are worn now included in Raw Data Export

The gaze.csv file included in the Raw Data Export now contains a new column called worn that indicates whether the Pupil Invisible Glasses have been worn by a subject at the respective time point (1.0 = worn, 0.0 = not worn).

This data has previously only been available as part of the binary recording data.
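As a small example of how the new column might be used, the sketch below computes the share of gaze samples recorded while the glasses were worn. The CSV excerpt is made up, and every column name other than worn is hypothetical; check your own export for the actual layout.

```python
import csv
import io


def worn_fraction(rows):
    """Return the share of gaze samples flagged as worn (worn == 1.0)."""
    values = [float(row["worn"]) for row in rows]
    if not values:
        return 0.0
    return sum(1 for value in values if value == 1.0) / len(values)


# Hypothetical excerpt; only the `worn` column name comes from the notes.
gaze_csv = io.StringIO(
    "timestamp,gaze_x,gaze_y,worn\n"
    "1,540.1,530.2,1.0\n"
    "2,541.0,529.8,1.0\n"
    "3,0.0,0.0,0.0\n"
    "4,539.5,531.1,1.0\n"
)
print(worn_fraction(csv.DictReader(gaze_csv)))  # 0.75
```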

Improved Export Format of Marker Mapper

We have updated the export format of the Marker Mapper to be easier to use:

We have added a new column detected markers to the aoi_positions.csv file that contains the IDs of all the markers detected in the respective frame.

We have split the corner coordinates in image column in the aoi_positions.csv file into a separate column per coordinate to make it easier to parse.

Do you have feedback you would like to share?

July 13, 2021

We are pleased to announce the release of Pupil Core software v3.4!

Download the latest bundle (scroll down to the end of the release notes and see Assets).

Please feel free to get in touch with feedback and questions via the #pupil channel on Discord! 😄

Overview

Pupil v3.4 introduces a new color scheme for pupil detection algorithms, improved gaze-estimation stability for frozen eye models, and a new IMU visualization and export plugin for Pupil Invisible recordings within Pupil Player.

New

Dynamic pye3d model confidence

Pupil detection color scheme

Added IMU Timeline plugin

Improved

Gaze-estimation stability with frozen eye models

Annotation log message

Prevent log flood in Surface Tracker related to finding homographies

Support upgrading legacy recordings

New

Dynamic pye3d model confidence

Pupil Core software version v3.4 ships the new pye3d version 0.1.1.

In previous versions of pye3d, model_confidence was fixed to a value of 1.0. Starting with version 0.1.0, pye3d assigns different model_confidence values based on the long-term model's parameters. Specifically, model_confidence is set to 1.0 by default and set to 0.1 if at least one model output exceeds its physiologically reasonable range. If the ranges are exceeded, it is likely that either the model is poorly fit or the 2d input ellipse was a false detection.

The assumed physiological bounds are:

phi: [-80, 80] degrees

theta: [-80, 80] degrees

pupil diameter: [1.0, 9.0] mm

eyeball center x: [-10, 10] mm

eyeball center y: [-10, 10] mm

eyeball center z: [20, 75] mm

Phi and theta ranges are relative to the eye camera's optical axis. The eyeball center ranges are defined relative to the origin of the eye camera's 3d coordinate system.

The model_confidence will be set to 0.0 if the gaze direction cannot be calculated.
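The rule described above can be sketched in a few lines of Python. This is not pye3d's actual code: the function name, the dictionary keys, and the choice to treat the bounds as inclusive are assumptions made for illustration. Only the numeric ranges and the resulting values 1.0, 0.1, and 0.0 are taken from these notes.

```python
# Bounds copied from the release notes: (lower, upper) per model output.
# The key names are hypothetical; angles are in degrees, lengths in mm.
PHYSIOLOGICAL_BOUNDS = {
    "phi": (-80.0, 80.0),
    "theta": (-80.0, 80.0),
    "pupil_diameter": (1.0, 9.0),
    "eyeball_center_x": (-10.0, 10.0),
    "eyeball_center_y": (-10.0, 10.0),
    "eyeball_center_z": (20.0, 75.0),
}


def model_confidence(outputs, gaze_direction_available=True):
    """Return 0.0, 0.1, or 1.0 following the rule described in the notes."""
    if not gaze_direction_available:
        return 0.0
    for name, (lower, upper) in PHYSIOLOGICAL_BOUNDS.items():
        # Assumption: the bounds are inclusive.
        if not lower <= outputs[name] <= upper:
            return 0.1
    return 1.0


plausible = {
    "phi": 10.0,
    "theta": -5.0,
    "pupil_diameter": 3.5,
    "eyeball_center_x": 1.0,
    "eyeball_center_y": -2.0,
    "eyeball_center_z": 40.0,
}
print(model_confidence(plausible))
print(model_confidence({**plausible, "pupil_diameter": 12.0}))
```

The first call stays within all ranges, while the second exceeds the pupil diameter range and is therefore downgraded.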

Pupil detection color scheme

The new pupil detection color scheme makes use of the dynamic model confidence values by visualizing within-bounds and out-of-bounds models in different colors.

Color legend:

#FAC800: Pupil ellipse (2d)

#F92500: Pupil ellipse (3d)

#0777FA: Long-term model outline (within-bounds)

#80E0FF: Long-term model outline (out-of-bounds)

Note: Even though the detected pupil ellipses fit the pupil well on the right-side picture, the model is outside of the physiological ranges defined above. This can lead to errors in gaze-direction and pupil-size estimates. You can improve such a model by looking in different directions.

While the 3d debug window is opened, pye3d also visualizes the short- and ultra-long-term model outlines:

#FFB876: Short-term model outline

#FF5C16: Ultra-long-term model outline

Added IMU Timeline plugin - #2151

This is a new plugin designed to visualize and export IMU data available in Pupil Invisible recordings.

In addition to the raw angular velocity and linear acceleration, the plugin also uses Madgwick's algorithm to estimate pitch and roll.
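To give a feel for what such an estimate involves, here is a minimal sketch of the gyroscope-plus-accelerometer variant of Madgwick's filter, followed by a conversion of the resulting quaternion to pitch and roll. It is not the plugin's implementation: the function names, the gain beta, the time step, and the axis convention (gravity measured along +z when level, roll about x, pitch about y) are all assumptions chosen for illustration.

```python
import math


def madgwick_imu_update(q, gyro, accel, dt, beta=0.1):
    """One filter step. q is (w, x, y, z); gyro in rad/s; accel in any unit."""
    q0, q1, q2, q3 = q
    gx, gy, gz = gyro

    # Quaternion rate of change predicted by the gyroscope alone.
    dq0 = 0.5 * (-q1 * gx - q2 * gy - q3 * gz)
    dq1 = 0.5 * (q0 * gx + q2 * gz - q3 * gy)
    dq2 = 0.5 * (q0 * gy - q1 * gz + q3 * gx)
    dq3 = 0.5 * (q0 * gz + q1 * gy - q2 * gx)

    ax, ay, az = accel
    a_norm = math.sqrt(ax * ax + ay * ay + az * az)
    if a_norm > 0.0:
        ax, ay, az = ax / a_norm, ay / a_norm, az / a_norm
        # Mismatch between the gravity direction implied by q and the measured one.
        f0 = 2.0 * (q1 * q3 - q0 * q2) - ax
        f1 = 2.0 * (q0 * q1 + q2 * q3) - ay
        f2 = 2.0 * (0.5 - q1 * q1 - q2 * q2) - az
        # Descent direction: Jacobian transposed times the mismatch.
        s0 = -2.0 * q2 * f0 + 2.0 * q1 * f1
        s1 = 2.0 * q3 * f0 + 2.0 * q0 * f1 - 4.0 * q1 * f2
        s2 = -2.0 * q0 * f0 + 2.0 * q3 * f1 - 4.0 * q2 * f2
        s3 = 2.0 * q1 * f0 + 2.0 * q2 * f1
        s_norm = math.sqrt(s0 * s0 + s1 * s1 + s2 * s2 + s3 * s3)
        if s_norm > 0.0:
            # Correct the gyroscope prediction along the normalized direction.
            dq0 -= beta * s0 / s_norm
            dq1 -= beta * s1 / s_norm
            dq2 -= beta * s2 / s_norm
            dq3 -= beta * s3 / s_norm

    # Integrate and renormalize to keep a unit quaternion.
    q0, q1, q2, q3 = q0 + dq0 * dt, q1 + dq1 * dt, q2 + dq2 * dt, q3 + dq3 * dt
    norm = math.sqrt(q0 * q0 + q1 * q1 + q2 * q2 + q3 * q3)
    return (q0 / norm, q1 / norm, q2 / norm, q3 / norm)


def pitch_and_roll_degrees(q):
    """Convert a unit quaternion to (pitch, roll) in degrees (ZYX convention)."""
    q0, q1, q2, q3 = q
    roll = math.atan2(2.0 * (q0 * q1 + q2 * q3), 1.0 - 2.0 * (q1 * q1 + q2 * q2))
    sin_pitch = max(-1.0, min(1.0, 2.0 * (q0 * q2 - q3 * q1)))
    return math.degrees(math.asin(sin_pitch)), math.degrees(roll)


# Start with a deliberately wrong orientation (30 degrees of roll) while the
# simulated sensor is level and at rest; the estimate should settle near zero.
q = (math.cos(math.radians(15.0)), math.sin(math.radians(15.0)), 0.0, 0.0)
for _ in range(2000):
    q = madgwick_imu_update(q, gyro=(0.0, 0.0, 0.0), accel=(0.0, 0.0, 1.0), dt=0.01)
pitch, roll = pitch_and_roll_degrees(q)
print(round(pitch, 1), round(roll, 1))
```

Because the accelerometer only observes the direction of gravity, this variant can correct pitch and roll but not heading, which is consistent with the plugin reporting only those two angles.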

To export the data, the plugin must be enabled and a raw data export must be triggered. Once the export is complete, there should be an imu_timeline.csv file with the following columns

Improved

Improve gaze-estimation stability with frozen eye models

Freezing the eye models is the best way to reduce noisy data when in a controlled environment. Prior to this release, freezing the eye model only affected eyeball center and pupil diameter measurements. Starting with this version, freezing the eye model also stabilizes gaze direction estimates.

In order to adapt to slippage, pye3d estimates eyeball positions on different time scales. These serve specific downstream purposes. By default, the short-term model, which integrates only the most recent pupil observations, is used for calculating raw gaze-direction estimates.

In highly controlled environments with few eye movements (e.g. subject uses head rest and is asked to fixate a static target), the most recent pupil observations might not be sufficient to build a stable short-term model. If this is the case, the gaze direction varies more than one would expect given the controlled environment.

Starting in pye3d version 0.1.0, the gaze direction will be inferred from the long-term model if it is frozen.

Freezing the eye model is only recommended in controlled environments as this prevents the model from adapting to slippage. In return, it is able to provide very stable data.

Improve annotation log message - #2148

When creating a new annotation, the log message will no longer show the Pupil Timestamp. Instead, Pupil Capture will show how old the annotation is, while Pupil Player will show the frame index of the world video.

This change should help avoid the confusion associated with displaying Pupil Timestamp.

Prevent log flood in Surface Tracker related to finding homographies - #2150

Previously, failing to locate a surface because of missing homographies would print an error message in the main window. In some cases, this would result in a flood of error logs.

This change ensures that failures of this type are reported only once and are written directly to the log file.
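One common way to get this kind of "report once" behavior with Python's standard logging module is a filter that remembers which messages it has already seen. The sketch below is a generic illustration of that idea, not the code used in the Surface Tracker; the class name, logger name, and message text are hypothetical.

```python
import logging


class LogOnceFilter(logging.Filter):
    """Let each distinct (level, message) pair through only the first time."""

    def __init__(self):
        super().__init__()
        self._seen = set()

    def filter(self, record):
        key = (record.levelno, record.getMessage())
        if key in self._seen:
            return False  # Drop repeats of an already reported message.
        self._seen.add(key)
        return True


# Hypothetical logger name and message, for demonstration only.
demo_logger = logging.getLogger("surface_tracker_demo")
demo_logger.addFilter(LogOnceFilter())
for _ in range(3):
    demo_logger.error("Could not find a homography for the surface")
```

With the filter attached, only the first of the three identical error calls is passed on to the logger's handlers.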

Support upgrading legacy recordings - #2161

Pupil Core software version v1.16 is no longer necessary to upgrade deprecated recording formats. Instead, Pupil v3.4 performs all necessary upgrades by itself. This release also includes a bug fix for an issue with pre-v0.7.4 recordings.

In v1.16, recordings made with Pupil Capture v1.2 or earlier, and Pupil Mobile r0.22.x or earlier, were deprecated because these recordings are missing meta information that is required for the upgrade to the Pupil Player Recording Format 2.0. For details see "Missing Meta Information" in the v1.16 release notes.

Fixed

Use UTF-8 decoding for known UTF-8 encoded text files - #2146

Update circle drawing to display correctly on Apple Silicon devices - #2147

Fix crash on surface tracker export if filling the marker cache is not complete - #2157

Fix surface tracker marker export to use export range - #2158

Developer notes

Dependencies updates

Python dependencies can be updated using pip and the requirements.txt file:

python -m pip install --upgrade pip wheel

pip install -r requirements.txt

pyglui v1.30.0

This new version of pyglui includes the menu icon for the new IMU timeline plugin as well as the new Color_Legend UI element.

from pyglui import ui

color_rgb_red = (1.0, 0.0, 0.0)

label = "red color label"

ui.Color_Legend(color_rgb_red, label)

pye3d v0.1.1

This version improves gaze-estimation stability for frozen eye models. For details see above and the pye3d changelog.

Downloads

To open the RAR-archive on Windows, you will need to use decompression software, such as WinRAR or 7-Zip (both are available for free).

Supported operating systems

Windows 10

Ubuntu 16.04+

macOS Mojave 10.14+

macOS Monterey 12 is not supported by this release
