Saliency in VR: How do people explore virtual environments?

Community Stories

Author(s): Pupil Dev Team

January 9, 2017

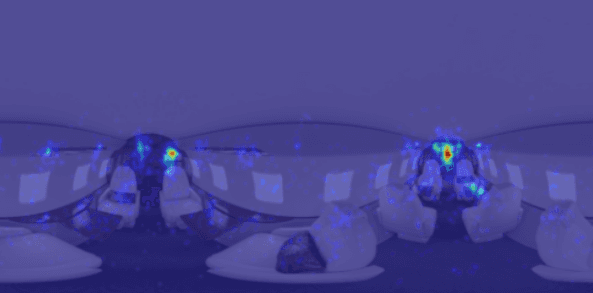

Image Caption: Saliency map generated from ground-truth data collected with the Pupil Labs Oculus DK2 eye tracking add-on, overlaid on one of the stimulus panorama images shown to the participants. Image Source: Fig 5, Page 7.

In their recent research paper "Saliency in VR: How do people explore virtual environments?", Vincent Sitzmann et al. argue that viewing behavior in VR is much more complex than on conventional displays because of the interaction kinematics that make VR possible.

To better understand viewing behavior and saliency in VR, Sitzmann et al. collected a dataset of gaze data and head orientation from users observing omni-directional stereo panoramas in an Oculus Rift DK2 VR headset fitted with the Pupil Labs Oculus Rift DK2 add-on cup.

The dataset shows that gaze and head orientation can be used to build more accurate saliency maps for VR environments. Based on the data, Sitzmann and his colleagues propose new methods to learn and predict time-dependent saliency in VR. The collected data is a first step toward saliency models tailored specifically to VR environments. If successful, such models could approximate and predict gaze from movement data and image content.
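To make the idea of a gaze-based saliency map concrete, here is a minimal sketch of one common way to build one; it is not the authors' method. It assumes gaze samples are already expressed as longitude/latitude in degrees on an equirectangular panorama, places a Gaussian around each sample, and normalizes the result to a peak of 1. The function names and the `sigma_deg` default are hypothetical choices for illustration. Distances are measured as great-circle angles, so a blob near the 360° seam wraps around correctly instead of being cut in half.

```python
import math
from typing import List, Tuple


def to_unit_vector(lon_deg: float, lat_deg: float) -> Tuple[float, float, float]:
    """Convert a longitude/latitude pair (degrees) to a 3D unit vector."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))


def saliency_map(gaze: List[Tuple[float, float]], width: int, height: int,
                 sigma_deg: float = 1.0) -> List[List[float]]:
    """Hypothetical helper: sum one Gaussian per gaze sample on an
    equirectangular grid (rows = latitude, columns = longitude).

    sigma_deg is an assumed blur width in degrees, not a value from the paper.
    """
    sigma = math.radians(sigma_deg)
    gaze_vecs = [to_unit_vector(lon, lat) for lon, lat in gaze]
    grid = [[0.0] * width for _ in range(height)]
    for row in range(height):
        # Pixel-center latitude: +90 at the top row, -90 at the bottom row.
        lat = 90.0 - (row + 0.5) * 180.0 / height
        for col in range(width):
            # Pixel-center longitude: -180 at the left edge, +180 at the right.
            lon = -180.0 + (col + 0.5) * 360.0 / width
            px = to_unit_vector(lon, lat)
            total = 0.0
            for g in gaze_vecs:
                # Great-circle angle between pixel direction and gaze direction.
                dot = sum(a * b for a, b in zip(px, g))
                angle = math.acos(max(-1.0, min(1.0, dot)))
                total += math.exp(-0.5 * (angle / sigma) ** 2)
            grid[row][col] = total
    # Normalize so the most-viewed location has value 1 (skip if no gaze data).
    peak = max(max(r) for r in grid)
    if peak > 0:
        grid = [[v / peak for v in r] for r in grid]
    return grid
```

For example, `saliency_map([(0.0, 0.0)], 36, 18, sigma_deg=10.0)` produces a 36×18 map whose brightest cells sit at the panorama center, while a gaze sample at longitude 180 lights up both the left and right edges equally. A real pipeline would likely use a finer grid, vectorized math, and fixation detection rather than raw samples.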

If you use Pupil in your research and have published work, please send us a note. We would love to include your work here on the blog and in a list of work that cites Pupil.