What is eye tracking?

December 10, 2020

As the proverbial “windows to the soul”, our eyes are a rich source of information about our internal world.

Eye tracking is a powerful method for collecting eye-related signals, such as gaze direction, pupil size, and blink rate. By analyzing these signals, either in isolation or in relation to the visual scene, important insights can be gained into human behaviors and mental states.

It is a versatile approach to answering a range of fundamental questions, such as: What is this person feeling? What are they interested in? How distracted or drowsy are they? What is their state of mental or physical health?

Why and how do we move our eyes?

Let’s start with a seemingly simple question: Why do we move our eyes in the first place? The answers to this question are crucial for understanding why eye movements are so informative about our behaviors and mental states.

As humans, we move our eyes in very specific ways, known as “movement motifs”. To put these in context, it’s helpful to first explore the anatomy of the eye itself.

How does the anatomy of the eye relate to eye movements?

At the back of the human eye is a light-sensitive sheet of cells called the retina. It contains two types of photoreceptor cells: cones, which provide high-resolution color vision; and rods, which are responsible for low-resolution monochromatic vision (Dowling, 2007).

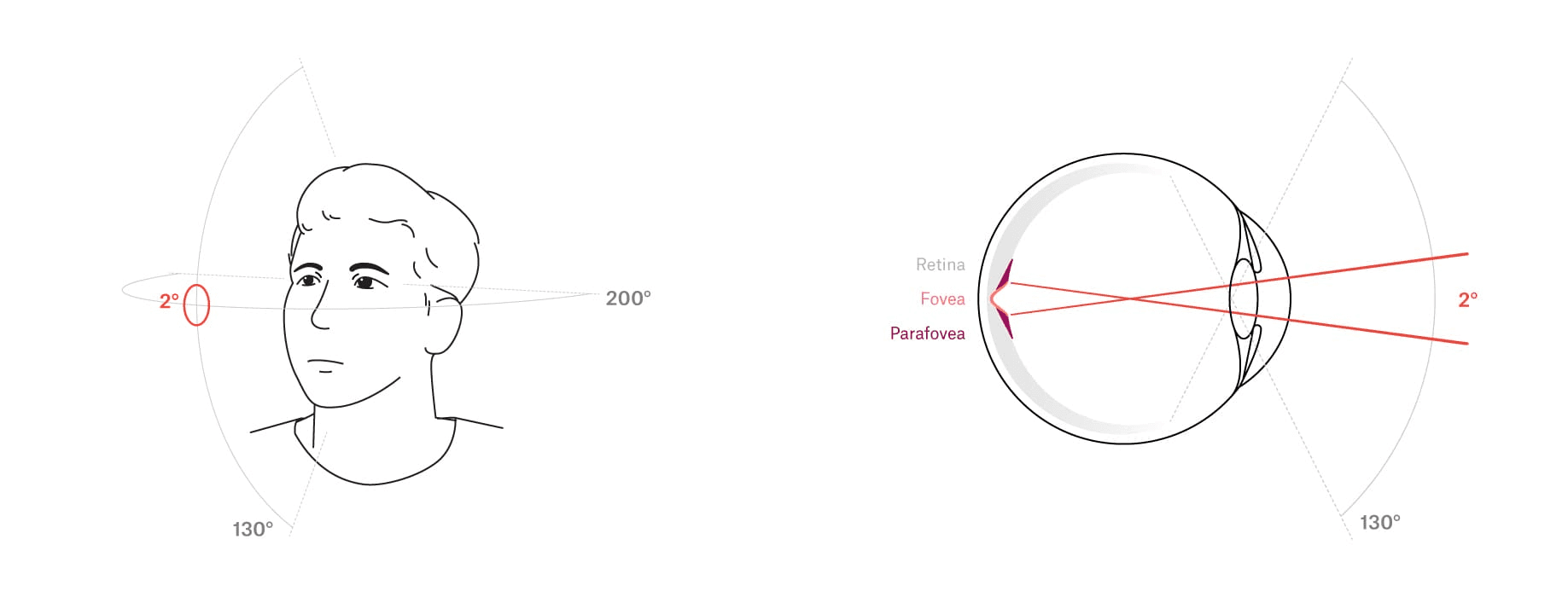

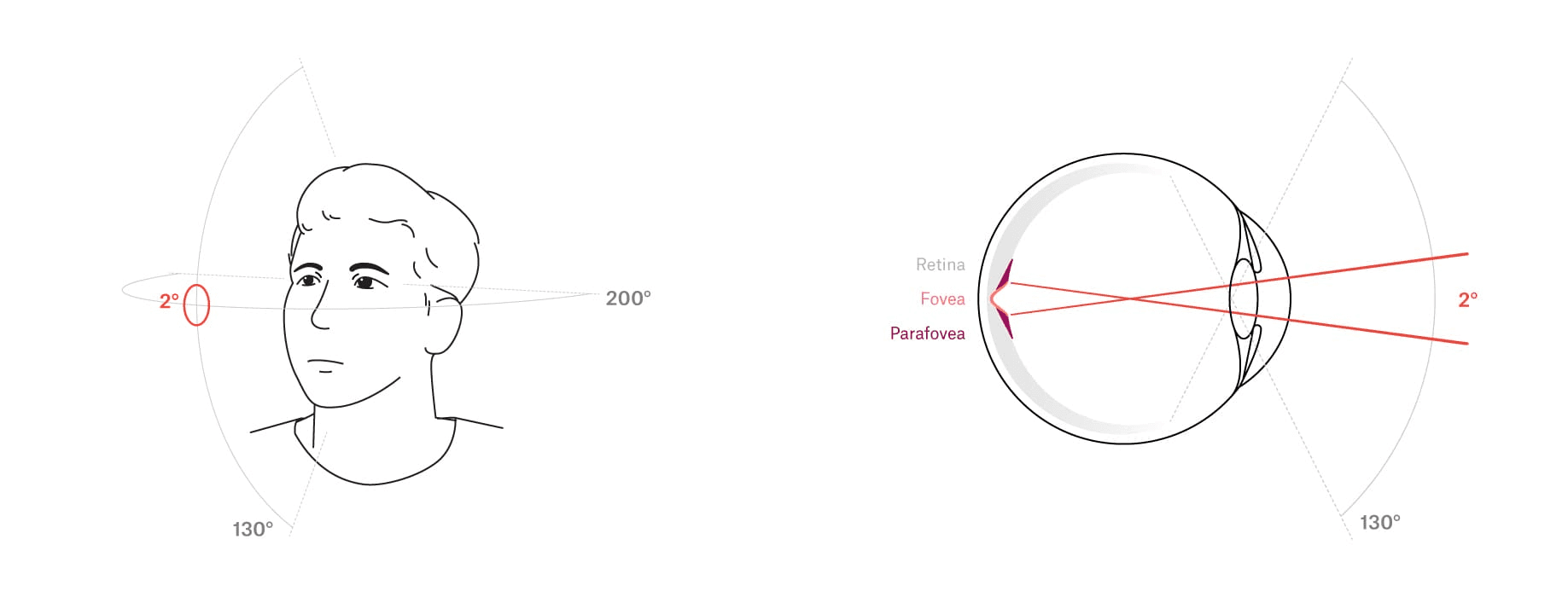

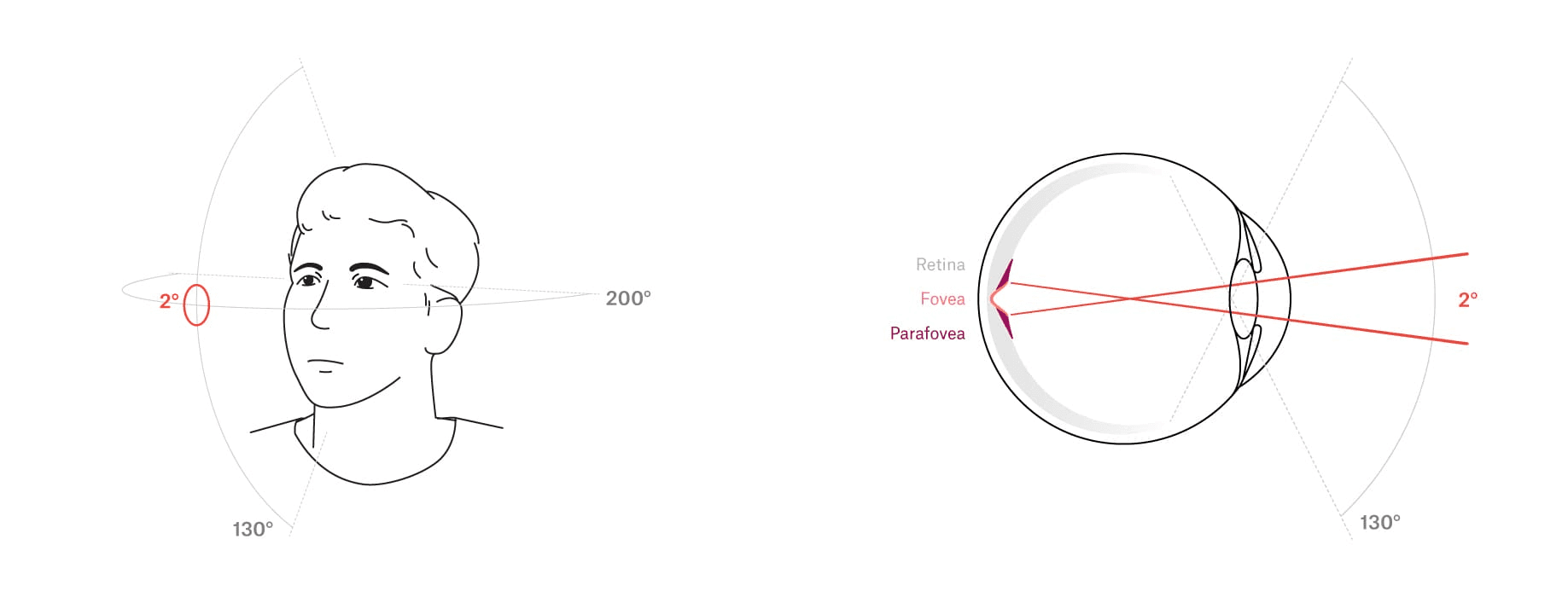

Cones are packed at the highest density within an extremely small, specialized area known as the fovea, which covers a mere 2° around the focal point of each eye (roughly the width of your thumbnail if you hold your arm at full length away from you).

Figure 1: The binocular visual field in humans extends about 130° vertically and 200° horizontally. While the ability to detect motion is almost uniform over this range, color-vision and sharp form perception are restricted to its most central portion. The physiological basis for this is the limited extent of the highly specialized foveal region within the retina. As a consequence, humans need to move their eyes to take in the relevant details present in a given scene.

The fovea functions like a high-intensity “spotlight”, providing sufficient resolution to distinguish the fine details of the visual scene (such as letters on a page). Some details are still distinguishable in the immediately surrounding parafoveal region (5° around fixation). However, beyond this in the periphery, visual resolution diminishes rapidly and the image becomes blurry.
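To make these angular sizes concrete, the sketch below converts between physical size and visual angle using the standard relation θ = 2·atan(size / (2·distance)). The thumbnail width (2 cm) and the arm's length and viewing distance (60 cm) are illustrative assumptions, not values from the text above.

```python
import math

def visual_angle_deg(size, distance):
    """Visual angle (degrees) subtended by an object of `size` at `distance` (same units)."""
    return math.degrees(2 * math.atan(size / (2 * distance)))

def size_for_angle(angle_deg, distance):
    """Inverse relation: the physical extent that subtends `angle_deg` at `distance`."""
    return 2 * distance * math.tan(math.radians(angle_deg) / 2)

# Assumed illustrative values: a 2 cm wide thumbnail held 60 cm from the eye.
thumb_deg = visual_angle_deg(2.0, 60.0)

# Extent of the foveal (2 deg) and parafoveal (5 deg) regions on a surface 60 cm away.
fovea_cm = size_for_angle(2.0, 60.0)
parafovea_cm = size_for_angle(5.0, 60.0)

print(f"thumbnail: {thumb_deg:.2f} deg")
print(f"fovea: {fovea_cm:.2f} cm, parafovea: {parafovea_cm:.2f} cm")
```

Under these assumptions the thumbnail subtends a little under 2°, and the foveal region covers only about 2 cm of a page or screen at 60 cm, which is why reading a line of text requires many eye movements.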

This implies that to use high-resolution foveal vision to take in the different elements of a scene in detail, we have to move our eyes.

Figure 2: Qualitatively different eye movement motifs can be distinguished. Saccades refer to rapid jumps in gaze from fixation to fixation.

Figure 3: During smooth pursuits, gaze follows a moving object in a continuous manner.

How are eye movements classified?

Eye movements can be classified into three discrete movement motifs: fixations, saccades, and smooth pursuits (Rayner & Castelhano, 2007; Findlay & Walker, 2012).

Unlike the rapid movements of the eyes, fixations are relatively long periods (0.2–0.6 s) of steady focus on an object. They make use of the high resolution of the fovea to maximize the visual information gained about the focal object.

Smooth pursuits and saccades are types of proper eye movement. Saccades are rapid, point-to-point eye movements that shift the focus of the eyes abruptly (typically in 20–100 ms) to a new fixation point, while smooth pursuits are continuous movements of the eye to track a moving object.

When people visually investigate a scene, they make a series of rapid saccades, interspersed with relatively longer periods of fixations on its key features, or smooth pursuits of moving objects. This pattern of eye movements makes best use of the limited focus of foveal vision to quickly gather detailed visual information.

Importantly, this information is only processed by the brain during periods of fixation or smooth pursuit, and not during saccades. This means that the detail taken in by the foveal “spotlight” depends on the locations and durations of fixations and smooth pursuits in the visual scene.
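One common way to separate fixations from saccades in recorded gaze data is a velocity threshold: intervals where the eye rotates faster than some cutoff are labeled as saccades, and the rest as fixations. The sketch below illustrates this idea on synthetic data. The function names and the 30°/s threshold are assumptions chosen for illustration, and smooth pursuits are deliberately not handled; it is not the method of any particular eye tracker.

```python
import numpy as np

def classify_ivt(t, x_deg, y_deg, saccade_threshold=30.0):
    """Label each inter-sample interval as 'saccade' or 'fixation'.

    t: timestamps in seconds; x_deg, y_deg: gaze angles in degrees.
    saccade_threshold: angular speed in deg/s above which an interval
    counts as a saccade (assumed value, tune per dataset).
    """
    t, x, y = (np.asarray(a, dtype=float) for a in (t, x_deg, y_deg))
    speed = np.hypot(np.diff(x), np.diff(y)) / np.diff(t)
    return np.where(speed > saccade_threshold, "saccade", "fixation")

def group_events(t, labels):
    """Merge consecutive intervals with the same label into (label, start, end) events."""
    events = []
    start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            events.append((str(labels[start]), float(t[start]), float(t[i])))
            start = i
    return events

# Synthetic 100 Hz recording: fixation at 0 deg, a 10 deg jump, fixation at 10 deg.
t = np.arange(65) * 0.01
x = np.concatenate([np.zeros(30), np.linspace(2.5, 10.0, 4), np.full(31, 10.0)])
y = np.zeros_like(x)

labels = classify_ivt(t, x, y)
events = group_events(t, labels)
for label, start, end in events:
    print(f"{label:8s} {start:.2f}-{end:.2f} s")
```

On this noise-free example the result is a fixation, a saccade lasting 40 ms, and a second fixation. Real recordings contain noise and smooth pursuits, so practical classifiers usually add filtering, minimum-duration rules, or a second threshold.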

The visual information from the fovea has a powerful influence on a person’s cognitive, emotional, physiological, and neurological states. Therefore, by analyzing eye movements alone or in relation to the visual scene in front of them, important insights can be gained into behaviors and mental states.

What is eye tracking used for?

Given the insights that eye tracking offers, it’s no surprise that it has been applied to many different areas of research and industry (Duchowski, 2002). The scope and possibilities of eye tracking are huge, and continue to expand as novel applications are created and developed.

With eye tracking it is possible to answer fundamental questions about human behavior: What is this person interested in? What are they looking at? What are they feeling? What do their eyes tell us about their health, or their coordination, or their alertness?

The answers to questions such as these are relevant to many different fields. Below, we explore some important examples of current applications of eye tracking.

Academic research

Eye tracking began in 1908 when Edmund Huey built a device in his lab to study how people read. This academic tradition lives on to the present day, with eye tracking still being a widely used experimental method. Eye-tracking results continue to advance the frontiers of academic research, especially in the fields of psychology, neuroscience, and human–computer interaction (HCI). For a diverse list of publications and projects that have used eye tracking in recent years, check out our publications page.

Marketing and design

The movements of the eyes hold a wealth of information about someone’s interests and desires, making eye tracking a powerful tool within the fields of marketing and design. It enables insights to be gained into how users interact with or attend to graphical content (dos Santos et al., 2015), including what they are most interested in. This can help to optimize anything from UX design, to point-of-sale displays, to video content, to online store layouts.

Performance training

In sport and skilled professions, every little movement can make the difference between a poor performance and a good one. Professionals such as athletes, skilled laborers, and surgeons (or their managers or coaches) use eye tracking to understand what visual information is most important to attend to for peak performance, and to train motor coordination and skilled action (Hüttermann et al., 2018; Causer et al., 2014).

Medicine and healthcare

Eye tracking is an invaluable diagnostic and monitoring tool within medicine. It can be applied to diagnose specific conditions, such as concussion (Samadani, 2015), and to improve and complement existing methods of diagnosis (Brunyé et al., 2019). Non-invasive eye tracking methods make it possible for doctors to quickly and easily monitor medical conditions and diseases such as Alzheimer’s (Molitor et al., 2015).

Communications

The eyes express vital information during social interactions. Remote communication/telepresence technologies (such as virtual meeting spaces) are becoming more engaging thanks to the incorporation of eye movement information in virtual avatars.

Interactive applications

Computer-based and virtual systems are exciting and rapidly growing domains of application for eye tracking, especially with regard to user interaction based on eye gaze.

The field of HCI has long pioneered the use of eye movements as active signals for controlling computers (Köles, 2017). These advances are being applied to many different challenges, from the creation of assistive technologies that allow disabled people to interact hands-free with a computer using an “eye mouse”, to prosthetics that respond intelligently to eye gaze (Lukander, 2016).

Eye tracking data can be used to assess a user’s interests and modify online videos and search suggestions in real time (see the Fovea project for an example of this), with implications for online shopping and content creation. In the world of e-learning, this interactive approach is being used to responsively adapt virtual learning content based on eye-related measurements of key factors, such as a learner’s interests, cognitive load, and alertness (Al-Khalifa & George, 2010; Rosch & Vogel-Walcutt, 2013).

Finally, the computer game and VR industry is a hotbed of innovative eye tracking applications, with game designers making use of eye-tracking data to boost performance and improve user immersion and interaction within games. Emerging applications include “foveated rendering”, which boosts frame rates by selectively rendering graphics at the highest resolution within foveal vision (Guenter et al., 2012), and eye-gaze-dependent interfaces, which offer smarter and more intuitive modes of user interaction that respond dynamically to where the user is looking (Corcoran et al., 2012).

What is an eye tracker?

What exactly are eye trackers and how do they work? Broadly defined, the term “eye tracker” describes any system that measures signals from the eyes, including eye movement, blinking, and pupil size.

They come in many different shapes and sizes, ranging from invasive systems that detect the movement of sensors attached to the eye itself (such as in a contact lens), to the more commonly used non-invasive eye trackers.

Non-invasive methods can broadly be divided into electro-oculography, which detects eye movements using electrodes placed near the eyes, and video-oculography (video-based eye tracking), which uses one or more cameras to record videos of the eye region.

What are the main types of video-based eye tracker and how do they work?

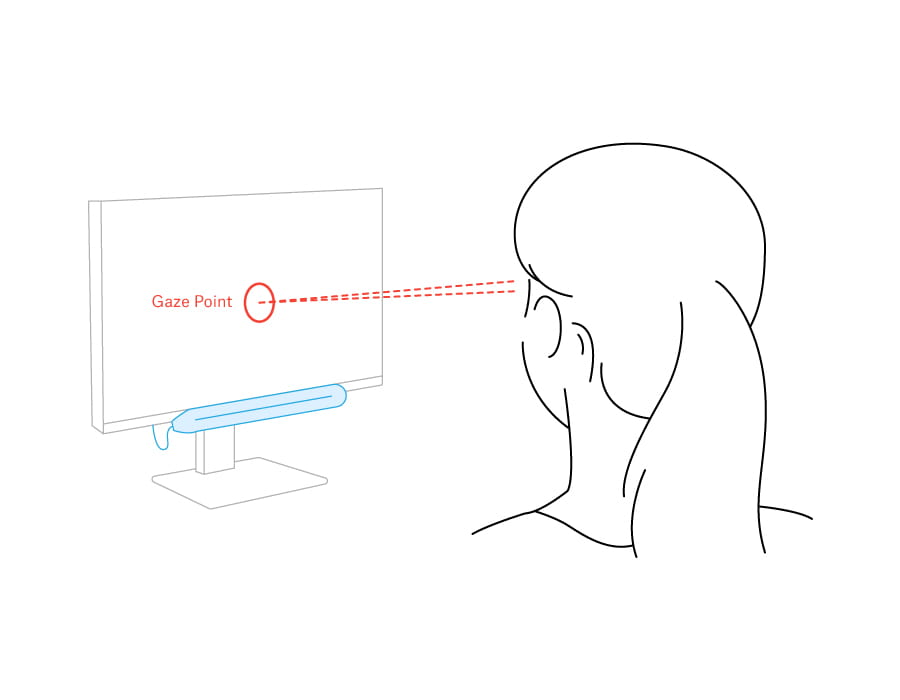

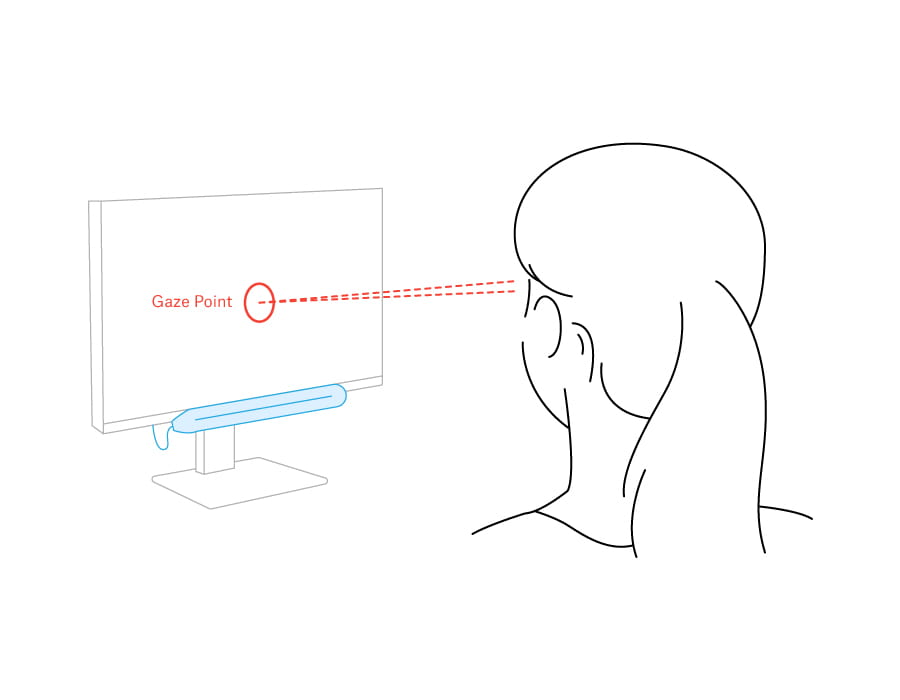

Video-based eye-tracking systems can be classified into remote (stationary) and wearable (head-mounted) eye trackers.

Remote

Track eye movements from a distance

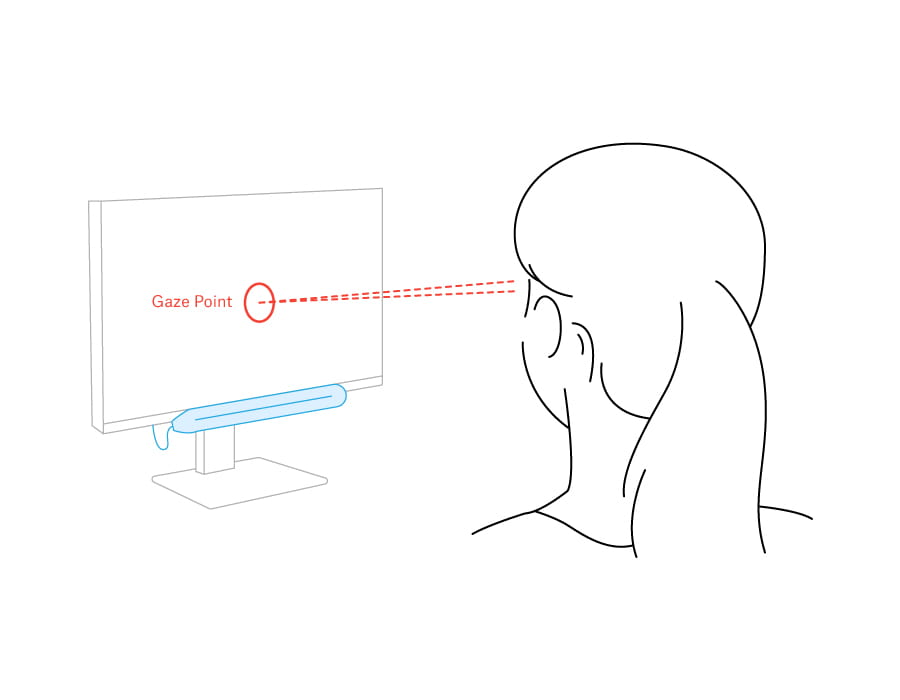

System mounted on or near a PC monitor

Subject sits in front of screen, largely stationary

Allow analysis and/or user interaction based on eye movement relative to on-screen content (e.g., websites, computer games, or assistive technologies such as the “eye mouse”)

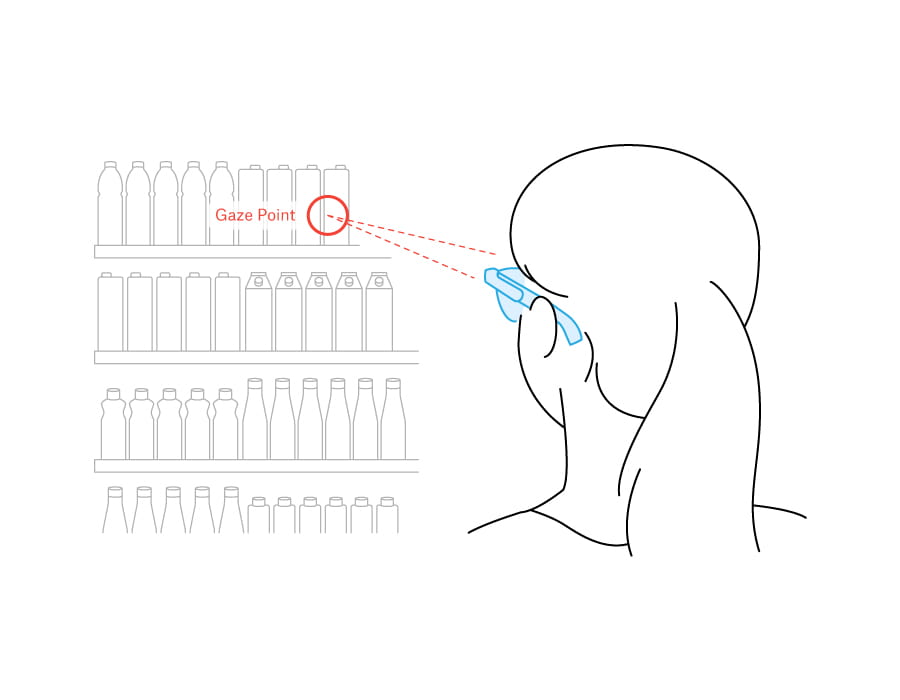

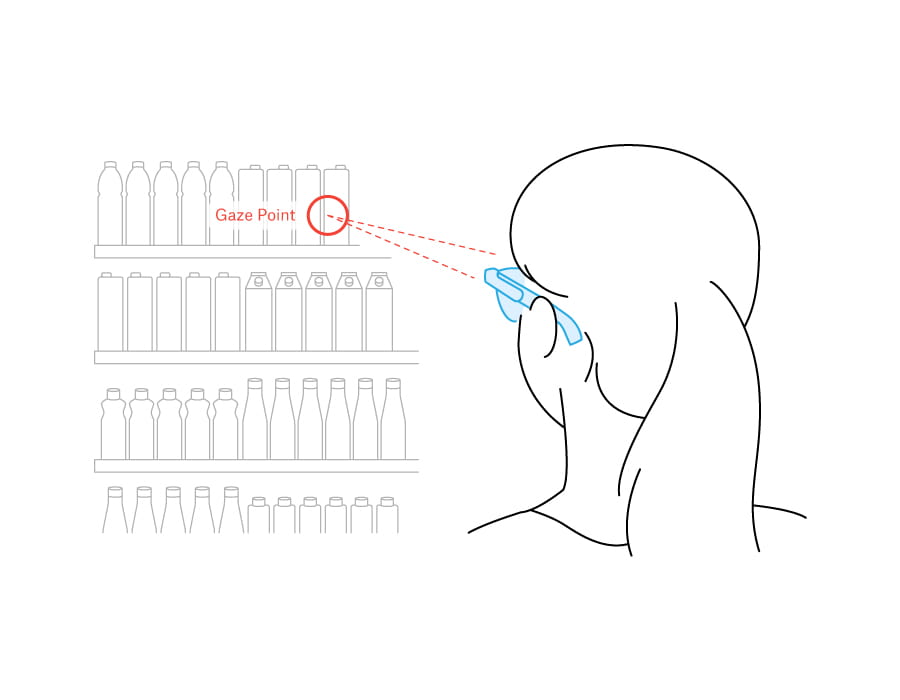

Wearable

Track eye movements close up

System mounted on a wearable, eyeglasses-like frame

Subject free to move and engage with real and VR environments

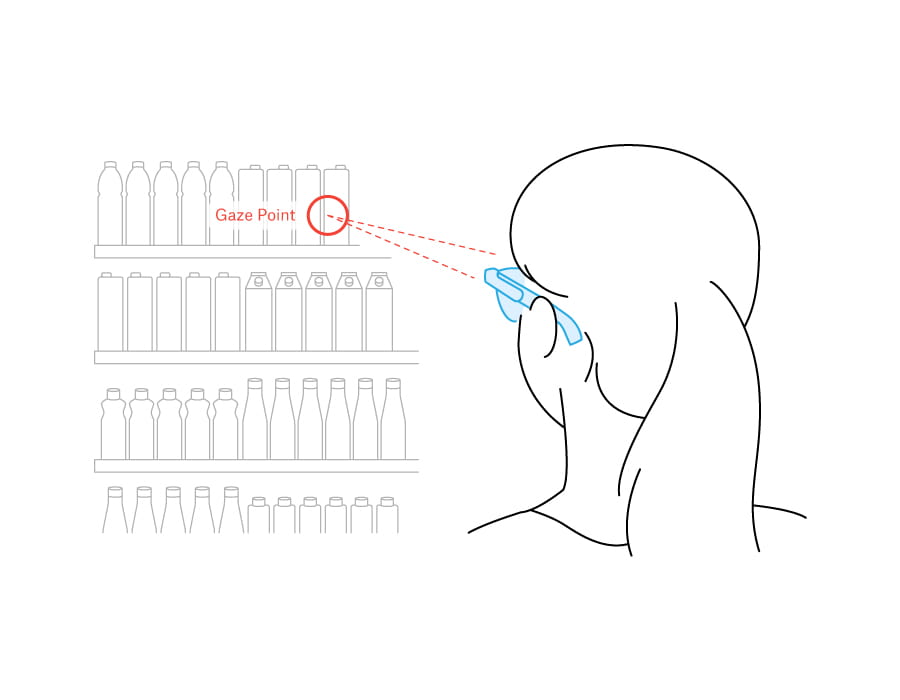

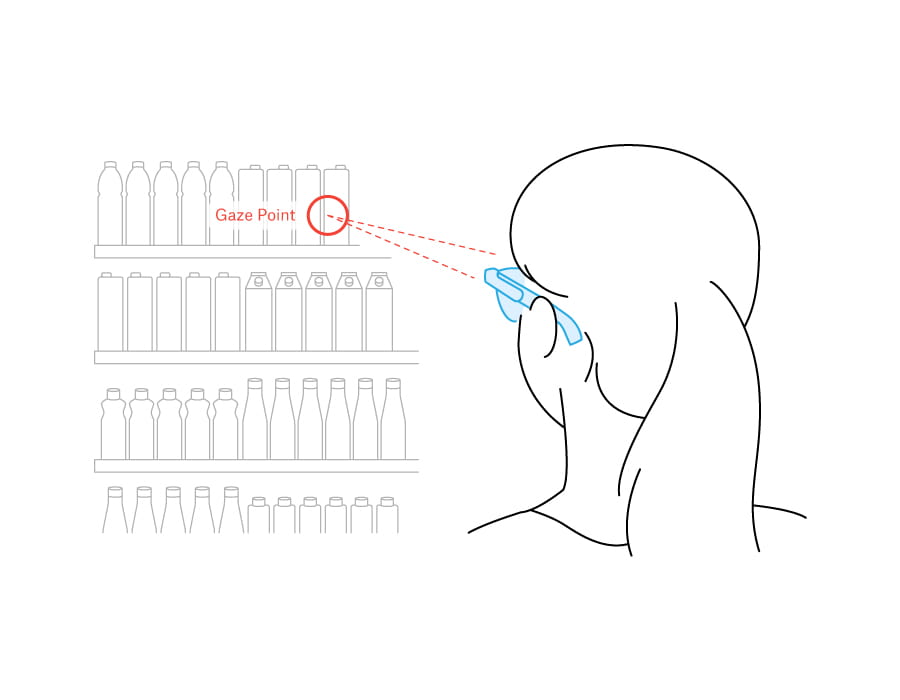

Forward-facing camera allows analysis of eye gaze relative to people and objects in view while the subject engages in tasks and interactions (e.g., during sport, shopping, or product use)

Remote systems mount the video camera(s) on or near a stationary object displaying the visual stimulus, such as a PC monitor, and track a person’s eyes from a distance (Narcizo et al., 2013). The person being tracked sits in front of the screen to perform the task of interest (such as reading or browsing a website) and remains largely stationary throughout.

Wearable systems, in contrast, mount the video camera(s) onto a glasses-like frame, allowing the subject to move freely while their eyes are tracked from close up (Wan et al., 2019). Typically, they also include a forward-facing camera that records a video of the person’s field of view, enabling eye-related signals to be analyzed in the context of the changing visual scene.

All video-based eye trackers, whether remote or wearable, detect the appearance and/or features of the eye(s) under the illumination of either “passive”, ambient light or an “active”, near-infrared light source. In active systems, the direct reflection of the light source off the cornea (known as the “glint”) can be used as an additional feature for tracking.

What are the relative advantages and disadvantages of remote and wearable systems?

As remote eye trackers are coupled with a monitor displaying a defined visual stimulus, such as a website, the exact positions of text and visual elements relative to the user’s eyes are always known. Defining the areas of interest on the stimulus in this way facilitates “closed loop” analysis of the collected eye tracking data, based on the known relationships between the stimulus and eye gaze direction.

Additionally, remote eye trackers allow the stimulus to be changed in a controlled way depending on eye-related signals such as gaze direction, opening up rich possibilities for interactive feedback. However, they offer limited scope for the subject to move, which restricts their usage to static, screen-based tasks.

In contrast, the freedom of movement offered by wearable eye trackers vastly expands the range of tasks and situations in which eye-related signals can be recorded and analyzed. The possibility of recording eye tracking data in open-ended environments yields rich, complex video datasets, with methods for analyzing these data continuing to be an important topic of active research (Panetta et al., 2019).

What kinds of eye-related signals can eye trackers measure?

Eye tracking systems can measure a range of eye-related signals, which provide a wealth of information about a person’s behaviors, and mental and physical states (Eckstein et al., 2017):

Gaze direction measurement is crucial for characterizing fixations, saccades, and smooth pursuits, allowing insights to be gained into cognitive, emotional, physiological, and/or neurological processes;

Pupil size and reactivity, as measured by pupillometry, can be used to illuminate cognitive processes as diverse as emotion, language, perception, and memory (Sirois & Brisson, 2014; Köles, 2017);

Blink rate, meanwhile, is a reliable measure of cognitive processing related to learning and goal-oriented behavior (Eckstein et al., 2017);

Eye state (openness/closure) allows physiological states of arousal or drowsiness to be assessed (e.g. Tabrizi & Zoroofi, 2008).
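As a small illustration of how one of these signals can be derived from another, the sketch below estimates blink rate from a per-sample eye-state signal by counting open-to-closed transitions. The function name, the 10 Hz sampling rate, and the synthetic data are assumptions for illustration only.

```python
def blink_rate_per_minute(timestamps, eye_open):
    """Count closed-eye episodes and express them per minute of recording.

    timestamps: sample times in seconds; eye_open: one boolean per sample.
    """
    blinks = 0
    for prev, curr in zip(eye_open, eye_open[1:]):
        if prev and not curr:  # an open -> closed transition starts a new episode
            blinks += 1
    duration_min = (timestamps[-1] - timestamps[0]) / 60.0
    return blinks / duration_min

# Synthetic one-minute recording at 10 Hz with three short eye closures.
timestamps = [i / 10 for i in range(601)]
eye_open = [True] * 601
for start, stop in [(50, 53), (200, 202), (400, 404)]:
    for i in range(start, stop):
        eye_open[i] = False

rate = blink_rate_per_minute(timestamps, eye_open)
print(f"{rate:.1f} blinks per minute")
```

This simple count treats every closure as a blink. Telling blinks apart from the longer closures associated with drowsiness would need an additional duration criterion.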

What factors should be considered when choosing an eye tracker?

The question of whether to use a remote or a wearable eye tracker is multifaceted, depending on the particular task and/or question of interest (Punde et al., 2017).

One major factor to consider is the degree of movement that the task involves. Does the task involve the person sitting still; for example, while browsing a website? In this case, remote eye trackers are generally preferred. Or does the person need to move about; for example, while walking through a shop or playing sport? Do they need to interact with multiple screens of different sizes? In such cases, a wearable eye tracker would typically be used.

The task determines the likely factors affecting the performance of the eye tracker. For example, for an outdoor, high-dynamic task, glare from sunlight or slippage (movement of the eye tracker relative to the eyes) could have a substantial influence on performance. In contrast, for long-duration, static recordings in the lab, whether the accuracy and quality of eye-related signals can be maintained over time might be the key indicator of performance.

Therefore, depending on the task, a range of variables might be important to consider when evaluating the performance of an eye tracker, such as (Ehinger et al., 2019):

Accuracy and precision, which quantify the angular error and the inherent “noise” of the gaze direction estimate, respectively;

Recording quality of other eye-related signals, including pupil size, blinking, and eye state;

Robustness (reliability in response) to, for example, changes in lighting, movement of the eye tracker relative to the eyes, subject head translation/rotation, or temporary eye occlusion/closure;

Signal deterioration over time, which affects how long data from the system can be reliably used before the eye tracker requires recalibration.
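The first of these variables can be made concrete with a short calculation. In the sketch below, accuracy is taken as the mean angular offset between gaze samples and a known target, and precision as the root mean square of the angular distances between successive samples. These are widely used definitions, but the exact formulas differ between studies, so treat the function names and conventions here as assumptions.

```python
import numpy as np

def to_unit_vectors(azimuth_deg, elevation_deg):
    """Convert gaze angles in degrees to 3D unit direction vectors."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    return np.stack([np.cos(el) * np.sin(az), np.sin(el), np.cos(el) * np.cos(az)], axis=-1)

def angle_between_deg(u, v):
    """Angle in degrees between unit vectors u and v (broadcast over the leading axis)."""
    cos = np.clip(np.sum(u * v, axis=-1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))

def accuracy_deg(gaze, target):
    """Mean angular offset between gaze samples and the known target direction."""
    return float(np.mean(angle_between_deg(gaze, target)))

def precision_rms_deg(gaze):
    """Root mean square of the angular distances between successive gaze samples."""
    step = angle_between_deg(gaze[:-1], gaze[1:])
    return float(np.sqrt(np.mean(step ** 2)))

# Synthetic example: the subject fixates a target straight ahead, while the
# estimated gaze is offset by about 1 deg and jitters by 0.2 deg between samples.
target = to_unit_vectors(0.0, 0.0)
gaze = to_unit_vectors(np.array([0.9, 1.1, 0.9, 1.1]), np.zeros(4))

acc = accuracy_deg(gaze, target)
prec = precision_rms_deg(gaze)
print(f"accuracy: {acc:.2f} deg, precision (RMS): {prec:.2f} deg")
```

In this example the systematic offset (accuracy) is 1.0° and the sample-to-sample noise (precision) is 0.2°, which shows that the two measures capture different properties of the same signal.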

More practical considerations such as the cost of the system and its ease of use (in particular whether complicated setup and calibration are required) can be important in selecting the right eye tracker for the job.

Finally, it’s important to remember that any single measure alone is normally insufficient for evaluating the suitability of a given eye tracker to a particular application. Rather, by considering a set of relevant variables, a better-informed choice can be made.

How is eye gaze direction estimated?

How exactly do video-based eye trackers figure out where someone is looking? In essence, they use information contained in eye images to estimate the direction of a person’s gaze. Gaze estimation is a challenging problem that has been tackled using various approaches (Hansen & Ji, 2010), with active research continuing to improve its accuracy and reliability.

The accuracy and reliability of eye gaze estimation of both remote and wearable eye trackers are affected by factors such as inter-individual differences in eye anatomy. The use of wearable trackers in high-dynamic environments means that their estimation of eye gaze can also be influenced by additional factors, such as varying levels of light outdoors or slippage of the eye tracker during physical activity.

In this section, we explore some key questions about eye gaze estimation, including: Which features (aspects) of the eye image can be used to estimate eye gaze direction? How do the algorithms used to perform the estimation work? What do recent developments in AI-based image analysis have to offer to the challenge of eye gaze estimation?

How do gaze estimation algorithms work?

Gaze estimation algorithms extract meaningful signals from eye images and transform them into estimates of gaze direction or gaze points in 3D space. Although the exact methods vary, all approaches rely on correlations between quantitative features in the eye image and gaze direction (Kar & Corcoran, 2017).

The features used range in complexity—from simple point features (like the pupil center or corneal reflection), to low-dimensional geometric shapes (such as ellipses describing the pupil), to high-dimensional representations of the entire eye region. In practice, combining features at different levels of complexity leads to more robust and accurate gaze estimates.
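As an illustration of the simplest kind of feature, the sketch below estimates a pupil center as the centroid of the darkest pixels in a grayscale eye image. It assumes the pupil is the darkest region in the image, uses a fixed threshold, and runs on a synthetic image; the function name and threshold value are hypothetical, and practical detectors are considerably more robust.

```python
import numpy as np

def pupil_center(gray, dark_threshold=50):
    """Estimate the pupil center as the centroid of pixels darker than `dark_threshold`.

    gray: 2D array of pixel intensities (0-255). Returns (x, y) or None.
    """
    ys, xs = np.nonzero(gray < dark_threshold)
    if xs.size == 0:
        return None  # no dark pixels found, e.g. while the eye is closed
    return float(xs.mean()), float(ys.mean())

# Synthetic 120x160 eye image: bright background with a dark disc standing in for the pupil.
h, w = 120, 160
yy, xx = np.mgrid[0:h, 0:w]
image = np.full((h, w), 200, dtype=np.uint8)
image[(xx - 90) ** 2 + (yy - 50) ** 2 <= 15 ** 2] = 20

center = pupil_center(image)
print(center)
```

A single point like this is cheap to compute but sensitive to eyelashes, shadows, and reflections, which is one reason richer features such as fitted ellipses or the full eye image are often combined with it.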

Figure 4: Gaze estimation algorithms differ along several key dimensions. One is the complexity of features extracted from raw eye images, ranging from simple points to full eye images. Another is the degree to which domain knowledge—about ocular anatomy and motion—informs the assumptions built into the algorithm, distinguishing explicit from implicit approaches.

Since gaze estimation maps an input feature space (the eye image) to an output variable (gaze direction), it can be tackled using either traditional computer vision techniques or machine learning. In recent years, AI-based approaches have become increasingly prominent due to major advances in computer vision (Voulodimos et al., 2018).

How do traditional and AI-based algorithms differ?

While all gaze estimation methods aim to map eye features to gaze direction, they differ in how the transformation is modeled.

Traditional algorithms are based on theoretical and empirical insights and use hand-crafted rules written in code. These typically require only small amounts of calibration data—recorded samples that relate eye features to known gaze targets. Two main types exist:

Regression-based methods, which fit generic mathematical functions to calibration data. These functions implicitly capture the mapping from features to gaze direction.

Model-based methods, which explicitly model the geometry, motion, and/or physiology of the eye in 3D.
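A regression-based method of the kind described above can be sketched in a few lines: a second-order polynomial is fitted by least squares to calibration samples that pair pupil-center positions with known gaze targets. The polynomial order, the normalized pupil coordinates, the 3×3 calibration grid, and the synthetic "true" mapping are all assumptions made for illustration.

```python
import numpy as np

def design_matrix(px, py):
    """Second-order polynomial terms of the pupil-center coordinates."""
    px, py = np.asarray(px, dtype=float), np.asarray(py, dtype=float)
    return np.stack([np.ones_like(px), px, py, px * py, px ** 2, py ** 2], axis=-1)

def fit_gaze_mapping(pupil_xy, target_xy):
    """Least-squares fit of the polynomial coefficients from calibration samples."""
    A = design_matrix(pupil_xy[:, 0], pupil_xy[:, 1])
    coeffs, *_ = np.linalg.lstsq(A, target_xy, rcond=None)
    return coeffs  # shape (6, 2): one column per gaze coordinate

def predict_gaze(coeffs, pupil_xy):
    """Apply the fitted mapping to new pupil-center positions."""
    return design_matrix(pupil_xy[:, 0], pupil_xy[:, 1]) @ coeffs

def true_mapping(p):
    """Hypothetical ground-truth mapping used only to generate synthetic calibration data."""
    gx = 960 + 800 * p[:, 0] + 30 * p[:, 0] * p[:, 1]
    gy = 540 - 450 * p[:, 1] + 20 * p[:, 1] ** 2
    return np.stack([gx, gy], axis=-1)

# Nine-point calibration grid in normalized pupil coordinates.
grid = np.array([(x, y) for y in (-1.0, 0.0, 1.0) for x in (-1.0, 0.0, 1.0)])
coeffs = fit_gaze_mapping(grid, true_mapping(grid))

new_pupil = np.array([[0.5, -0.25]])
predicted = predict_gaze(coeffs, new_pupil)
print(predicted, true_mapping(new_pupil))
```

Because the synthetic mapping is itself a second-order polynomial, the fit recovers it almost exactly. With real data the function only approximates the underlying geometry, and it stops being valid once the tracker moves relative to the eye.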

AI-based algorithms, in contrast, use large datasets to train neural networks to learn this mapping automatically. The need for large, high-quality training data is one of the key challenges in developing these systems.

Hybrid approaches combine the strengths of both. In these systems, learning-based methods are guided by inductive biases drawn from explicit models of ocular mechanics and optics. NeonNet, the core gaze-estimation pipeline in Neon, is one such hybrid method.

What are the strengths of each approach?

Traditional algorithms are well-suited for controlled environments where lighting is stable, head motion is limited, and calibration can be repeated. Their logic is transparent and modifiable (especially when open source), which can be advantageous for development and debugging.

AI-based methods offer greater robustness in dynamic, real-world conditions. They adapt better to changes in lighting, partial occlusion, and tracker slippage, and can significantly reduce the need for user calibration. These strengths make them ideal for mobile, daily-life eye tracking and for users who prioritize ease of use.

Hybrid methods like NeonNet offer the best of both worlds. They achieve robustness through data-driven learning, maintain accuracy through model-informed structure, and even provide deeper insights—such as tracking the time-varying geometry of the eye (e.g. eyeball position, pupil size)—in real time.

How is the visual scene analyzed to yield meaningful insights?

In addition to analyzing eye-related signals in isolation, analysis of the visual scene or stimulus in relation to these signals provides a powerful approach to gaining insight into a person’s behaviors and mental states.

Imagine someone shopping – if we just knew their eye movements over a ten minute period, that alone would not tell us very much. But if we also knew what had been in their field of view during that time – the products on the shelves, the price tags, the advertising posters – then we could capture their visual journey in full and begin to understand what motivated their choices to buy certain products.

Visual content analysis is the process of identifying and labeling distinct objects and temporal episodes in the video data of the visual scene or stimulus. By analyzing a person’s eye movement data in the context of these objects and episodes, meaningful high-level insights can be drawn about underlying behaviors and mental states.

How is visual content analysis performed?

The approach to visual content analysis used differs radically depending on whether the content of the visual stimulus is known (e.g., in screen-based tasks, such as browsing a website) or unknown beforehand (e.g., in real-world scenarios, such as playing sport).

Remote eye tracking systems typically use a screen to display a defined visual stimulus. This approach allows eye movements to be analyzed directly in relation to known objects, features, and areas of the stimulus (for example, the areas covered by different images on a website), facilitating subsequent interpretation of the eye movement data.

In contrast, for video data collected in high-dynamic situations (e.g., shopping or playing sport) from the forward-facing camera of a wearable eye tracker, visual content analysis requires the objects and episodes in the video to first be identified and labeled before the eye gaze data can be analyzed and interpreted.

How can video data from high-dynamic situations be analyzed?

While manual labeling of the objects or episodes in a scene video is possible, this becomes prohibitively time-consuming as the amount of data increases—this is especially true for pervasive eye tracking in high-dynamic environments.

Therefore, to capitalize on the large amount of information in high-dynamic video datasets, semi- and fully-automated methods of analysis are required, such as area-of-interest (AOI) tracking and semantic analysis (Fritz & Paletta, 2010; Panetta et al., 2019). These methods employ computer vision algorithms to perform object/episode recognition, classification, and labeling.

What are the most common metrics for analyzing eye movements in relation to the visual stimulus?

After labeling, the eye movement data can be summarized in relation to the visual stimulus or scene using a variety of spatial and temporal metrics, and subsequently visualized (Blascheck et al., 2017). These metrics range from scanpaths (relatively unprocessed plots of eye movements over time), to attention maps (summarizing the time spent fixating different parts of the stimulus or scene), to AOI-based metrics such as dwell time (measuring the total time spent fixating within a specific area, for example on a given object).

These metrics enable eye movement data to be meaningfully summarized in relation to the visual content, allowing high-level insights to be drawn about the mental states underlying eye movements.
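An AOI-based metric such as dwell time is straightforward to compute once fixations and areas of interest are available. The sketch below sums fixation durations per rectangular AOI. The AOI names, pixel coordinates, and fixation values are invented for illustration, and AOIs are assumed to be axis-aligned and non-overlapping.

```python
from collections import defaultdict

def dwell_times(fixations, aois):
    """Sum fixation durations per area of interest.

    fixations: iterable of (x, y, duration_s) tuples.
    aois: dict mapping a name to (x_min, y_min, x_max, y_max).
    """
    totals = defaultdict(float)
    for x, y, duration in fixations:
        for name, (x0, y0, x1, y1) in aois.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                totals[name] += duration
                break  # AOIs are assumed not to overlap
    return dict(totals)

# Hypothetical AOIs in scene-image pixel coordinates.
aois = {
    "product": (100, 100, 400, 300),
    "price_tag": (420, 250, 520, 300),
}

# Hypothetical fixations; the last one falls outside every AOI.
fixations = [
    (150, 200, 0.30),
    (430, 260, 0.25),
    (300, 150, 0.45),
    (700, 500, 0.20),
]

result = dwell_times(fixations, aois)
print(result)
```

Here the two fixations on the product add up to 0.75 s of dwell time, compared with 0.25 s on the price tag. For wearable recordings, the AOI rectangles would first have to be located in each video frame, which is where the labeling methods described earlier come in.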

Summary

When analyzed alone or in relation to a visual stimulus or scene, eye movement data obtained from an eye tracker can yield rich insights into a person’s behaviors and mental states. The insights gained from eye tracking are valuable in diverse fields, from psychology to medicine, marketing to performance training, and human–computer interaction (HCI) research to computer game rendering.

Numerous high-quality gaze estimation algorithms have been developed, and progress continues to be made in improving both traditional and AI-based algorithms. These advances offer greater accuracy, enhanced reliability, and increased ease of use. In addition to gaze direction, other eye-related signals, such as pupil size, blink rate, and eye state (openness/closure), are useful sources of information.

The most commonly used types of eye-tracking systems are remote and wearable video-based eye trackers, with the decision of which system to use depending on the particular situation or question of interest. While remote systems facilitate closed-loop analysis of the visual stimulus in static situations, wearable eye trackers allow freedom of movement, yielding expressive, high-dynamic datasets that open up rich opportunities for applying eye tracking to novel situations and questions.

References

Al-Khalifa HS, George RP. Eye tracking and e-learning: seeing through your students' eyes. eLearn. 2010 Jun 1;2010(6).

Blascheck T, Kurzhals K, Raschke M, Burch M, Weiskopf D, Ertl T. Visualization of eye tracking data: a taxonomy and survey. Computer Graphics Forum. 2017;36(8):260–84.

Brunyé TT, Drew T, Weaver DL, Elmore JG. A review of eye tracking for understanding and improving diagnostic interpretation. Cognitive Research: Principles and Implications. 2019 Feb 22;4(1):7.

Corcoran PM, Nanu F, Petrescu S, Bigioi P. Real-time eye gaze tracking for gaming design and consumer electronics systems. IEEE Transactions on Consumer Electronics. 2012 May;58(2):347–55.

Dowling J. Retina. Scholarpedia. 2007 Dec 7;2(12):3487.

dos Santos RD, de Oliveira JH, Rocha JB, Giraldi JD. Eye tracking in neuromarketing: a research agenda for marketing studies. International Journal of Psychological Studies. 2015 Mar 1;7(1):32.

Duchowski AT. A breadth-first survey of eye-tracking applications. Behavior Research Methods, Instruments, & Computers. 2002 Nov 1;34(4):455–70.

Eckstein MK, Guerra-Carrillo B, Miller Singley AT, Bunge SA. Beyond eye gaze: What else can eyetracking reveal about cognition and cognitive development? Developmental Cognitive Neuroscience. 2017 Jun 1;25:69–91.

Ehinger BV, Groß K, Ibs I, König P. A new comprehensive eye-tracking test battery concurrently evaluating the Pupil Labs glasses and the EyeLink 1000. PeerJ. 2019 Jul 9;7:e7086.

Findlay J, Walker R. Human saccadic eye movements. Scholarpedia. 2012 Jul 27;7(7):5095.

Fritz G, Paletta L. Semantic analysis of human visual attention in mobile eye tracking applications. In: 2010 IEEE International Conference on Image Processing. 2010. p. 4565–8.

Guenter B, Finch M, Drucker S, Tan D, Snyder J. Foveated 3D graphics. ACM Trans Graph. 2012 Nov 1;31(6):164:1–164:10.

Hansen DW, Ji Q. In the eye of the beholder: a survey of models for eyes and gaze. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2010 Mar;32(3):478–500.

Hüttermann S, Noël B, Memmert D. Eye tracking in high-performance sports: evaluation of its application in expert athletes. International Journal of Computer Science in Sport. 2018 Dec 1;17(2):182–203.

Kar A, Corcoran P. A review and analysis of eye-gaze estimation systems, algorithms and performance evaluation methods in consumer platforms. IEEE Access. 2017;5:16495–519.

Köles M. A review of pupillometry for human-computer interaction studies. Periodica Polytechnica Electrical Engineering and Computer Science. 2017 Sep 1;61(4):320–6.

Lukander K. A short review and primer on eye tracking in human computer interaction applications. arXiv preprint arXiv:1609.07342. 2016 Sep 23.

Molitor RJ, Ko PC, Ally BA. Eye movements in Alzheimer’s disease. J Alzheimers Dis. 2015;44(1):1–12.

Narcizo FB, Queiroz JER de, Gomes HM. Remote eye tracking systems: technologies and applications. In: 2013 26th Conference on Graphics, Patterns and Images Tutorials. 2013. p. 15–22.

Panetta K, Wan Q, Kaszowska A, Taylor HA, Agaian S. Software architecture for automating cognitive science eye-tracking data analysis and object annotation. IEEE Transactions on Human-Machine Systems. 2019 Jun;49(3):268–77.

Punde PA, Jadhav ME, Manza RR. A study of eye tracking technology and its applications. In: 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM). 2017. p. 86–90.

Rayner K, Castelhano M. Eye movements. Scholarpedia. 2007 Oct 9;2(10):3649.

Rosch JL, Vogel-Walcutt JJ. A review of eye-tracking applications as tools for training. Cogn Tech Work. 2013 Aug 1;15(3):313–27.

Samadani U. A new tool for monitoring brain function: eye tracking goes beyond assessing attention to measuring central nervous system physiology. Neural Regen Res. 2015 Aug;10(8):1231–3.

Sirois S, Brisson J. Pupillometry. WIREs Cognitive Science. 2014;5(6):679–92.

Tabrizi PR, Zoroofi RA. Open/closed eye analysis for drowsiness detection. In: 2008 First Workshops on Image Processing Theory, Tools and Applications. 2008. p. 1–7.

Voulodimos A, Doulamis N, Doulamis A, Protopapadakis E. Deep learning for computer vision: a brief review. Computational Intelligence and Neuroscience. 2018 Feb 1;2018.

Wan Q, Kaszowska A, Panetta K, Taylor HA, Agaian S. A comprehensive head-mounted eye tracking review: software solutions, applications, and challenges. Electronic Imaging. 2019 Jan 13;2019(3):654-1.

As the proverbial “windows to the soul”, our eyes are a rich source of information about our internal world.

Eye tracking is a powerful method for collecting eye-related signals, such as gaze direction, pupil size, and blink rate. By analyzing these signals, either in isolation or in relation to the visual scene, important insights can be gained into human behaviors and mental states.

It is a versatile approach to answering a range of fundamental questions, such as: What is this person feeling? What are they interested in? How distracted or drowsy are they? What is their state of mental or physical health?

Why and how do we move our eyes?

Let’s start with a seemingly simple question: Why do we move our eyes in the first place? The answers to this question are crucial for understanding why eye movements are so informative about our behaviors and mental states.

As humans, we move our eyes in very specific ways, known as “movement motifs”. To put these in context, it’s helpful to first explore the anatomy of the eye itself.

How does the anatomy of the eye relate to eye movements?

At the back of the human eye is a light-sensitive sheet of cells called the retina. It contains two types of photoreceptor cells: cones, which provide high-resolution color vision; and rods, which are responsible for low-resolution monochromatic vision (Dowling, 2007).

Cones are packed at the highest density within an extremely small, specialized area known as the fovea, which covers a mere 2° around the focal point of each eye (roughly the width of your thumbnail if you hold your arm at full length away from you).

Figure 1: The binocular visual field in humans extends about 130° vertically and 200° horizontally. While the ability to detect motion is almost uniform over this range, color-vision and sharp form perception are restricted to its most central portion. The physiological basis for this is the limited extent of the highly specialized foveal region within the retina. As a consequence, humans need to move their eyes to take in the relevant details present in a given scene.

The fovea functions like a high-intensity “spotlight”, providing sufficient resolution to distinguish the fine details of the visual scene (such as letters on a page). Some details are still distinguishable in the immediately surrounding parafoveal region (5° around fixation). However, beyond this in the periphery, visual resolution diminishes rapidly and the image becomes blurry.

This implies that to use high-resolution foveal vision to take in the different elements of a scene in detail, we have to move our eyes.
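To get a feel for these angles, the sketch below converts a visual angle into a physical width at a given viewing distance, using the standard relation width = 2 · distance · tan(angle / 2). The arm's length of 65 cm is an assumed, illustrative value, and both the 2° and 5° figures are treated here as total angular widths, which is our reading of the text rather than something it states.

```python
import math


def visual_angle_to_size(angle_deg: float, distance_cm: float) -> float:
    """Width (in cm) of an object that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# Assumed viewing distance: roughly an outstretched arm (varies between people).
ARM_LENGTH_CM = 65.0

fovea_cm = visual_angle_to_size(2.0, ARM_LENGTH_CM)      # foveal region
parafovea_cm = visual_angle_to_size(5.0, ARM_LENGTH_CM)  # parafoveal region

print(f"fovea: {fovea_cm:.1f} cm, parafovea: {parafovea_cm:.1f} cm")
```

Under these assumptions, the foveal region spans only a couple of centimeters at arm's length, which is why reading a page or inspecting a shelf requires many successive eye movements.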

Figure 2: Qualitatively different eye movement motifs can be distinguished. Saccades refer to rapid jumps in gaze from fixation to fixation.

Figure 3: During smooth pursuits, gaze follows a moving object in a continuous manner.

How are eye movements classified?

Eye movements can be classified into three discrete movement motifs: fixations, saccades, and smooth pursuits (Rayner & Castelhano, 2007; Findlay & Walker, 2012).

In contrast to the typically rapid movements of the eyes, fixations are relatively longer periods (0.2–0.6 s) of steady focus on an object. They make use of the high resolution of the fovea to maximize the visual information gained about the focal object.

Smooth pursuits and saccades are types of proper eye movement. Saccades are rapid, point-to-point eye movements that shift the focus of the eyes abruptly (typically in 20–100 ms) to a new fixation point, while smooth pursuits are continuous movements of the eye to track a moving object.

When people visually investigate a scene, they make a series of rapid saccades, interspersed with relatively longer periods of fixations on its key features, or smooth pursuits of moving objects. This pattern of eye movements makes best use of the limited focus of foveal vision to quickly gather detailed visual information.

Importantly, this information is only processed by the brain during periods of fixation or smooth pursuit, and not during saccades. This means that the detail taken in by the foveal “spotlight” depends on the locations and durations of fixations and smooth pursuits in the visual scene.
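As a rough illustration of how saccades and fixations can be separated in recorded data, here is a minimal velocity-threshold sketch. This is one simple, commonly used idea and not a method described in this article: the function name, the 100°/s threshold, and the sample data are all assumptions chosen for illustration. It does not distinguish smooth pursuits, which would need additional logic.

```python
import numpy as np


def classify_samples(gaze_deg, timestamps_s, saccade_threshold_deg_s=100.0):
    """Label each interval between consecutive samples as 'saccade' or 'fixation'.

    gaze_deg: (n, 2) horizontal/vertical gaze angles in degrees.
    timestamps_s: (n,) sample times in seconds.
    The threshold is an assumed, illustrative value.
    """
    gaze = np.asarray(gaze_deg, dtype=float)
    t = np.asarray(timestamps_s, dtype=float)
    # Angular distance between consecutive samples (small-angle approximation).
    step = np.linalg.norm(np.diff(gaze, axis=0), axis=1)
    velocity = step / np.diff(t)  # degrees per second
    return np.where(velocity > saccade_threshold_deg_s, "saccade", "fixation")


# Synthetic 100 Hz recording: steady gaze, a 10 degree jump, steady gaze again.
timestamps = np.arange(10) * 0.01
horizontal = [0.0, 0.1, 0.0, 0.1, 3.0, 7.0, 10.0, 10.1, 10.0, 10.1]
gaze = np.column_stack([horizontal, np.zeros(10)])

labels = classify_samples(gaze, timestamps)
print(labels.tolist())
```

In this synthetic example the three intervals spanning the jump exceed the threshold and are labeled as a saccade, while the slow jitter before and after is labeled as fixation.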

The visual information from the fovea has a powerful influence on a person’s cognitive, emotional, physiological, and neurological states. Therefore, by analyzing a person’s eye movements, alone or in relation to the visual scene in front of them, important insights can be gained into their behaviors and mental states.

What is eye tracking used for?

Given the insights that eye tracking offers, it’s no surprise that it has been applied to many different areas of research and industry (Duchowski, 2002). The scope and possibilities of eye tracking are huge, and continue to expand as novel applications are created and developed.

With eye tracking it is possible to answer fundamental questions about human behavior: What is this person interested in? What are they looking at? What are they feeling? What do their eyes tell us about their health, or their coordination, or their alertness?

The answers to questions such as these are relevant to many different fields. Below, we explore some important examples of current applications of eye tracking.

Academic research

Eye tracking began in 1908 when Edmund Huey built a device in his lab to study how people read. This academic tradition lives on to the present day, with eye tracking still being a widely used experimental method. Eye-tracking results continue to advance the frontiers of academic research, especially in the fields of psychology, neuroscience, and human–computer interaction (HCI). For a diverse list of publications and projects that have used eye tracking in recent years, check out our publications page.

Marketing and design

The movements of the eyes hold a wealth of information about someone’s interests and desires, making eye tracking a powerful tool within the fields of marketing and design. It enables insights to be gained into how users interact with or attend to graphical content (dos Santos et al., 2015), including what they are most interested in. This can help to optimize anything from UX design, to point-of-sale displays, to video content, to online store layouts.

Performance training

In sport and skilled professions, every little movement can matter and make the difference between a poor performance and a good one. Professionals such as athletes, skilled laborers, and surgeons (or their managers or coaches) use eye tracking to understand what visual information is most important to attend to for peak performance, and to train motor coordination and skilled action (Hüttermann et al., 2018; Causer et al., 2014).

Medicine and healthcare

Eye tracking is an invaluable diagnostic and monitoring tool within medicine. It can be applied to diagnose specific conditions, such as concussion (Samadani, 2015), and to improve and complement existing methods of diagnosis (Brunyé et al., 2019). Non-invasive eye tracking methods make it possible for doctors to quickly and easily monitor medical conditions and diseases such as Alzheimer’s (Molitor et al., 2015).

Communications

The eyes express vital information during social interactions. Remote communication/telepresence technologies (such as virtual meeting spaces) are becoming more engaging thanks to the incorporation of eye movement information in virtual avatars.

Interactive applications

Computer-based and virtual systems are exciting and rapidly growing domains of application for eye tracking, especially with regard to user interaction based on eye gaze.

The field of HCI has long pioneered the use of eye movements as active signals for controlling computers (Köles, 2017). These advances are being applied to many different challenges, from the creation of assistive technologies that allow disabled people to interact hands-free with a computer using an “eye mouse”, to prosthetics that respond intelligently to eye gaze (Lukander, 2016).

Eye tracking data can be used to assess a user’s interests and modify online videos and search suggestions in real time (see the Fovea project for an example of this), with implications for online shopping and content creation. In the world of e-learning, this interactive approach is being used to responsively adapt virtual learning content based on eye-related measurements of key factors, such as a learner’s interests, cognitive load, and alertness (Al-Khalifa & George, 2010; Rosch & Vogel-Walcutt, 2013).

Finally, the computer game and VR industry is a hotbed of innovative eye tracking applications, with game designers making use of eye-tracking data to boost performance and improve user immersion and interaction within games. Emerging applications include “foveated rendering”, which boosts frame rates by selectively rendering graphics at the highest resolution within foveal vision (Guenter et al., 2012), and eye-gaze-dependent interfaces, which offer smarter and more intuitive modes of user interaction that respond dynamically to where the user is looking (Corcoran et al., 2012).

What is an eye tracker?

What exactly are eye trackers and how do they work? Broadly defined, the term “eye tracker” describes any system that measures signals from the eyes, including eye movement, blinking, and pupil size.

They come in many different shapes and sizes, ranging from invasive systems that detect the movement of sensors attached to the eye itself (such as in a contact lens), to the more commonly used non-invasive eye trackers.

Non-invasive methods can broadly be divided into electro-oculography, which detects eye movements using electrodes placed near the eyes, and video-oculography (video-based eye tracking), which uses one or more cameras to record videos of the eye region.

What are the main types of video-based eye tracker and how do they work?

Video-based eye-tracking systems can be classified into remote (stationary) and wearable (head-mounted) eye trackers.

Remote

Track eye movements from a distance

System mounted on or near a PC monitor

Subject sits in front of screen, largely stationary

Allow analysis and/or user interaction based on eye movement relative to on-screen content (e.g., websites, computer games, or assistive technologies such as the “eye mouse”)

Wearable

Track eye movements close up

System mounted on wearable eye glasses-like frame

Subject free to move and engage with real and VR environments

Forward-facing camera allows analysis of eye gaze relative to people and objects in view while the subject engages in tasks and interactions (e.g., during sport, shopping, or product use)

Remote systems mount the video camera(s) on or near a stationary object displaying the visual stimulus, such as a PC monitor, and track a person’s eyes from a distance (Narcizo et al., 2013). The person being tracked sits in front of the screen to perform the task of interest (such as reading or browsing a website) and remains largely stationary throughout.

Wearable systems, in contrast, mount the video camera(s) onto a glasses-like frame, allowing the subject to move freely while their eyes are tracked from close up (Wan et al., 2019). Typically, they also include a forward-facing camera that records a video of the person’s field of view, enabling eye-related signals to be analyzed in the context of the changing visual scene.

All video-based eye trackers, whether remote or wearable, detect the appearance and/or features of the eye(s) under the illumination of either “passive”, ambient light or an “active”, near-infrared light source. In active systems, the direct reflection of the light source off the cornea (known as the “glint”) can be used as an additional feature for tracking.

What are the relative advantages and disadvantages of remote and wearable systems?

As remote eye trackers are coupled with a monitor displaying a defined visual stimulus, such as a website, the exact positions of text and visual elements relative to the user’s eyes are always known. Defining the areas of interest on the stimulus in this way facilitates “closed loop” analysis of the collected eye tracking data, based on the known relationships between the stimulus and eye gaze direction.

Additionally, remote eye trackers allow the stimulus to be changed in a controlled way depending on eye-related signals such as gaze direction, opening up rich possibilities for interactive feedback. However, they offer limited scope for the subject to move, which restricts their usage to static, screen-based tasks.

In contrast, the freedom of movement offered by wearable eye trackers vastly expands the range of tasks and situations in which eye-related signals can be recorded and analyzed. The possibility of recording eye tracking data in open-ended environments yields rich, complex video datasets, with methods for analyzing these data continuing to be an important topic of active research (Panetta et al., 2019).

What kinds of eye-related signals can eye trackers measure?

Eye tracking systems can measure a range of eye-related signals, which provide a wealth of information about a person’s behaviors, and mental and physical states (Eckstein et al., 2017):

Gaze direction measurement is crucial for characterizing fixations, saccades, and smooth pursuits, allowing insights to be gained into cognitive, emotional, physiological, and/or neurological processes;

Pupil size and reactivity, as measured by pupillometry, can be used to illuminate cognitive processes as diverse as emotion, language, perception, and memory (Sirois & Brisson, 2014; Köles, 2017);

Blink rate, meanwhile, is a reliable measure of cognitive processing related to learning and goal-oriented behavior (Eckstein et al., 2017);

Eye state (openness/closure) allows physiological states of arousal or drowsiness to be assessed (e.g. Tabrizi & Zoroofi, 2008).

What factors should be considered when choosing an eye tracker?

The question of whether to use a remote or a wearable eye tracker is multifaceted, depending on the particular task and/or question of interest (Punde et al., 2017).

One major factor to consider is the degree of movement that the task involves. Does the task involve the person sitting still; for example, while browsing a website? In this case, remote eye trackers are generally preferred. Or does the person need to move about; for example, while walking through a shop or playing sport? Do they need to interact with multiple screens of different sizes? In such cases, a wearable eye tracker would typically be used.

The task determines the likely factors affecting the performance of the eye tracker. For example, for an outdoor, high-dynamic task, glare from sunlight or slippage (movement of the eye tracker relative to the eyes) could have a substantial influence on performance. In contrast, for long-duration, static recordings in the lab, whether the accuracy and quality of eye-related signals can be maintained over time might be the key indicator of performance.

Therefore, depending on the task, a range of variables might be important to consider when evaluating the performance of an eye tracker, such as (Ehinger et al., 2019):

Accuracy and precision, which quantify the angular error and the inherent “noise” of the gaze direction estimate, respectively;

Recording quality of other eye-related signals, including pupil size, blinking, and eye state;

Robustness (reliability in response) to, for example, changes in lighting, movement of the eye tracker relative to the eyes, subject head translation/rotation, or temporary eye occlusion/closure;

Signal deterioration over time, which affects how long data from the system can be reliably used before the eye tracker requires recalibration.
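To make the first of these variables concrete, the sketch below computes accuracy as the mean angular offset from a known target and precision as the root-mean-square (RMS) of sample-to-sample angular distances. These are common conventions rather than the only possible definitions, and the function names and sample data are hypothetical.

```python
import numpy as np


def angular_distance_deg(a, b):
    """Angle in degrees between corresponding 3D direction vectors in a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    cos = np.clip(np.sum(a * b, axis=-1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))


def accuracy_deg(gaze_dirs, target_dir):
    """Mean angular offset between gaze estimates and the known target direction."""
    return float(np.mean(angular_distance_deg(gaze_dirs, target_dir)))


def precision_rms_deg(gaze_dirs):
    """RMS of the angular distances between successive gaze samples."""
    gaze_dirs = np.asarray(gaze_dirs, dtype=float)
    steps = angular_distance_deg(gaze_dirs[:-1], gaze_dirs[1:])
    return float(np.sqrt(np.mean(steps ** 2)))


# Synthetic samples: the subject fixates a target straight ahead, while the
# estimates sit 1.0 to 1.2 degrees to the side and jitter between the two.
angles = np.radians([1.0, 1.2, 1.0, 1.2])
samples = np.stack([np.sin(angles), np.zeros_like(angles), np.cos(angles)], axis=1)
target = np.array([0.0, 0.0, 1.0])

acc = accuracy_deg(samples, target)
prec = precision_rms_deg(samples)
print(f"accuracy: {acc:.2f} deg, precision (RMS): {prec:.2f} deg")
```

The example shows why both numbers are needed: the estimates here are fairly stable from sample to sample (good precision) yet consistently offset from the target (poorer accuracy).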

More practical considerations such as the cost of the system and its ease of use (in particular whether complicated setup and calibration are required) can be important in selecting the right eye tracker for the job.

Finally, it’s important to remember that any single measure alone is normally insufficient for evaluating the suitability of a given eye tracker to a particular application. Rather, by considering a set of relevant variables, a more well-informed choice can be made.

How is eye gaze direction estimated?

How exactly do video-based eye trackers figure out where someone is looking? In essence, they use information contained in eye images to estimate the direction of a person’s gaze. Gaze estimation is a challenging problem that has been tackled using various approaches (Hansen & Ji, 2010), with active research continuing to improve its accuracy and reliability.

The accuracy and reliability of eye gaze estimation in both remote and wearable eye trackers are affected by factors such as inter-individual differences in eye anatomy. The use of wearable trackers in high-dynamic environments means that their estimation of eye gaze can also be influenced by additional factors, such as varying levels of light outdoors or slippage of the eye tracker during physical activity.

In this section, we explore some key questions about eye gaze estimation, including: Which features (aspects) of the eye image can be used to estimate eye gaze direction? How do the algorithms used to perform the estimation work? What do recent developments in AI-based image analysis have to offer to the challenge of eye gaze estimation?

How do gaze estimation algorithms work?

Gaze estimation algorithms extract meaningful signals from eye images and transform them into estimates of gaze direction or gaze points in 3D space. Although the exact methods vary, all approaches rely on correlations between quantitative features in the eye image and gaze direction (Kar & Corcoran, 2017).

The features used range in complexity—from simple point features (like the pupil center or corneal reflection), to low-dimensional geometric shapes (such as ellipses describing the pupil), to high-dimensional representations of the entire eye region. In practice, combining features at different levels of complexity leads to more robust and accurate gaze estimates.

Figure 4: Gaze estimation algorithms differ along several key dimensions. One is the complexity of features extracted from raw eye images, ranging from simple points to full eye images. Another is the degree to which domain knowledge—about ocular anatomy and motion—informs the assumptions built into the algorithm, distinguishing explicit from implicit approaches.

Since gaze estimation maps an input feature space (the eye image) to an output variable (gaze direction), it can be tackled using either traditional computer vision techniques or machine learning. In recent years, AI-based approaches have become increasingly prominent due to major advances in computer vision (Voulodimos et al., 2018).

How do traditional and AI-based algorithms differ?

While all gaze estimation methods aim to map eye features to gaze direction, they differ in how the transformation is modeled.

Traditional algorithms are based on theoretical and empirical insights and use hand-crafted rules written in code. These typically require only small amounts of calibration data—recorded samples that relate eye features to known gaze targets. Two main types exist:

Regression-based methods, which fit generic mathematical functions to calibration data. These functions implicitly capture the mapping from features to gaze direction.

Model-based methods, which explicitly model the geometry, motion, and/or physiology of the eye in 3D.
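As an illustration of the regression-based idea, the sketch below fits a second-order polynomial that maps pupil-center coordinates to gaze angles using a handful of calibration samples. The choice of polynomial terms, the function names, and the synthetic nine-point calibration data are all assumptions made for this example and do not describe any particular eye tracker.

```python
import numpy as np


def poly_features(pupil_xy):
    """Second-order polynomial terms of pupil-center coordinates."""
    p = np.asarray(pupil_xy, dtype=float)
    x, y = p[:, 0], p[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * y, x ** 2, y ** 2])


def fit_calibration(pupil_xy, gaze_xy):
    """Least-squares fit of the polynomial coefficients to calibration samples."""
    targets = np.asarray(gaze_xy, dtype=float)
    coeffs, *_ = np.linalg.lstsq(poly_features(pupil_xy), targets, rcond=None)
    return coeffs  # shape (6, 2): one column per gaze coordinate


def predict_gaze(coeffs, pupil_xy):
    """Apply the fitted mapping to new pupil-center measurements."""
    return poly_features(pupil_xy) @ coeffs


# Hypothetical 9-point calibration: normalized pupil positions on a 3 x 3 grid,
# with a made-up ground-truth mapping to gaze angles in degrees.
grid = np.array([(x, y) for x in (-1.0, 0.0, 1.0) for y in (-1.0, 0.0, 1.0)])
true_gaze = np.column_stack([
    10.0 * grid[:, 0] + 0.5 * grid[:, 0] * grid[:, 1],
    8.0 * grid[:, 1] + 0.3 * grid[:, 0] ** 2,
])

coeffs = fit_calibration(grid, true_gaze)
estimate = predict_gaze(coeffs, [[0.5, -0.5]])
print(estimate)
```

The fitted function has no notion of eye anatomy; it simply interpolates between the calibration samples, which is what "implicitly" capturing the mapping means in practice.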

AI-based algorithms, in contrast, use large datasets to train neural networks to learn this mapping automatically. The need for large, high-quality training data is one of the key challenges in developing these systems.

Hybrid approaches combine the strengths of both. In these systems, learning-based methods are guided by inductive biases drawn from explicit models of ocular mechanics and optics. NeonNet, the core gaze-estimation pipeline in Neon, is one such hybrid method.

What are the strengths of each approach?

Traditional algorithms are well-suited for controlled environments where lighting is stable, head motion is limited, and calibration can be repeated. Their logic is transparent and modifiable (especially when open source), which can be advantageous for development and debugging.

AI-based methods offer greater robustness in dynamic, real-world conditions. They adapt better to changes in lighting, partial occlusion, and tracker slippage, and can significantly reduce the need for user calibration. These strengths make them ideal for mobile, daily-life eye tracking and for users who prioritize ease of use.

Hybrid methods like NeonNet offer the best of both worlds. They achieve robustness through data-driven learning, maintain accuracy through model-informed structure, and even provide deeper insights—such as tracking the time-varying geometry of the eye (e.g. eyeball position, pupil size)—in real time.

How is the visual scene analyzed to yield meaningful insights?

In addition to analyzing eye-related signals in isolation, analysis of the visual scene or stimulus in relation to these signals provides a powerful approach to gaining insight into a person’s behaviors and mental states.

Imagine someone shopping – if we just knew their eye movements over a ten minute period, that alone would not tell us very much. But if we also knew what had been in their field of view during that time – the products on the shelves, the price tags, the advertising posters – then we could capture their visual journey in full and begin to understand what motivated their choices to buy certain products.

Visual content analysis is the process of identifying and labeling distinct objects and temporal episodes in the video data of the visual scene or stimulus. By analyzing a person’s eye movement data in the context of these objects and episodes, meaningful high-level insights can be drawn about underlying behaviors and mental states.

How is visual content analysis performed?

The approach to visual content analysis used differs radically depending on whether the content of the visual stimulus is known (e.g., in screen-based tasks, such as browsing a website) or unknown beforehand (e.g., in real-world scenarios, such as playing sport).

Remote eye tracking systems typically use a screen to display a defined visual stimulus. This approach allows eye movements to be analyzed directly in relation to known objects, features, and areas of the stimulus (for example, the areas covered by different images on a website), facilitating subsequent interpretation of the eye movement data.

In contrast, for video data collected in high-dynamic situations (e.g., shopping or playing sport) from the forward-facing camera of a wearable eye tracker, visual content analysis requires the objects and episodes in the video to first be identified and labeled before the eye gaze data can be analyzed and interpreted.

How can video data from high-dynamic situations be analyzed?

While manual labeling of the objects or episodes in a scene video is possible, this becomes prohibitively time-consuming as the amount of data increases—this is especially true for pervasive eye tracking in high-dynamic environments.

Therefore, to capitalize on the large amount of information in high-dynamic video datasets, semi- and fully-automated methods of analysis are required, such as area-of-interest (AOI) tracking and semantic analysis (Fritz & Paletta, 2010; Panetta et al., 2019). These methods employ computer vision algorithms to perform object/episode recognition, classification, and labeling.

What are the most common metrics for analyzing eye movements in relation to the visual stimulus?

After labeling, the eye movement data can be summarized in relation to the visual stimulus or scene using a variety of spatial and temporal metrics, and subsequently visualized (Blascheck et al., 2017). These metrics range from scanpaths (relatively unprocessed plots of eye movements over time), to attention maps (summarizing the time spent fixating different parts of the stimulus or scene), to AOI-based metrics such as dwell time (measuring the total time spent fixating within a specific area, for example on a given object).

These metrics enable eye movement data to be meaningfully summarized in relation to the visual content, allowing high-level insights to be drawn about the mental states underlying eye movements.
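As a small example of an AOI-based metric, the sketch below sums fixation durations inside rectangular areas of interest to obtain dwell times. The `Fixation` and `AOI` types, the pixel coordinates, and the AOI names are hypothetical, and the sketch assumes that AOIs do not overlap.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float           # horizontal position in scene or screen pixels
    y: float           # vertical position in scene or screen pixels
    duration_s: float  # fixation duration in seconds


@dataclass
class AOI:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def dwell_times(fixations, aois):
    """Total fixation time per AOI; fixations outside every AOI are ignored."""
    totals = {aoi.name: 0.0 for aoi in aois}
    for fix in fixations:
        for aoi in aois:
            if aoi.contains(fix.x, fix.y):
                totals[aoi.name] += fix.duration_s
                break  # assumes non-overlapping AOIs
    return totals


aois = [AOI("product", 0, 0, 100, 100), AOI("price_tag", 120, 0, 200, 50)]
fixations = [
    Fixation(50, 50, 0.25),
    Fixation(150, 20, 0.25),
    Fixation(60, 40, 0.5),
    Fixation(300, 300, 0.25),  # lands outside both AOIs
]

totals = dwell_times(fixations, aois)
print(totals)
```

For wearable recordings, the AOI rectangles would first have to be located in each frame of the scene video, for example by the automated labeling methods described above, before the same summation can be applied.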

Summary

When analyzed alone or in relation to a visual stimulus or scene, eye movement data obtained from an eye tracker can yield rich insights into a person’s behaviors and mental states. The insights gained from eye tracking are valuable in diverse fields, from psychology to medicine, marketing to performance training, and human–computer interaction (HCI) research to computer game rendering.

Numerous high-quality gaze estimation algorithms have been developed, and progress continues to be made in improving both traditional and AI-based algorithms. These advances offer greater accuracy, enhanced reliability, and increased ease of use. In addition to gaze direction, other eye-related signals, such as pupil size, blink rate, and eye state (openness/closure), are useful sources of information.

The most commonly used types of eye-tracking systems are remote and wearable video-based eye trackers, with the decision of which system to use depending on the particular situation or question of interest. While remote systems facilitate closed-loop analysis of the visual stimulus in static situations, wearable eye trackers allow freedom of movement, yielding expressive, high-dynamic datasets that open up rich opportunities for applying eye tracking to novel situations and questions.

References

Al-Khalifa HS, George RP. Eye tracking and e-learning: seeing through your students' eyes. eLearn. 2010 Jun 1;2010(6).

Blascheck T, Kurzhals K, Raschke M, Burch M, Weiskopf D, Ertl T. Visualization of eye tracking data: a taxonomy and survey. Computer Graphics Forum. 2017;36(8):260–84.

Brunyé TT, Drew T, Weaver DL, Elmore JG. A review of eye tracking for understanding and improving diagnostic interpretation. Cognitive Research: Principles and Implications. 2019 Feb 22;4(1):7.

Corcoran PM, Nanu F, Petrescu S, Bigioi P. Real-time eye gaze tracking for gaming design and consumer electronics systems. IEEE Transactions on Consumer Electronics. 2012 May;58(2):347–55.

Dowling J. Retina. Scholarpedia. 2007 Dec 7;2(12):3487.

dos Santos RD, de Oliveira JH, Rocha JB, Giraldi JD. Eye tracking in neuromarketing: a research agenda for marketing studies. International Journal of Psychological Studies. 2015 Mar 1;7(1):32.

Duchowski AT. A breadth-first survey of eye-tracking applications. Behavior Research Methods, Instruments, & Computers. 2002 Nov 1;34(4):455–70.

Eckstein MK, Guerra-Carrillo B, Miller Singley AT, Bunge SA. Beyond eye gaze: What else can eyetracking reveal about cognition and cognitive development? Developmental Cognitive Neuroscience. 2017 Jun 1;25:69–91.

Ehinger BV, Groß K, Ibs I, König P. A new comprehensive eye-tracking test battery concurrently evaluating the Pupil Labs glasses and the EyeLink 1000. PeerJ. 2019 Jul 9;7:e7086.

Findlay J, Walker R. Human saccadic eye movements. Scholarpedia. 2012 Jul 27;7(7):5095.

Fritz G, Paletta L. Semantic analysis of human visual attention in mobile eye tracking applications. In: 2010 IEEE International Conference on Image Processing. 2010. p. 4565–8.

Guenter B, Finch M, Drucker S, Tan D, Snyder J. Foveated 3D graphics. ACM Trans Graph. 2012 Nov 1;31(6):164:1–164:10.

Hansen DW, Ji Q. In the eye of the beholder: a survey of models for eyes and gaze. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2010 Mar;32(3):478–500.

Hüttermann S, Noël B, Memmert D. Eye tracking in high-performance sports: evaluation of its application in expert athletes. International Journal of Computer Science in Sport. 2018 Dec 1;17(2):182–203.

Kar A, Corcoran P. A review and analysis of eye-gaze estimation systems, algorithms and performance evaluation methods in consumer platforms. IEEE Access. 2017;5:16495–519.

Köles M. A review of pupillometry for human-computer interaction studies. Periodica Polytechnica Electrical Engineering and Computer Science. 2017 Sep 1;61(4):320–6.

Lukander K. A short review and primer on eye tracking in human computer interaction applications. arXiv preprint arXiv:1609.07342. 2016 Sep 23.

Molitor RJ, Ko PC, Ally BA. Eye movements in Alzheimer’s disease. J Alzheimers Dis. 2015;44(1):1–12.

Narcizo FB, Queiroz JER de, Gomes HM. Remote eye tracking systems: technologies and applications. In: 2013 26th Conference on Graphics, Patterns and Images Tutorials. 2013. p. 15–22.

Panetta K, Wan Q, Kaszowska A, Taylor HA, Agaian S. Software architecture for automating cognitive science eye-tracking data analysis and object annotation. IEEE Transactions on Human-Machine Systems. 2019 Jun;49(3):268–77.

Punde PA, Jadhav ME, Manza RR. A study of eye tracking technology and its applications. In: 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM). 2017. p. 86–90.

Rayner K, Castelhano M. Eye movements. Scholarpedia. 2007 Oct 9;2(10):3649.

Rosch JL, Vogel-Walcutt JJ. A review of eye-tracking applications as tools for training. Cogn Tech Work. 2013 Aug 1;15(3):313–27.

Samadani U. A new tool for monitoring brain function: eye tracking goes beyond assessing attention to measuring central nervous system physiology. Neural Regen Res. 2015 Aug;10(8):1231–3.

Sirois S, Brisson J. Pupillometry. WIREs Cognitive Science. 2014;5(6):679–92.

Tabrizi PR, Zoroofi RA. Open/closed eye analysis for drowsiness detection. In: 2008 First Workshops on Image Processing Theory, Tools and Applications. 2008. p. 1–7.

Voulodimos A, Doulamis N, Doulamis A, Protopapadakis E. Deep learning for computer vision: a brief review. Computational Intelligence and Neuroscience. 2018 Feb 1;2018.

Wan Q, Kaszowska A, Panetta K, Taylor HA, Agaian S. A comprehensive head-mounted eye tracking review: software solutions, applications, and challenges. Electronic Imaging. 2019 Jan 13;2019(3):654-1.

As the proverbial “windows to the soul”, our eyes are a rich source of information about our internal world.

Eye tracking is a powerful method for collecting eye-related signals, such as gaze direction, pupil size, and blink rate. By analyzing these signals, either in isolation or in relation to the visual scene, important insights can be gained into human behaviors and mental states.

It is a versatile approach to answering a range of fundamental questions, such as: What is this person feeling? What are they interested in? How distracted or drowsy are they? What is their state of mental or physical health?

Why and how do we move our eyes?

Let’s start with a seemingly simple question: Why do we move our eyes in the first place? The answers to this question are crucial for understanding why eye movements are so informative about our behaviors and mental states.

As humans, we move our eyes in very specific ways, known as “movement motifs”. To put these in context, it’s helpful to first explore the anatomy of the eye itself.

How does the anatomy of the eye relate to eye movements?

At the back of the human eye is a light-sensitive sheet of cells called the retina. It contains two types of photoreceptor cells: cones, which provide high-resolution color vision; and rods, which are responsible for low-resolution monochromatic vision (Dowling, 2007).

Cones are packed at the highest density within an extremely small, specialized area known as the fovea, which covers a mere 2° around the focal point of each eye (roughly the width of your thumbnail if you hold your arm at full length away from you).

Figure 1: The binocular visual field in humans extends about 130° vertically and 200° horizontally. While the ability to detect motion is almost uniform over this range, color-vision and sharp form perception are restricted to its most central portion. The physiological basis for this is the limited extent of the highly specialized foveal region within the retina. As a consequence, humans need to move their eyes to take in the relevant details present in a given scene.

The fovea functions like a high-intensity “spotlight”, providing sufficient resolution to distinguish the fine details of the visual scene (such as letters on a page). Some details are still distinguishable in the immediately surrounding parafoveal region (5° around fixation). However, beyond this in the periphery, visual resolution diminishes rapidly and the image becomes blurry.

This implies that to use high-resolution foveal vision to take in the different elements of a scene in detail, we have to move our eyes.

Figure 2: Qualitatively different eye movement motifs can be distinguished. Saccades refer to rapid jumps in gaze from fixation to fixation.

Figure 3: During smooth pursuits, gaze follows a moving object in a continuous manner.

How are eye movements classified?

Eye movements can be classified into three discrete movement motifs: fixations, saccades, and smooth pursuits (Rayner & Castelhano, 2007; Findlay & Walker, 2012).

In contrast to the typically rapid movements of the eyes, fixations are relatively longer periods (0.2–0.6 s) of steady focus on an object. They make use of the high resolution of the fovea to maximize the visual information gained about the focal object.

Smooth pursuits and saccades are types of proper eye movement. Saccades are rapid, point-to-point eye movements that shift the focus of the eyes abruptly (typically in 20–100 ms) to a new fixation point, while smooth pursuits are continuous movements of the eye to track a moving object.

When people visually investigate a scene, they make a series of rapid saccades, interspersed with relatively longer periods of fixations on its key features, or smooth pursuits of moving objects. This pattern of eye movements makes best use of the limited focus of foveal vision to quickly gather detailed visual information. Importantly, this information is only processed by the brain during periods of fixation or smooth pursuit, and not during saccades. This means that the detail taken in by the foveal “spotlight” depends on the locations and durations of fixations and smooth pursuits in the visual scene.

The visual information from the fovea has a powerful influence on a person’s cognitive, emotional, physiological, and neurological states. Therefore, by analyzing a person’s eye movements, alone or in relation to the visual scene in front of them, important insights can be gained into their behaviors and mental states.

What is eye tracking used for?

Given the insights that eye tracking offers, it’s no surprise that it has been applied to many different areas of research and industry (Duchowski, 2002). The scope and possibilities of eye tracking are huge, and continue to expand as novel applications are created and developed.

With eye tracking it is possible to answer fundamental questions about human behavior: What is this person interested in? What are they looking at? What are they feeling? What do their eyes tell us about their health, or their coordination, or their alertness?

The answers to questions such as these are relevant to many different fields. Below, we explore some important examples of current applications of eye tracking.

Academic research

Eye tracking began in 1908 when Edmund Huey built a device in his lab to study how people read. This academic tradition lives on to the present day, with eye tracking still being a widely used experimental method. Eye-tracking results continue to advance the frontiers of academic research, especially in the fields of psychology, neuroscience, and human–computer interaction (HCI) (for a diverse list of publications and projects that have used eye tracking in recent years, check out our publications page).

Marketing and design

The movements of the eyes hold a wealth of information about someone’s interests and desires, making eye tracking a powerful tool within the fields of marketing and design. It enables insights to be gained into how users interact with or attend to graphical content (dos Santos et al., 2015), including what they are most interested in. This can help to optimize anything from UX design, to point-of-sale displays, to video content, to online store layouts.

Performance training

In sport and skilled professions, every little movement can matter and make the difference between a poor performance and a good one. Professionals such as athletes, skilled laborers, and surgeons (or their managers or coaches) use eye tracking to understand which visual information is most important to attend to for peak performance, and to train motor coordination and skilled action (Hüttermann et al., 2018; Causer et al., 2014).

Medicine and healthcare

Eye tracking is an invaluable diagnostic and monitoring tool within medicine. It can be applied to diagnose specific conditions, such as concussion (Samadani, 2015), and to improve and complement existing methods of diagnosis (Brunyé et al., 2019). Non-invasive eye tracking methods make it possible for doctors to quickly and easily monitor medical conditions and diseases such as Alzheimer’s (Molitor et al., 2015).

Communications

The eyes express vital information during social interactions. Remote communication/telepresence technologies (such as virtual meeting spaces) are becoming more engaging thanks to the incorporation of eye movement information in virtual avatars.

Interactive applications

Computer-based and virtual systems are exciting and rapidly growing domains of application for eye tracking, especially with regard to user interaction based on eye gaze.

The field of HCI has long pioneered the use of eye movements as active signals for controlling computers (Köles, 2017). These advances are being applied to many different challenges, from the creation of assistive technologies that allow disabled people to interact hands-free with a computer using an “eye mouse”, to prosthetics that respond intelligently to eye gaze (Lukander, 2016).

Eye tracking data can be used to assess a user’s interests and modify online videos and search suggestions in real time (see the Fovea project for an example of this), with implications for online shopping and content creation. In the world of e-learning, this interactive approach is being used to responsively adapt virtual learning content based on eye-related measurements of key factors, such as a learner’s interests, cognitive load, and alertness (Al-Khalifa & George, 2010; Rosch & Vogel-Walcutt, 2013).

Finally, the computer game and VR industry is a hotbed of innovative eye tracking applications, with game designers making use of eye-tracking data to boost performance and improve user immersion and interaction within games. Emerging applications include “foveated rendering”, which boosts frame rates by selectively rendering graphics at the highest resolution within foveal vision (Guenter et al., 2012), and eye-gaze-dependent interfaces, which offer smarter and more intuitive modes of user interaction that respond dynamically to where the user is looking (Corcoran et al., 2012).
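The core idea behind foveated rendering can be sketched in a few lines: the further a pixel lies from the current gaze point, the lower the resolution at which it needs to be rendered. The example below is a simplified illustration, not the method of Guenter et al. or of any rendering engine. The eccentricity cut-offs are derived from the 2° foveal and 5° parafoveal widths mentioned earlier (treated as full widths, so 1° and 2.5° from the gaze point), and the resolution scales and the pixels-per-degree value are assumptions.

```python
import math
from typing import Tuple


def resolution_scale(
    pixel_xy: Tuple[float, float],
    gaze_xy: Tuple[float, float],
    pixels_per_degree: float,
) -> float:
    """Return a hypothetical render-resolution scale for a pixel given the gaze point."""
    # Eccentricity: angular distance of the pixel from the gaze point.
    distance_px = math.hypot(pixel_xy[0] - gaze_xy[0], pixel_xy[1] - gaze_xy[1])
    eccentricity_deg = distance_px / pixels_per_degree

    if eccentricity_deg <= 1.0:   # within the foveal region
        return 1.0                # full resolution
    if eccentricity_deg <= 2.5:   # within the parafoveal region
        return 0.5                # assumed intermediate quality
    return 0.25                   # assumed peripheral quality


# Hypothetical setup: gaze at the center of a 1920x1080 display, 40 px per degree.
gaze = (960.0, 540.0)
near = resolution_scale((970.0, 540.0), gaze, pixels_per_degree=40.0)
mid = resolution_scale((1040.0, 540.0), gaze, pixels_per_degree=40.0)
far = resolution_scale((100.0, 100.0), gaze, pixels_per_degree=40.0)
print(near, mid, far)
```

Real systems typically blend smoothly between quality levels and must account for eye tracker latency, neither of which is modeled here.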

What is an eye tracker?

What exactly are eye trackers and how do they work? Broadly defined, the term “eye tracker” describes any system that measures signals from the eyes, including eye movement, blinking, and pupil size.

They come in many different shapes and sizes, ranging from invasive systems that detect the movement of sensors attached to the eye itself (such as in a contact lens), to the more commonly used non-invasive eye trackers.

Non-invasive methods can broadly be divided into electro-oculography, which detects eye movements using electrodes placed near the eyes, and video-oculography (video-based eye tracking), which uses one or more cameras to record videos of the eye region.

What are the main types of video-based eye tracker and how do they work?

Video-based eye-tracking systems can be classified into remote (stationary) and wearable (head-mounted) eye trackers.

Remote

Track eye movements from a distance

System mounted on or near a PC monitor

Subject sits in front of screen, largely stationary

Allow analysis and/or user interaction based on eye movement relative to on-screen content (e.g., websites, computer games, or assistive technologies such as the “eye mouse”)

Wearable

Track eye movements close up

System mounted on a wearable, eyeglasses-like frame

Subject free to move and engage with real and VR environments

Forward-facing camera allows analysis of eye gaze relative to people and objects in view while the subject engages in tasks and interactions (e.g., during sport, shopping, or product use)

Remote systems mount the video camera(s) on or near a stationary object displaying the visual stimulus, such as a PC monitor, and track a person’s eyes from a distance (Narcizo et al., 2013). The person being tracked sits in front of the screen to perform the task of interest (such as reading or browsing a website) and remains largely stationary throughout.

Wearable systems, in contrast, mount the video camera(s) onto a glasses-like frame, allowing the subject to move freely while their eyes are tracked from close up (Wan et al., 2019). Typically, they also include a forward-facing camera that records a video of the person’s field of view, enabling eye-related signals to be analyzed in the context of the changing visual scene.

All video-based eye trackers, whether remote or wearable, detect the appearance and/or features of the eye(s) under the illumination of either “passive”, ambient light or an “active”, near-infrared light source. In active systems, the direct reflection of the light source off the cornea (known as the “glint”) can be used as an additional feature for tracking.

What are the relative advantages and disadvantages of remote and wearable systems?

As remote eye trackers are coupled with a monitor displaying a defined visual stimulus, such as a website, the exact positions of text and visual elements relative to the user’s eyes are always known. Defining the areas of interest on the stimulus in this way facilitates “closed loop” analysis of the collected eye tracking data, based on the known relationships between the stimulus and eye gaze direction.
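Because the on-screen positions of areas of interest are known, gaze samples can be assigned to them directly. The sketch below shows one simple way to do this: rectangular areas of interest and a per-area total of viewing time. The class, the function, the area names, and the gaze samples are all hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Sequence, Tuple


@dataclass(frozen=True)
class AreaOfInterest:
    """Axis-aligned rectangle in screen pixels (origin at top-left)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gaze_x: float, gaze_y: float) -> bool:
        return (self.x <= gaze_x < self.x + self.width
                and self.y <= gaze_y < self.y + self.height)


def dwell_times(
    gaze_samples: Iterable[Tuple[float, float]],
    areas: Sequence[AreaOfInterest],
    sample_interval_s: float,
) -> Dict[str, float]:
    """Sum the time (seconds) that gaze samples fall inside each area."""
    totals = {area.name: 0.0 for area in areas}
    for gaze_x, gaze_y in gaze_samples:
        for area in areas:
            if area.contains(gaze_x, gaze_y):
                totals[area.name] += sample_interval_s
                break  # count each sample for at most one area
    return totals


# Hypothetical web page layout and gaze samples recorded at 100 Hz.
areas = [
    AreaOfInterest("headline", 0, 0, 800, 100),
    AreaOfInterest("buy_button", 600, 400, 200, 80),
]
samples = [(100, 50), (120, 60), (650, 420), (300, 300)]
totals = dwell_times(samples, areas, sample_interval_s=0.01)
print(totals)
```

The last sample falls outside both areas and is not counted, which is the usual treatment for gaze that lands on unlabeled parts of the stimulus.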

Additionally, remote eye trackers allow the stimulus to be changed in a controlled way depending on eye-related signals such as gaze direction, opening up rich possibilities for interactive feedback. However, they offer limited scope for the subject to move, which restricts their usage to static, screen-based tasks.

In contrast, the freedom of movement offered by wearable eye trackers vastly expands the range of tasks and situations in which eye-related signals can be recorded and analyzed. The possibility of recording eye tracking data in open-ended environments yields rich, complex video datasets, with methods for analyzing these data continuing to be an important topic of active research (Panetta et al., 2019).

What kinds of eye-related signals can eye trackers measure?

Eye tracking systems can measure a range of eye-related signals, which provide a wealth of information about a person’s behaviors, and mental and physical states (Eckstein et al., 2017):

Gaze direction measurement is crucial for characterizing fixations, saccades, and smooth pursuits, allowing insights to be gained into cognitive, emotional, physiological, and/or neurological processes;

Pupil size and reactivity, as measured by pupillometry, can be used to illuminate cognitive processes as diverse as emotion, language, perception, and memory (Sirois & Brisson, 2014; Köles, 2017);

Blink rate, meanwhile, is a reliable measure of cognitive processing related to learning and goal-oriented behavior (Eckstein et al., 2017);

Eye state (openness/closure) allows physiological states of arousal or drowsiness to be assessed (e.g. Tabrizi & Zoroofi, 2008).
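As an illustration of how one of these signals can be derived from another, blink rate can be estimated from a stream of eye-state samples by counting transitions from open to closed. This is a minimal sketch under simplifying assumptions: the eye state is already available as a clean open/closed value per sample, and every closure counts as a blink. The function names and the data are hypothetical.

```python
from typing import Sequence


def count_blinks(eye_open: Sequence[bool]) -> int:
    """Count transitions from open (True) to closed (False)."""
    return sum(
        1 for previous, current in zip(eye_open, eye_open[1:])
        if previous and not current
    )


def blink_rate_per_minute(eye_open: Sequence[bool], sample_rate_hz: float) -> float:
    """Blinks per minute over the whole recording."""
    duration_min = len(eye_open) / sample_rate_hz / 60.0
    return count_blinks(eye_open) / duration_min


# Hypothetical 60-second recording at 10 Hz containing three short closures.
eye_state = [True] * 600
for start in (100, 300, 500):
    eye_state[start:start + 2] = [False, False]

blinks = count_blinks(eye_state)
rate = blink_rate_per_minute(eye_state, sample_rate_hz=10.0)
print(blinks, rate)
```

A practical implementation would also need to separate blinks from longer eye closures (for example, during drowsiness) and from moments when tracking is lost.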

What factors should be considered when choosing an eye tracker?

The question of whether to use a remote or a wearable eye tracker is multifaceted, depending on the particular task and/or question of interest (Punde et al., 2017).

One major factor to consider is the degree of movement that the task involves. Does the task involve the person sitting still; for example, while browsing a website? In this case, remote eye trackers are generally preferred. Or does the person need to move about; for example, while walking through a shop or playing sport? Do they need to interact with multiple screens of different sizes? In such cases, a wearable eye tracker would typically be used.

The task determines the likely factors affecting the performance of the eye tracker. For example, for an outdoor, high-dynamic task, glare from sunlight or slippage (movement of the eye tracker relative to the eyes) could have a substantial influence on performance. In contrast, for long-duration, static recordings in the lab, whether the accuracy and quality of eye-related signals can be maintained over time might be the key indicator of performance.

Therefore, depending on the task, a range of variables might be important to consider when evaluating the performance of an eye tracker, such as (Ehinger et al., 2019):

Accuracy and precision, which quantify the angular error and the inherent “noise” of the gaze direction estimate, respectively;

Recording quality of other eye-related signals, including pupil size, blinking, and eye state;

Robustness (reliability in response) to, for example, changes in lighting, movement of the eye tracker relative to the eyes, subject head translation/rotation, or temporary eye occlusion/closure;

Signal deterioration over time, which affects how long data from the system can be reliably used before the eye tracker requires recalibration.

More practical considerations such as the cost of the system and its ease of use (in particular whether complicated setup and calibration are required) can be important in selecting the right eye tracker for the job.

Finally, it’s important to remember that any single measure alone is normally insufficient for evaluating the suitability of a given eye tracker to a particular application. Rather, by considering a set of relevant variables, a more well-informed choice can be made.
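To make the distinction between accuracy and precision concrete, the sketch below computes both for a set of gaze samples recorded while a person looks at a known target. Accuracy is taken as the mean angular offset from the target, and precision as the root mean square of sample-to-sample angular distances, which is one commonly used definition among several. Gaze and target positions are given as horizontal and vertical angles in degrees, and the Euclidean distance is used as a small-angle approximation. The function names and the data are assumptions for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (horizontal, vertical) gaze angle in degrees


def accuracy_deg(gaze: Sequence[Point], target: Point) -> float:
    """Mean angular offset between gaze samples and the target (lower is better)."""
    errors = [math.hypot(x - target[0], y - target[1]) for x, y in gaze]
    return sum(errors) / len(errors)


def precision_rms_deg(gaze: Sequence[Point]) -> float:
    """RMS of angular distances between consecutive samples (lower is better)."""
    squared = [
        (x2 - x1) ** 2 + (y2 - y1) ** 2
        for (x1, y1), (x2, y2) in zip(gaze, gaze[1:])
    ]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical samples: consistently about 1 degree right of the target,
# with roughly 0.1 degrees of sample-to-sample jitter.
target = (0.0, 0.0)
gaze = [(1.0, 0.0), (1.0, 0.1), (1.0, 0.0), (1.0, -0.1)]

accuracy = accuracy_deg(gaze, target)
precision = precision_rms_deg(gaze)
print(f"accuracy: {accuracy:.3f} deg, precision (RMS): {precision:.3f} deg")
```

In this example the estimate is fairly precise but not very accurate: the samples cluster tightly, yet the cluster sits about 1° away from the target.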

How is eye gaze direction estimated?

How exactly do video-based eye trackers figure out where someone is looking? In essence, they use information contained in eye images to estimate the direction of a person’s gaze. Gaze estimation is a challenging problem that has been tackled using various approaches (Hansen & Ji, 2010), with active research continuing to improve its accuracy and reliability.

The accuracy and reliability of eye gaze estimation in both remote and wearable eye trackers are affected by factors such as inter-individual differences in eye anatomy. The use of wearable trackers in high-dynamic environments means that their estimation of eye gaze can also be influenced by additional factors, such as varying levels of light outdoors or slippage of the eye tracker during physical activity.

In this section, we explore some key questions about eye gaze estimation, including: Which features (aspects) of the eye image can be used to estimate eye gaze direction? How do the algorithms used to perform the estimation work? What do recent developments in AI-based image analysis have to offer to the challenge of eye gaze estimation?

How do gaze estimation algorithms work?