Taking Flight: Decoding Pilot Cognition in the Real World

Research Digest

January 13, 2026

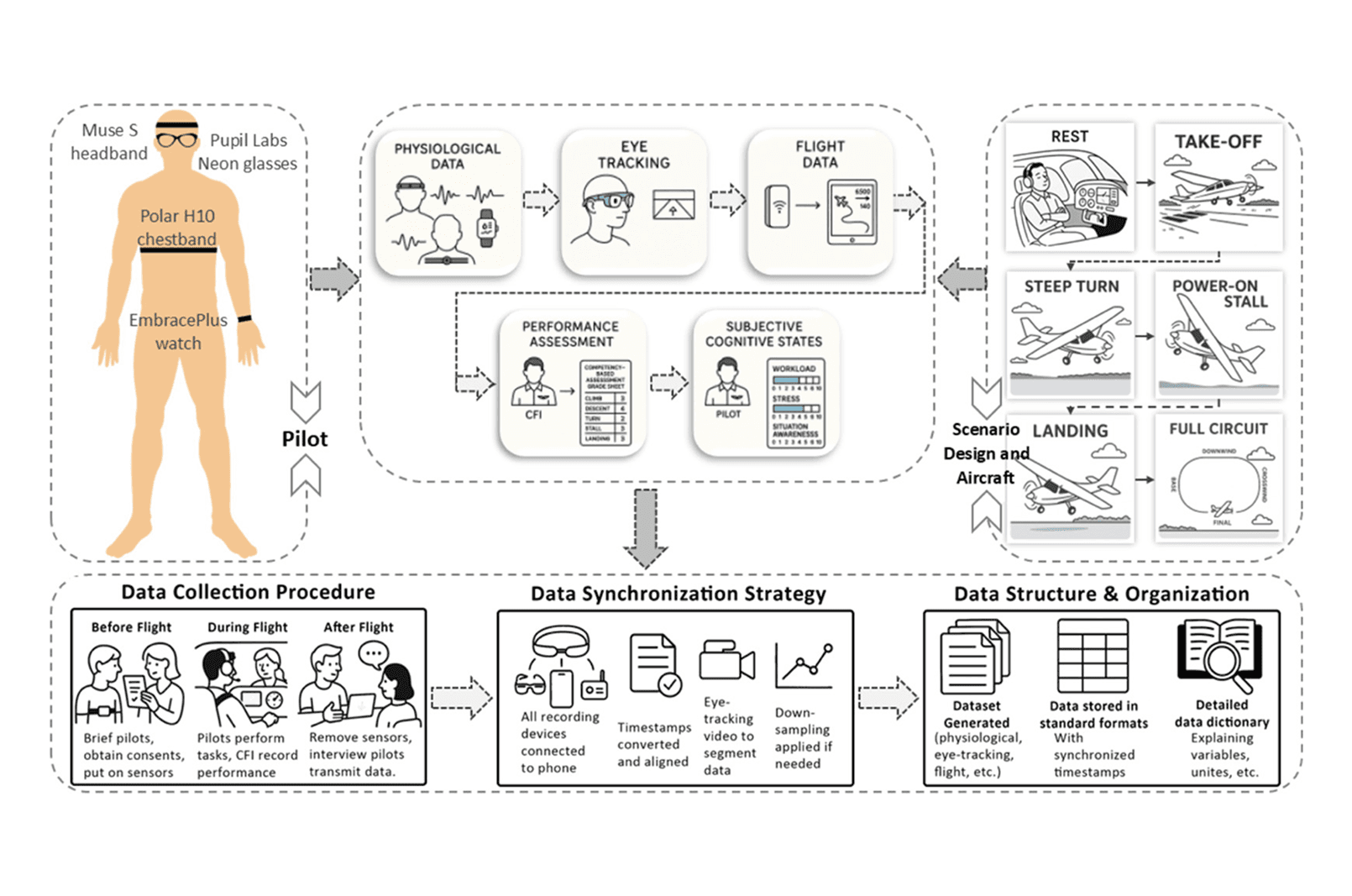

Figure: Graphical overview of the experimental setup, data collection procedure, and structure of the resulting dataset. Source: Xu, R., Cao, S., Barnett-Cowan, M., Bulbul, G., Irving, E., Niechwiej-Szwedo, E., & Kearns, S. (2025). An in-flight multimodal data collection method for assessing pilot cognitive states and performance in general aviation. MethodsX, 103589.

The accompanying video was recorded with a Neon eye tracker. It is not directly related to this research and is included for illustrative purposes only.

The Limits of the Simulator

Aviation safety has improved dramatically over the years, yet human factors such as workload, stress, and loss of situational awareness remain a primary factor in 70–80% of accidents. Traditionally, researchers have relied on Flight Simulation Training Devices to study these phenomena. While simulators provide a safe and controlled environment, they cannot fully reproduce the psychological realism or consequences of actual flight. This reality gap can alter pilot behavior, limiting our ability to understand the true cognitive demands of critical moments.

Bringing the Lab into the Cockpit

To address this gap, Rongbing Xu and colleagues at the University of Waterloo developed a standardized methodology for collecting high-quality multimodal data during real-world general aviation flights. By equipping pilots with a suite of consumer-grade wearable sensors, the team aims to capture pilots' physiological and cognitive states during general aviation flight training, such as flights in a Cessna 172. This represents a significant step forward, moving research out of the lab and into the skies.

A Multimodal Sensor Suite

The study combined a comprehensive set of sensors to build a holistic view of pilot performance and physiological responses. The methodology synchronizes four distinct data streams:

Eye Tracking: Neon eye tracking glasses recorded gaze, pupil diameter, and blinks at a sampling rate of 200 Hz, revealing visual scan patterns for studying pilot attention and mental workload.

Physiological Signals: A Muse S headband (EEG), a Polar H10 chest strap (ECG), and an EmbracePlus wristband (electrodermal activity and skin temperature) provided continuous physiological measures for studying stress and workload.

Flight Telemetry: Sentry Plus ADS-B receivers logged altitude, speed, pitch, and other aircraft parameters.

Expert Assessment & Self-Reports: A Certified Flight Instructor (CFI) rated maneuvers and ensured safety, while pilots provided brief post-maneuver self-reports of workload, stress, and situation awareness.
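Because instructor ratings and self-reports are collected per maneuver, the continuous sensor streams are naturally summarized over maneuver windows. The sketch below computes a blink rate for one such window. It assumes blink onset times and maneuver start/end times are already expressed in seconds on a shared clock; the `Maneuver` class and `blinks_per_minute` function are hypothetical illustrations, not part of the authors' published pipeline.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Maneuver:
    # Hypothetical maneuver segment; times are seconds on a shared clock (assumption).
    name: str
    start_s: float
    end_s: float


def blinks_per_minute(blink_onsets_s: Sequence[float], window: Maneuver) -> float:
    """Count blink onsets in [start_s, end_s) and normalize to blinks per minute."""
    duration_s = window.end_s - window.start_s
    if duration_s <= 0:
        raise ValueError("maneuver window must have a positive duration")
    count = sum(1 for t in blink_onsets_s if window.start_s <= t < window.end_s)
    return count * 60.0 / duration_s


if __name__ == "__main__":
    # Illustrative numbers only, not study data.
    steep_turn = Maneuver("steep turn", 120.0, 180.0)
    blinks = [100.0, 121.5, 130.2, 150.0, 179.9, 185.0]
    print(blinks_per_minute(blinks, steep_turn))  # 4 blinks in 60 s -> 4.0
```

The same windowing pattern could be reused for other per-maneuver summaries (for example, mean pupil diameter), so that each maneuver pairs one set of sensor features with the corresponding CFI rating and self-report.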

Key Insights and Feasibility

The protocol was tested with 25 pilots, ranging from students to licensed aviators, across a range of real flight scenarios, including take-offs, steep turns, stalls, and landings. The study yielded 20 complete datasets, demonstrating that high-quality data collection is feasible in a confined cockpit without compromising safety.

Operational Feasibility: Pilots adapted well to the sensors and reported little discomfort or distraction, indicating that the setup is unobtrusive.

Ecological Validity: Collecting data in real flight captures genuine cognitive stressors, nuances often lost in simulation, and supports the development of machine learning models that detect cognitive overload or incapacitation.

Data Synchronization: Physiological, gaze, and flight telemetry data were successfully aligned using timestamps and Neon scene video.
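Timestamp-based alignment of streams recorded at different rates can be sketched as nearest-neighbor matching: for each sample of a reference stream (here, telemetry), take the closest sample from another stream and discard matches that are too far apart. This minimal sketch assumes every stream has already been converted to a shared clock in seconds and is sorted by time; the function names and the tolerance value are hypothetical, and the study's use of Neon scene video to verify alignment is not modeled.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def nearest_index(timestamps: Sequence[float], t: float) -> int:
    """Index of the sample in sorted, non-empty `timestamps` closest to time t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose the closer neighbor; ties go to the earlier sample.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(reference_ts: Sequence[float], other_ts: Sequence[float],
          other_values: Sequence[T], tolerance_s: float) -> List[Optional[T]]:
    """For each reference time, return the nearest value from another stream,
    or None when no sample lies within tolerance_s seconds."""
    if not other_ts:
        return [None] * len(reference_ts)
    aligned: List[Optional[T]] = []
    for t in reference_ts:
        j = nearest_index(other_ts, t)
        aligned.append(other_values[j] if abs(other_ts[j] - t) <= tolerance_s else None)
    return aligned


if __name__ == "__main__":
    # Illustrative values: 1 Hz telemetry times and irregular heart-rate samples.
    telemetry_ts = [0.0, 1.0, 2.0, 3.0]
    hr_ts = [0.1, 0.9, 2.6, 5.0]
    hr_bpm = [72, 74, 80, 85]
    print(align(telemetry_ts, hr_ts, hr_bpm, tolerance_s=0.5))  # [72, 74, None, 80]
```

Returning None rather than the nearest value when the gap exceeds the tolerance keeps dropouts (for example, a sensor briefly losing contact) visible instead of silently filling them. In a pandas-based workflow, `pandas.merge_asof` with `direction="nearest"` and a `tolerance` performs the same kind of matching on sorted DataFrames.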

Toward Smarter Training and Safer Cockpits

This methodology lays the foundation for a new era of aviation human factors research, offering detailed insight into how pilots respond to operational demands by measuring workload, stress, attention, and other cognitive states in real flight conditions. These insights enable data-driven performance assessments, helping instructors identify areas where pilots may need additional support or targeted training.

The approach also allows for personalized pilot training programs tailored to individual cognitive and physiological responses, rather than relying solely on standardized procedures. Additionally, the rich multimodal dataset can inform the design of human-centered cockpit interfaces, such as adaptive displays or alert systems, that respond to a pilot’s current cognitive state.

Overall, this methodology supports the development of safer, more effective training and operational protocols, ultimately enhancing aviation safety.

Further Resources

Full article: https://www.sciencedirect.com/science/article/pii/S2215016125004339

Research Center:

Waterloo Institute for Sustainable Aeronautics (WISA), Waterloo, Ontario, Canada

University of Waterloo, Waterloo, Ontario, Canada